What is the world if not a collection of stories? And what is a globalized world without the capacity to share those stories across cultures, borders, and languages? Dubbing has long served as a bridge between screens and diverse audiences. However, as with every technological shift, we now find ourselves entering a new era: that of artificial intelligence. What challenges does this emerging paradigm present to the entertainment industry, particularly regarding dubbing?

Figure 1: Idina Menzel’s performance of “Into the Unknown” at the 2020 Oscars featured nine international dubbing actresses who voice Elsa in other languages.

The Advantages of AI Dubbing

As with most applications of AI, this technology is often framed as a tool for efficiency, used to streamline the dubbing process. Major studios such as Netflix and Disney have begun experimenting with AI voice technology in select titles. In 2025, Amazon Prime Video launched pilot tests of AI dubbing for titles in its catalog that had previously lacked dubbed versions. Notably, this is not Amazon’s first venture into AI dubbing, but we will explore that shortly.

Importantly, the benefits of AI dubbing extend beyond large studios. Independent films, often constrained by limited budgets, are also leveraging AI to expand their reach. Watch the Skies, a Swedish film, became the first feature film to be entirely dubbed using AI technology. This achievement ultimately resulted in a U.S. distribution deal with AMC Theatres. The film was developed in partnership with Flawless AI, one of the leading companies in the field of AI-assisted dubbing.

Another significant advancement is voice replication technology, which allows for the recreation of voices and has prompted a complex ethical debate surrounding identity protection and digital safety. On one hand, the prospect of reviving the voices of deceased artists is both exciting and controversial. In 2024, ElevenLabs incorporated the voices of Judy Garland and James Dean into its voice library, enabling these iconic voices to narrate books, articles, and other digital content. On the other hand, the boundary between tribute and exploitation is often blurred. Earlier this year, Netflix's American Murder: Gabby Petito used AI to recreate Gabby's voice, a move that generated significant criticism for being, at best, ethically questionable.

Trailer for Watch the Skies, the first feature film to be entirely dubbed using AI technology.

The Drawbacks of AI Dubbing

Despite its promise, AI dubbing raises a host of concerns. While efficient, AI often struggles to preserve the cultural and linguistic nuances that define a story’s essence. AI-generated voices frequently lack the emotional depth and contextual sensitivity necessary to authentically represent the original work. Language, after all, is not simply a collection of words; it is a vehicle for identity. Regional expressions, idioms, and cultural codes are easily lost in the automated process.

One significant critique is that AI dubbing often results in the flattening of vocal performances. This can lead to sterile interpretations that distort emotional delivery or misrepresent characters altogether. Moreover, the standardization of speech patterns through AI could contribute to the homogenization of accents and dialects. While this may speed up production, it risks eroding linguistic diversity and perpetuating linguistic hierarchies, privileging certain dialects while marginalizing others.

Additionally, there is a growing concern over the human cost of AI adoption. Voice actors have raised alarms, asserting that AI threatens both job opportunities and the artistry of voice performance. Labor unions argue that the unchecked use of AI undermines the value of creative labor and exacerbates precarious working conditions within the industry.

The Industry’s Response: Regulation, Not Banishment

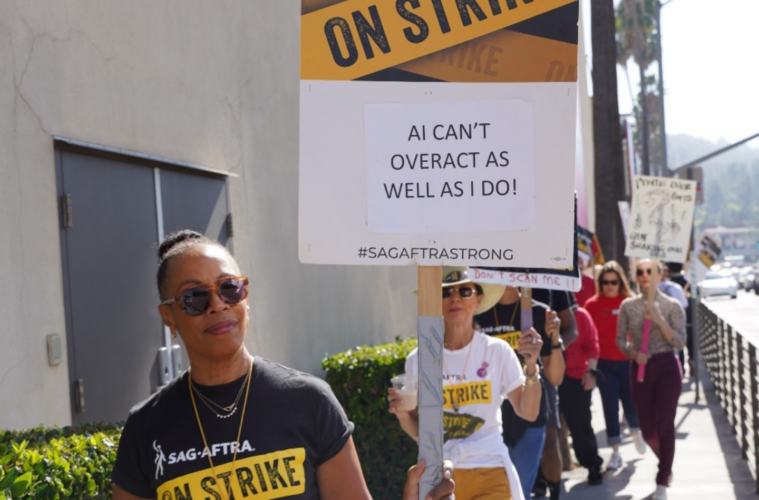

As with most AI-related issues, the stance of artists and workers is not to ban AI, but to regulate its use. During the 2023 SAG-AFTRA strike, one of the core demands was the right for voice actors to provide consent, or withhold it, before their voices are cloned. In July 2024, Hollywood faced another strike, this time from video game performers, who are also calling for protections regarding AI in their contracts.

This call for regulation is not confined to the U.S. In Latin America, labor rights groups are increasingly vocal about AI’s impact on the industry. In Mexico, the Asociación Nacional de Actores (ANDA) proposed a new legislative initiative aimed at safeguarding voice actors from the misuse of AI technology. Similarly, in Brazil, United Voice Artists introduced a bill to protect the country’s dubbing industry, including proposals to integrate the Dublagem Viva movement into the Ministry of Culture’s audiovisual council. In Spain, PASAVE has sought to incorporate clauses into contracts to prevent the use of actors’ voices for AI training without consent.

Figure 2. 2023 SAG-AFTRA Strike

Meanwhile, European and Asian regulators are also beginning to take clear stances. The EU AI Act, which entered into force in 2024 with obligations phasing in from 2025 onward, does not classify generative AI tools as "high-risk" technologies but does subject them to transparency requirements. This means audiovisual works using AI-generated content, such as dubbed voices, are expected to carry clear disclosures. Similarly, France's National Centre for Cinema (CNC) is offering funding only to productions that commit to using human voices, thereby protecting cultural authenticity and voice actors’ roles.

In Asia, regulatory responses are even more explicit. China's Cyberspace Administration is enforcing a 2025 policy requiring all AI-generated content, including dubbed voices, to be clearly labeled both on-screen and in digital metadata. This is part of a broader campaign against synthetic misinformation. Meanwhile, the Japan Actors Union has issued a formal statement opposing AI dubbing in anime and film, emphasizing the cultural value and emotional depth of human voice work, particularly vital in Japan’s globally recognized dubbing industry.

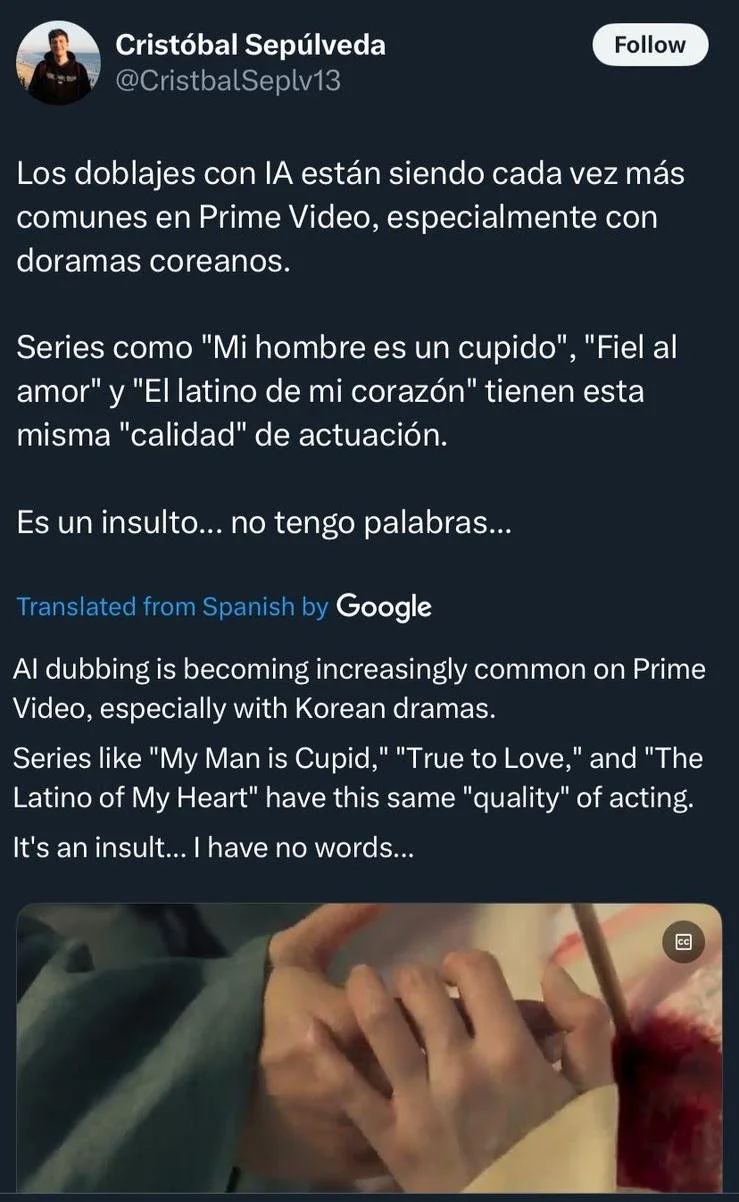

Public Reception: A Case Study

In May 2024, Amazon Prime Video found itself at the center of a heated public debate across Spanish-speaking social media platforms. Viewers shared clips from Korean dramas, such as My Man is Cupid, The Beat of My Heart, and True to Love, in which the Spanish-dubbed versions were criticized for sounding flat, robotic, and devoid of emotional depth. The absence of voice actor credits in these episodes fueled suspicions that AI was being used for dubbing. Users accused Prime Video of showing little regard for its audience. Shortly thereafter, the dubbed versions were quietly removed, leaving only the subtitled versions available, with no official statement from the company.

Sectoral Differences: Film, Television, and Music

It is essential to note the various approaches to AI integration across the film, television, and music industries. In film and television, AI is often used to enhance or replicate performances, for example by perfecting an actor’s accent or recreating voices for dubbing purposes. In contrast, the music industry has applied AI to restore or remix voices, often with the aim of preserving or reimagining classic interpretations.

Despite their differing needs and concerns, both sectors are grappling with a central issue: voice cloning. Voice actors have increasingly called for regulation, not only within the entertainment industry but also on social media platforms like TikTok, where their voices have been used without consent to promote products or services. These clips often appear as though the actor officially endorsed them, despite never having lent their voice.

“NostalgIA,” an AI-generated song by FlowGPT that mimics the voices of Bad Bunny and Bad Gyal.

In the music world, similar challenges have emerged. Artists have found their voices repurposed by fans or creators using AI to generate covers in different genres or languages. While some may see this as harmless fan engagement, unauthorized use can be damaging. For example, in 2023, the Puerto Rican singer Bad Bunny faced controversy when a TikTok user posted a song snippet using an AI-generated version of his voice. The audio went viral, with many believing it was a leaked track from his upcoming album. Bad Bunny later clarified that he had no involvement with the clip, highlighting the confusion and potential harm caused by AI-generated content.

“This Is Not Morgan Freeman – A Deepfake Singularity,” a video created by Bob de Jong, featuring a hyper-realistic deepfake of actor Morgan Freeman.

Finding a Balance

This issue is not a conflict between humans and machines; rather, it is a call for balance.

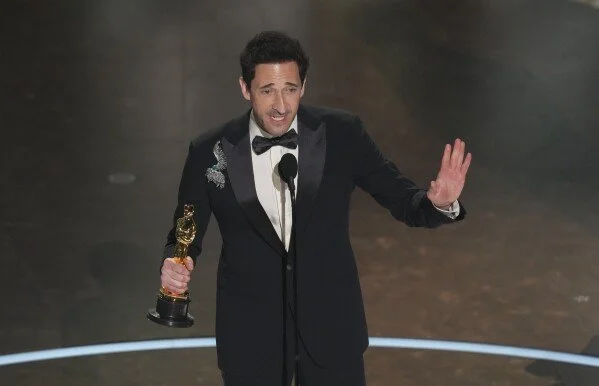

The 2023 SAG-AFTRA strike marked a significant step toward regulating AI in the industry, but as technology continues to evolve, so too must the laws and protections that govern it. Entertainment is not rejecting AI outright. In fact, at the 2025 Grammy Awards, Now and Then by The Beatles, a song that used AI to restore John Lennon’s vocals, won Best Rock Performance. Oscar-winning films such as The Brutalist have used AI to refine actors' accents, and Emilia Pérez employed the technology to enhance the vocal performance of the lead actress.

Thus, the industry is not fearful of AI; rather, it is demanding that the contributions of human artists be respected. The ongoing debate surrounding AI dubbing underscores a critical point: the challenge lies not only in improving efficiency, but also in ensuring the preservation of cultural integrity. When used responsibly, AI can complement human creativity rather than replace it.

Now and Then - The Beatles’ Grammy-winning track created using AI technology to complete John Lennon’s original demo, blending innovation with musical legacy.

The Screen Actors Guild-American Federation of Television and Radio Artists (SAG-AFTRA) has proactively negotiated agreements that set precedents for ethical AI voice usage:

Consent and Compensation: Actors must provide explicit consent for the creation and use of their digital voice replicas. They are entitled to compensation equivalent to what they would earn for live performances, including residuals for subsequent uses.

Specific Usage Agreements: Any use of a digital replica beyond the original project requires a separate agreement detailing the intended use, ensuring transparency and control for the performer.

Protection of Likeness: The agreements stipulate that the terms "artist," "singer," and "royalty artist" apply solely to humans, safeguarding against the replacement of human performers with AI-generated counterparts.

Figure 5. Adrien Brody winning the Oscar for Best Actor for The Brutalist at the 2025 Academy Awards, a film created with the help of AI technologies.

These measures demonstrate a structured approach to integrating AI in the entertainment industry, balancing technological advancement with the rights and recognition of human artists.

Ultimately, as we move toward an increasingly globalized storytelling environment, it is vital that stories are told with care, authenticity, and respect for the cultures they represent. AI should not be seen as the adversary of storytelling; rather, ignoring its impact may be. The future of dubbing will not be shaped solely by technological advancements, but by the conversations we have regarding its ethical implications. In the end, it is not only about the sounds we hear; it is about whose voices we choose to protect.

As dubbing becomes increasingly automated, the way in which stories are told will change, but more importantly, we will be redefining who gets to tell those stories. The challenge we face is not merely technological; it is deeply ethical, and it requires thoughtful consideration to navigate.

-

ChatGPT (OpenAI, 2024) was used to help develop this article.

—

Header Image: “AI Dubbing Optimizes Several Key Aspects of the Video Production Process.” Everything You Need to Know About AI Dubbing Technology. Accessed March 2, 2025. https://everything-you-need-to-know-about-ai-dubbing-technology.vercel.app

—

Amazon Staff. “Prime Video Tests AI-Powered Dubbing in English, Spanish.” About Amazon, March 5, 2025. https://www.aboutamazon.com/news/entertainment/prime-video-ai-dubbing-english-spanish.

Asociación Nacional de Actores (ANDA). “Iniciativa con Proyecto de Decreto que protege los derechos de los Actores de Doblaje (Voz), ante la IA.” La Voz del Actor. Accessed April 3, 2025. https://laanda.org.mx/la-voz-del-actor/iniciativa-con-proyecto-de-decreto-que-protege-los-derechos-de-los-actores-de-doblaje-voz/

Associated Press. “Video Game Voice and Motion Actors Announce Second Strike over AI Concerns.” CBS News, July 26, 2024. https://www.cbsnews.com/news/video-game-actors-strike-ai-sag-actors-union/

Bernabó, L. (2025). How, when, and why to use AI: Strategic uses of professional perceptions and industry lore in the dubbing industry. International Journal of Communication, 19, 698–715.

Calvo-Ferrer, José Ramón. 2023. "Can You Tell the Difference? A Study of Human vs Machine-Translated Subtitles." Perspectives. https://doi.org/10.1080/0907676X.2023.2268149

Centre national du cinéma et de l’image animée (CNC). “Aide aux cinémas du monde.” Accessed May 23, 2025. https://www.cnc.fr/web/en/funds/aide-aux-cinemas-du-monde_190870

Chan, Wilfred. “The AI Startup Erasing Call Center Worker Accents: Is It Fighting Bias – or Perpetuating It?” The Guardian, August 24, 2022. https://www.theguardian.com/technology/2022/aug/23/voice-accent-technology-call-center-white-american

Desrochers, Jacob. "Artificial Intelligence and Its Ethical Implications for Marketing." University of New Hampshire Scholars’ Repository, 2023. https://scholars.unh.edu/cgi/viewcontent.cgi?article=1864&context=honors.

Digital Regulation. 2023. "AI and Media: Regulatory Challenges and Ethical Considerations." https://digitalregulation.org/3004297-2/

Dublagem Viva. “Manifesto pela Regulamentação da IA.” Dublagem Viva. Accessed April 3, 2025. https://dublagemviva.com.br/index.php/manifesto/.

Espinal, Carla. “Bad Bunny Slams AI Soundalike Viral TikTok: ‘If You Like That Song So Much, Get Out of This Group.’” Billboard, November 7, 2023. https://www.billboard.com/music/latin/bad-bunny-slams-ai-soundalike-viral-tiktok-1235466825/

European Parliament. EU AI Act: First Regulation on Artificial Intelligence. Updated March 13, 2024. https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

Gibson, Kate. “AI Company Lets Dead Celebrities Read to You. Hear What It Sounds Like.” CBS News, July 5, 2024. https://www.cbsnews.com/news/ai-voices-dead-celebrities-judy-garland-james-dean-burt-reynolds/

Krishnan, Gaurav. “Film Funding of the Future: What the Global Film Industry Can Learn from France’s CNC.” The Film Corner Writer (Medium), December 31, 2024. https://medium.com/the-film-corner-writer/film-funding-of-the-future-what-the-global-film-industry-can-learn-from-frances-cnc-c4319885df0c

Low, Samantha. “Voice Actors From Japan Insist on Tighter AI Regulation.” Tokyo Weekender, October 25, 2024. https://www.tokyoweekender.com/entertainment/tech-trends/voice-actors-from-japan-insist-on-tighter-ai-regulation/

Master, Farah, and Beijing Newsroom. “Chinese Regulators Issue Requirements for the Labeling of AI-Generated Content.” Reuters, March 14, 2025. https://www.reuters.com/world/asia-pacific/chinese-regulators-issue-requirements-labeling-ai-generated-content-2025-03-14/

Miller, Lauren. 2023. "The Future of Non-English Storytelling Through Linguistic Diversity: A Case Study of Netflix." Arts Management and Technology Laboratory (AMT Lab), January 2023. https://amt-lab.org/blog/2023/1/the-future-of-non-english-storytelling-through-linguistic-diversity-a-case-study-of-netflix

NBC News. “Gabby Petito Netflix Docuseries Faces Backlash for AI Recreation.” March 1, 2025. https://www.nbcnews.com/tech/gabby-petito-netflix-docuseries-ai-recreatiion-backlash-rcna193185

PASAVE. “Plataforma de Asociaciones y Sindicatos de Artistas de Voz de España.” Accessed April 3, 2025. https://pasave.org/

Sánchez-Mompeán, S. (2021). Netflix likes it dubbed: Taking on the challenge of dubbing into English. Language & Communication, 80(1), 180–190. https://doi.org/10.1016/j.langcom.2021.07.001

SAG-AFTRA. “Artificial Intelligence.” Accessed May 24, 2025. https://www.sagaftra.org/contracts-industry-resources/member-resources/artificial-intelligence

Variety. “Watch the Skies to Get U.S. Theatrical Release with AI Dubbing.” March 10, 2025. https://variety.com/2025/film/news/watch-the-skies-us-theatrical-release-ai-dubbing-1236343110/

Vary, Adam B. “Voice Actors Decry AI at Comic-Con Panel With SAG-AFTRA’s Duncan Crabtree-Ireland: ‘We’ve Lost Control Over What Our Voice Could Say.’” Variety, July 22, 2023. https://variety.com/2023/tv/news/voice-actors-ai-sag-aftra-strike-comic-con-1235677541/

Yang, Angela. “Morgan Freeman Calls Out TikTok Video That Used AI Replication of His Voice.” NBC News, July 1, 2024. https://www.nbcnews.com/pop-culture/pop-culture-news/morgan-freeman-calls-tiktok-video-used-ai-replication-voice-rcna159674