Written by Kate Maffey

In the current digital economy, privacy is elusive. In fact, much of the Internet as we know it is made up of services and practices that use data as a form of payment, without making that transaction clear. This article explores how individuals and organizations in arts enterprises can maintain better privacy and data protection for themselves and their clients using existing technology and techniques. It begins with a brief background on the state of digital privacy, and then provides an overview of existing techniques and technologies that could be applied within the arts.

The State of Digital Privacy

Due to the structure of the digital economy, numerous stakeholders over the past two decades have advocated for better regulation. In response, the European Union adopted a new privacy regulation called the General Data Protection Regulation (GDPR), which took effect in 2018. The regulation includes stipulations on how data should be stored, how consent can be obtained from consumers, and how organizations must comply with consumer requests to delete their data.

While GDPR and other privacy regulations like the California Consumer Privacy Act attempt to improve the privacy landscape, emerging technologies have shifted it yet again. The Internet of Things (IoT) – devices of all sorts that are connected to the internet – has expanded rapidly, increasing the amount of data that is collected without much oversight. An example of this is a smart home thermostat that ostensibly collects and sends data to the company about heat consumption, but in reality also tracks other things like electricity usage or times when the user is not home.

The Internet of Things is not the only change, however; the use of artificial intelligence-enabled services has also increased. AI-enabled social media platforms like TikTok, Instagram, Facebook, and Twitter all require users to agree to their terms and conditions, many of which have onerous data collection and usage stipulations. Many Internet users express a desire for privacy while still using services and devices that have opaque privacy and data collection policies, thereby acting inconsistently with their stated privacy preferences.

Figure 1: Image of man looking through binoculars with Facebook logo lenses. Source: Glen Carrie on Unsplash.

All of this is important for arts organizations, especially as it becomes harder to reach audiences through non-digital means. Two studies conducted by Lutie Rodriguez of the Arts Management & Technology Lab underline the challenges that arts organizations face in the privacy space. She reports that while 90% of nonprofits are collecting data, 49% of surveyed nonprofit professionals were unable to articulate how it was collected. Additionally, she found that in a subset of 100 arts organizations, 55 had no privacy policy on their website. These data points suggest that merely raising awareness at arts and nonprofit organizations would improve the way they implement privacy tools and techniques.

Tools and Techniques for Digital Privacy

While there are challenges to implementing privacy in today’s digital infrastructure, there are numerous existing tools and techniques that arts organizations can leverage. Some require resources, but many merely require an understanding of privacy techniques and some forward thinking. In this next section, we dig into several areas through which arts enterprises can implement digital privacy: organizationally, through art and artists, and by knowing what techniques to avoid.

Organizational Tools and Techniques

Some arts organizations are already trying to offer their customers more privacy-conscious tools. One example is the Lebanon Public Library System, which has started to offer a Virtual Private Network (VPN) service that allows patrons to shield their web usage. This technique requires resources, but it ensures that library patrons can browse the internet and conduct important personal business, such as logging into a bank account, without worrying that their data will be compromised.

Another example of privacy-conscious practices in the arts is a privacy-preserving machine learning framework for video analysis called ARTYCUL. This framework allows museums to use their CCTV cameras to inform them about the preferences of their visitors based on foot traffic. The most important aspect of this framework is that it does not collect information on visitor identity in any way, unlike many other products in the security market that use facial recognition.

In the same vein as ARTYCUL, there are a number of other ways that arts organizations can modify existing practices to be more privacy-conscious. For instance, many nonprofit organizations use data mining to assist in marketing or funding. Instead of following industry practices of collecting all existing data, organizations could instead use privacy-preserving machine learning techniques that protect the identities of users while still offering important insights.
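To make the idea concrete, here is a minimal sketch of one privacy-preserving technique, differential privacy via the Laplace mechanism. The article does not name a specific method, so this choice is an assumption made for illustration; the function names, the age bands, and the ticket-buyer data are all hypothetical. The sketch publishes per-group counts with random noise added, so that a marketing report still shows the overall pattern while no single person's presence can be confidently inferred from it.

```python
import random
from collections import Counter


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with the same
    rate is Laplace-distributed, so no third-party library is needed.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_counts(records, all_categories, epsilon: float, seed=None):
    """Count records per category and add Laplace noise to each count.

    `all_categories` is fixed in advance so that empty groups are reported
    too; records whose category is not in that list are ignored.
    Each person falls into one category, so adding or removing one person
    changes a single count by 1. The noise scale is therefore 1 / epsilon.
    A smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = random.Random(seed)
    true_counts = Counter(records)
    return {
        category: true_counts.get(category, 0) + laplace_noise(1.0 / epsilon, rng)
        for category in all_categories
    }


# Hypothetical data: ticket buyers grouped by a coarse age band.
age_bands = ["18-29"] * 40 + ["30-49"] * 75 + ["50+"] * 60
report = noisy_counts(age_bands, ["18-29", "30-49", "50+"], epsilon=1.0, seed=7)
for band, value in report.items():
    print(f"{band}: about {value:.0f} ticket buyers")
```

The value of `epsilon` is a policy decision rather than a technical one: an organization would need to weigh how accurate its reports must be against how much protection it wants to give the people in the data.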

Privacy-preserving techniques also exist for location-based data, which many museums use to assist with digital enhancements to physical exhibits. Without adding extra infrastructure or requirements, museums can use altered machine learning techniques that anonymize and aggregate location data to protect user privacy.
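As a rough illustration of what anonymizing and aggregating location data can mean in practice, the sketch below uses plain grid aggregation with small-count suppression rather than a machine learning model. It is not taken from the cited research; the function name, the 5-meter cell size, the minimum group size of 3, and the sample readings are all assumptions chosen for the example.

```python
from collections import Counter


def aggregate_positions(positions, cell_size_m: float = 5.0, min_visitors: int = 3):
    """Reduce raw (visitor_id, x, y) readings to per-cell visitor counts.

    - Coordinates are snapped to a coarse grid, so exact positions are lost.
    - Each visitor is counted at most once per grid cell.
    - Visitor identifiers are discarded once duplicates are removed.
    - Cells with fewer than `min_visitors` people are suppressed, because a
      very small count can point to an individual.
    """
    seen = set()
    for visitor_id, x, y in positions:
        cell = (int(x // cell_size_m), int(y // cell_size_m))
        seen.add((visitor_id, cell))
    counts = Counter(cell for _, cell in seen)
    return {cell: n for cell, n in counts.items() if n >= min_visitors}


# Hypothetical readings: (device identifier, x in meters, y in meters).
readings = [
    ("a", 1.0, 2.0),
    ("a", 1.5, 2.2),   # same visitor, same cell: counted once
    ("b", 3.0, 4.0),
    ("c", 4.9, 0.1),
    ("d", 12.0, 7.0),
    ("e", 13.0, 8.5),  # only two visitors in this cell: suppressed
]
print(aggregate_positions(readings))
```

Only the aggregated counts would need to be stored; the raw readings and identifiers can be dropped as soon as the aggregation has run.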

A great example of this technique is a mobile application that was developed to track the spread of COVID-19. Health professionals and developers came up with an application that complied with requirements for handling sensitive health data and used location-based data for epidemiological purposes without compromising user privacy. This application is a clear illustration of the power of privacy-preserving techniques that arts organizations could implement as a part of their digital infrastructure.

A final technique for arts organizations to ensure user privacy is to add or validate usable opt-out features for their website or online presence. Historically, many websites have made it opaque and challenging, if not impossible, for users to understand what data was being collected and to opt out if desired. Arts organizations can easily add this infrastructure without much overhead. Other research suggests that notifications for interactive exhibits at museums can feel invasive if timed poorly, meaning that arts organizations should consider, from a privacy perspective, how features of their websites and mobile applications will affect users before implementing them.
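The following sketch shows one way a website backend might honor an opt-out before recording any analytics. It is an illustration under stated assumptions, not a description of any particular product: the function names and the `analytics_opt_out` cookie are hypothetical, while `Sec-GPC` (Global Privacy Control) and `DNT` (Do Not Track) are real request headers that some browsers send with the value `1`.

```python
def tracking_allowed(headers: dict, cookies: dict) -> bool:
    """Return True only if the visitor has not signaled an opt-out."""
    # Header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    if normalized.get("sec-gpc") == "1":  # Global Privacy Control signal
        return False
    if normalized.get("dnt") == "1":  # older Do Not Track signal
        return False
    # Hypothetical cookie set by the site's own opt-out button.
    if cookies.get("analytics_opt_out") == "true":
        return False
    return True


def record_page_view(path: str, headers: dict, cookies: dict, log: list) -> bool:
    """Append a page view to `log` unless the visitor opted out."""
    if not tracking_allowed(headers, cookies):
        return False
    # Store only the page path; no IP address or user identifier is kept.
    log.append({"path": path})
    return True


# Usage with hypothetical requests.
events = []
record_page_view("/exhibits", {"User-Agent": "demo"}, {}, events)
record_page_view("/tickets", {"Sec-GPC": "1"}, {}, events)
record_page_view("/donate", {}, {"analytics_opt_out": "true"}, events)
print(events)
```

Checking the opt-out in one place, before anything is written, makes it easier to verify that the feature actually works, which is the "validate" half of the recommendation above.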

Engagement with Digital Privacy through Art

While it is important to address privacy at an organizational level, arts enterprises also have a unique ability to educate and inspire their audiences. Many artists are rising to that challenge in the digital privacy space by engaging with the topic in their art. Examples of this include engagement with facial recognition and some of its nefarious uses, especially for populations that are already marginalized.

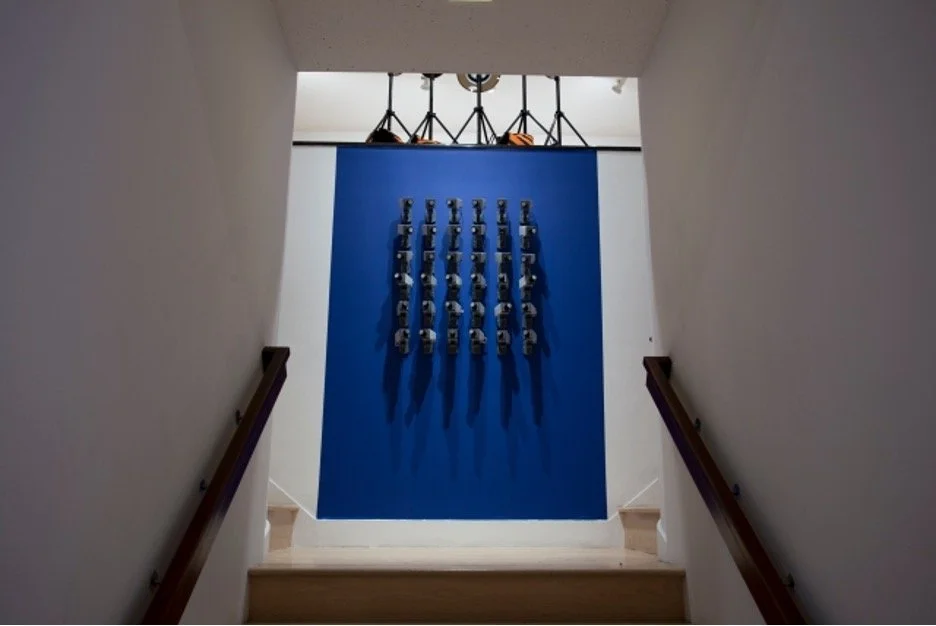

Another example is an art exhibit entitled “Think Privacy,” in which the artist directly confronts the ways that social media extracts user data. It features brightly colored posters with provocative quotes that warn audiences to think about and work on improving their privacy, such as “Meta Data Kills” and “Users Make, Brands Take”. A final example of artists engaging directly with digital privacy is at the Museum of Contemporary Photography, where an exhibit called “In Real Life” examined “the most polarized forms of imagery” today: surveillance cameras and facial recognition.

Figure 2: “A bank of security cameras by Leo Selvaggio greets visitors on their way to the second floor of In Real Life. Installation view, URME Surveillance, 2014-ongoing.” Source: Freethink.

Techniques and Patterns to Avoid

Finally, arts organizations can leverage existing techniques and tools by learning from the past mistakes of other industries and organizations. This means avoiding harmful digital patterns and privacy pitfalls, such as the dark patterns in applications like Tinder or programs like Adobe Acrobat. It also means ensuring compliance with data regulations and with common-sense privacy practices, thereby avoiding situations such as the selfie-matching tool in Google’s Arts & Culture application being blocked in Illinois and Texas because of those states’ privacy laws.

Conclusion

While digital privacy at a large scale seems daunting, there are numerous ways that arts organizations can elevate their privacy profile without significant overhead or infrastructural change. This article has offered a broad look at some of the existing techniques and tools that arts organizations could leverage, and underlines the importance of raising privacy awareness.

Resources

Abbas, Roba, Katina Michael, and M. G. Michael. 2015. “Location-Based Privacy, Protection, Safety, and Security.” In Privacy in a Digital, Networked World: Technologies, Implications and Solutions, edited by Sherali Zeadally and Mohamad Badra, 391–414. Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-08470-1_16.

Ardagna, Claudio A., Marco Cremonini, Ernesto Damiani, Sabrina De Capitani di Vimercati, and Pierangela Samarati. 2007. “Privacy-Enhanced Location Services Information.” In Digital Privacy. Auerbach Publications.

Beja, Ido, Joel Lanir, and Tsvi Kuflik. 2015. “Examining Factors Influencing the Disruptiveness of Notifications in a Mobile Museum Context.” Human–Computer Interaction 30 (5): 433–72. https://doi.org/10.1080/07370024.2015.1005093.

Dimick, Mikayla. 2020. “The Environment Surrounding Facial Recognition: Do the Benefits Outweigh Security Risks?” AMT Lab @ CMU. August 17, 2020. https://amt-lab.org/blog/2020/8/the-environment-surrounding-facial-recognition-do-the-benefits-outweigh-security-risks.

Harvey, Adam. n.d. “Think Privacy.” 21st Century Digital Art. Accessed April 4, 2022. http://www.digiart21.org/art/think-privacy.

Landau, Susan. 2021. “Digital Exposure Tools: Design for Privacy, Efficacy, and Equity.” Science (American Association for the Advancement of Science) 373 (6560): 1202–4. https://doi.org/10.1126/science.abi9852.

Leon, Pedro, Blase Ur, Richard Shay, Yang Wang, Rebecca Balebako, and Lorrie Cranor. 2012. “Why Johnny Can’t Opt out: A Usability Evaluation of Tools to Limit Online Behavioral Advertising.” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 589–98. Austin Texas USA: ACM. https://doi.org/10.1145/2207676.2207759.

Leverett, Eireann. 2018. “The Internet of Things: The Risks and Impacts of Ubiquitous Computing.” In Partners for Preservation, 81–100. https://doi.org/10.29085/9781783303496.009.

Mahmoodi, Čurdová, J., Henking, C., Kunz, M., Matić, K., Mohr, P., & Vovko, M. 2018. “Internet Users’ Valuation of Enhanced Data Protection on Social Media: Which Aspects of Privacy Are Worth the Most?” Frontiers in Psychology 9: 1516. https://doi.org/10.3389/fpsyg.2018.01516.

McAndrew, Chuck. 2020. “LibraryVPN:” Information Technology and Libraries 39 (2). https://doi.org/10.6017/ital.v39i2.12391.

Nguyen, Stephanie, and Jasmine McNealy. n.d. “I, Obscura — Illuminating Deceptive Design Patterns in the Wild.” Stanford’s Digital Civil Society Lab & UCLA’s Center for Critical Internet Inquiry. https://pacscenter.stanford.edu/wp-content/uploads/2021/07/I-Obscura-Zine.pdf.

Nicas, Jack. 2018. “Why Google Won’t Search for Art Look-Alikes in Some States; Popular Selfie Tool in Art & Culture App Is Blocked in Illinois and Texas Because of Privacy Laws.” WSJ Pro. Cyber Security, January, n/a.

Nkonde, Mutale. 2019. “Automated Anti-Blackness: Facial Recognition in Brooklyn, New York.” Harvard Kennedy School Journal of African American Policy, 7.

Pester, Nick, and Nick Orringe. 2018. “Right to Privacy.” Property Journal, June, 52–53.

Preibusch, Sören. 2015. “How to Explore Consumers’ Privacy Choices with Behavioral Economics.” In Privacy in a Digital, Networked World: Technologies, Implications and Solutions, edited by Sherali Zeadally and Mohamad Badra, 313–41. Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-08470-1_14.

Rodriguez, Lutie. 2020a. “What Arts Nonprofits Should Know About Data Privacy and Security.” AMT Lab @ CMU. April 14, 2020. https://amt-lab.org/blog/2020/4/what-arts-nonprofits-should-know-about-data-privacy-and-security.

Rodriguez, Lutie. 2020b. “Why More Arts Organizations Need Privacy Policies.” AMT Lab @ CMU. May 28, 2020. https://amt-lab.org/blog/2020/5/why-more-arts-organizations-need-privacy-policies.

Sinha, Smita. 2018. “How Google’s Arts & Culture App Uses AI, Facial Recognition to Find Your Museum Doppelgänger.” Analytics India Magazine. February 19, 2018. https://analyticsindiamag.com/googles-arts-culture-app-uses-ai-facial-recognition-find-museum-doppelganger/.

Su, Chunhua, Jianying Zhou, Feng Bao, Guilin Wang, and Kouichi Sakurai. 2007. “Privacy-Preservation Techniques in Data Mining.” In Digital Privacy. Auerbach Publications.

Tanwar, Gatha, Ritu Chauhan, and Eiad Yafi. 2021. “ARTYCUL: A Privacy-Preserving ML-Driven Framework to Determine the Popularity of a Cultural Exhibit on Display.” Sensors 21 (4): 1527. https://doi.org/10.3390/s21041527.

Zarley, B. David. n.d. “High-Tech Art Exhibit Looks at Life through the Eyes of AI.” Freethink (blog). Accessed April 4, 2022. https://www.freethink.com/technology/facial-recognition-exhibition.