Introduction

Artificial intelligence (AI) is only as intelligent as the data it was trained on. Many visual GenAI tools were trained on content scraped from the internet. While lawsuits over compensation and recognition for the copyright holders of that material are ongoing and still emerging, some companies are creating adversarial systems to protect visual artists' material. The following review focuses on one tool in development, Nightshade, and two existing tools: Aspose and Glaze.

Ben Zhao, a computer science professor at the University of Chicago, created Nightshade, a tool to help defend artists against copyright infringement by GenAI companies that are scanning their existing artwork. The name is deliberate: nightshade is a poisonous plant that was historically used to poison kings and emperors. Zhao’s digital Nightshade offers artists a way to reclaim ownership of their work from the AI programs that use it.

Image: Nightshade Poisonous Flower

Image Source: Unsplash

How Does Nightshade Work?

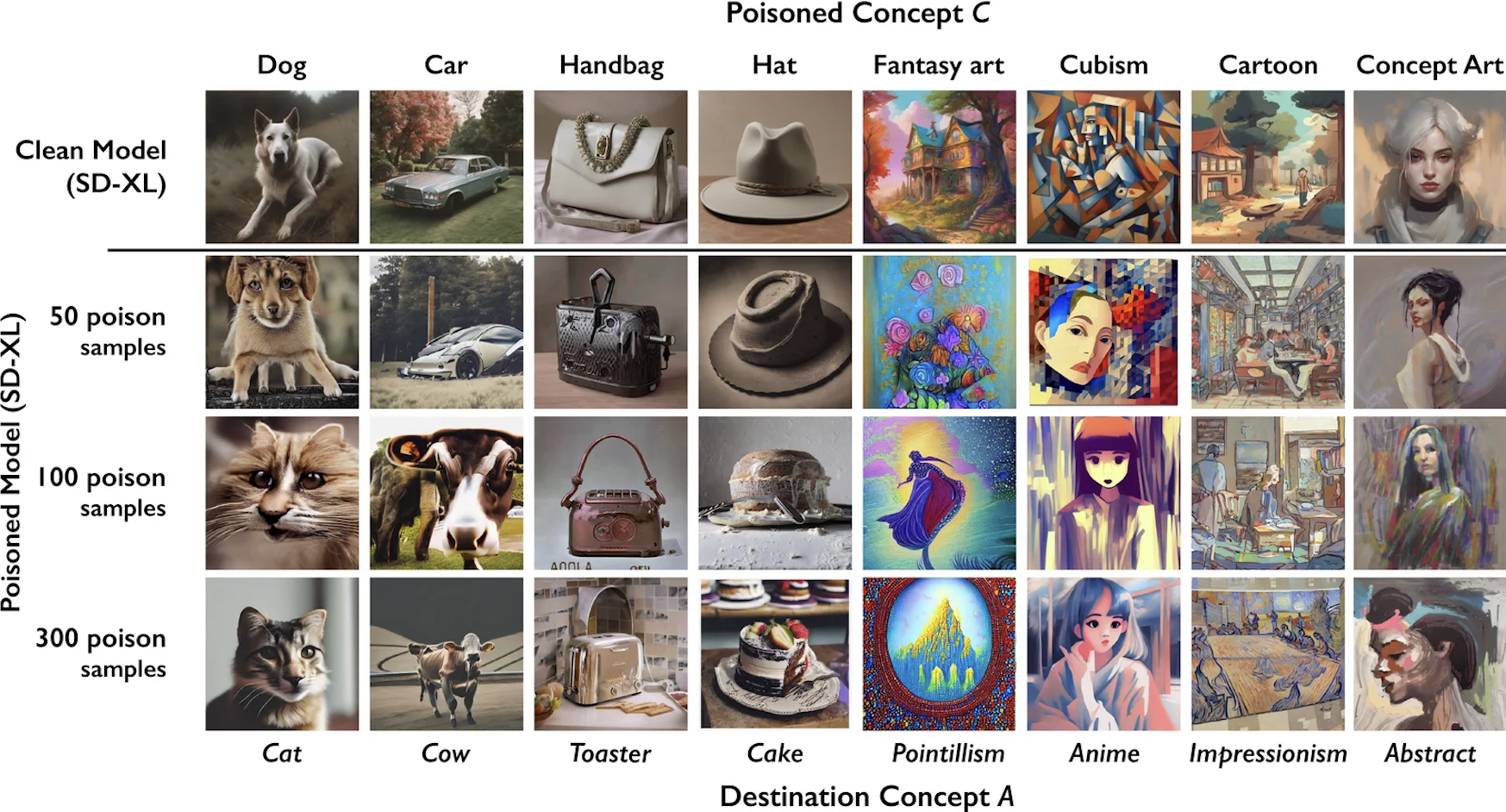

Nightshade is able to confuse the pairings of words used by AI art generators by creating a false match between images and text. Zhao explained, "So it will, for example, take an image of a dog, alter it in subtle ways, so that it still looks like a dog to you and I — except to the AI, it now looks like a cat.”

Image: A table of images comparing both Nightshade’s poisoned samples and standard GenAI results.

Image Source: NPR (Glaze and Nightshade team at University of Chicago)
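The idea of altering an image so that it still looks the same to a person but reads differently to a model can be illustrated with a toy example. The sketch below is not Nightshade's actual algorithm: it assumes a made-up linear feature extractor (the matrix W), random arrays standing in for a "dog" and a "cat" image, and a hypothetical poison function with an arbitrary per-pixel budget. It only shows the general shape of a targeted perturbation, where small bounded pixel changes move an image's features toward those of a different concept.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumption: a fixed random linear map stands in for a real model's
# image feature extractor (64 "pixels" in, 16 features out).
W = rng.normal(size=(16, 64))

def features(image):
    """Map a flattened toy image to its feature vector."""
    return W @ image

def poison(image, target_image, budget=0.05, steps=200, lr=0.005):
    """Shift `image` toward `target_image` in feature space while keeping
    every pixel within `budget` of its original value."""
    target = features(target_image)
    delta = np.zeros_like(image)
    for _ in range(steps):
        # Gradient of 0.5 * ||features(image + delta) - target||^2 w.r.t. delta.
        grad = W.T @ (features(image + delta) - target)
        # Gradient step, then clip so the change stays visually small.
        delta = np.clip(delta - lr * grad, -budget, budget)
    return image + delta

dog = rng.uniform(0.0, 1.0, size=64)  # toy "dog" image
cat = rng.uniform(0.0, 1.0, size=64)  # toy "cat" image
poisoned_dog = poison(dog, cat)

before = np.linalg.norm(features(dog) - features(cat))
after = np.linalg.norm(features(poisoned_dog) - features(cat))
print(f"max pixel change: {np.abs(poisoned_dog - dog).max():.3f}")
print(f"feature distance to 'cat': {before:.2f} -> {after:.2f}")
```

In this toy setup no pixel moves by more than the budget, yet the poisoned image sits closer to the "cat" features than the original did. A real system would work against a trained image encoder and a perceptual measure of visibility rather than a simple per-pixel cap.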

“I would like to bring about a world where AI has limits, AI has guardrails, AI has ethical boundaries that are enforced by tools.”

Does Anything Like This Already Exist?

Nightshade is only one of the tools Zhao's team has built to combat GenAI. Another, Glaze, subtly changes pixels in an artwork to confuse models, making it significantly more difficult to mimic a particular artist’s style. In addition, Kudurru, an application created by a separate team at Spawning.ai, “tracks scrapers' IP addresses and blocks them or sends back unwanted content, such as an extended middle finger, or the classic ‘Rickroll’ Internet trolling prank that spams unsuspecting users with the music video for British singer Rick Astley's 1980s pop hit, ‘Never Gonna Give You Up.’”

Image: “An illustration of a scraper being misdirected by Kudurru.”

Image Source: NPR (Kurt Paulsen/Kudurru)
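The block-or-misdirect behavior described above can be sketched in a few lines. This is not Kudurru's code: the blocklist, the is_blocked and choose_response functions, and the decoy file name are all hypothetical, and the addresses come from ranges reserved for documentation. It simply shows how a site might check a visitor's IP address against a list of known scrapers and decide what to send back.

```python
import ipaddress

# Hypothetical blocklist of scraper networks, using address ranges
# that are reserved for documentation and examples.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]

def is_blocked(client_ip: str) -> bool:
    """Return True if the client address falls inside any blocked network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in BLOCKED_NETWORKS)

def choose_response(client_ip: str, requested_image: str,
                    decoy_image: str = "decoy.png") -> str:
    """Serve the real image to ordinary visitors and a decoy to known scrapers."""
    return decoy_image if is_blocked(client_ip) else requested_image

print(choose_response("203.0.113.42", "artwork.png"))
print(choose_response("192.0.2.10", "artwork.png"))
```

The first call comes from a blocked range and receives the decoy, while the second receives the requested artwork. How a real service identifies scrapers in the first place is the harder problem, and it is not covered by this sketch.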

What’s The Catch?

Although a potentially valuable tool for artists in the long run, Nightshade can only defend artists against future AI art generators, meaning that current models like OpenAI’s DALL-E 2 and Stable Diffusion are unaffected. However, Nightshade could help prevent millions of artists from becoming victims of AI copyright infringement in the future. Harvard Business Review notes that generative AI has created a complex intellectual property issue:

“There are other, non-technological cases that could shape how the products of generative AI are treated. A case before the U.S. Supreme Court against the Andy Warhol Foundation — brought by photographer Lynn Goldsmith, who had licensed an image of the late musician, Prince— could refine U.S. copyright law on the issue of when a piece of art is sufficiently different from its source material to become unequivocally ‘transformative,’ and whether a court can consider the meaning of the derivative work when it evaluates that transformation. If the court finds that the Warhol piece is not a fair use, it could mean trouble for AI-generated works.”

Image: Law Columns with Generative AI Art

Image Source: Harvard Business Review (HBR Staff/Pexels)

What Is The Art Industry Saying?

A review in the MIT Technology Review referred to Nightshade as a “powerful deterrent” and quotes Eva Toorenent, an illustrator and artist who has used Glaze and hopes that Nightshade will change the current state of AI art generators and artist copyright laws: “It is going to make [AI companies] think twice, because they have the possibility of destroying their entire model by taking our work without our consent.” Another artist, Autumn Beverly, says, “I’m just really grateful that we have a tool that can help return the power back to the artists for their own work.” The article highlights that tools from Zhao’s team have given Beverly the confidence to post her work online again; she had removed her artwork from the internet after realizing it had been scraped without her consent.

Moving forward, it is imperative that artists do everything in their power to ensure copyright protection of their work. Nightshade and similar tools are emerging quickly to counter the AI systems that use that work without consent.

Comparing Current Emerging Watermarking Tools: Nightshade, Glaze, Aspose

Nightshade: (Taken directly from the paper “Prompt-Specific Poisoning Attacks on Text-to-Image Generative Models” written by Zhao’s team)

Nightshade uses multiple optimization techniques (including targeted adversarial perturbations) to generate stealthy and highly effective poison samples, with four observable benefits.

Nightshade poison samples are benign images shifted in the feature space. Thus a Nightshade sample for the prompt “castle” still looks like a castle to the human eye, but teaches the model to produce images of an old truck.

Nightshade samples produce stronger poisoning effects, enabling highly successful poisoning attacks with very few (e.g., 100) samples.

Nightshade samples produce poisoning effects that effectively “bleed-through” to related concepts, and thus cannot be circumvented by prompt replacement, e.g., Nightshade samples poisoning “fantasy art” also affect “dragon” and “Michael Whelan” (a well-known fantasy and SciFi artist).

We demonstrate that when multiple concepts are poisoned by Nightshade, the attacks remain successful when these concepts appear in a single prompt, and actually stack with cumulative effect. Furthermore, when many Nightshade attacks target different prompts on a single model (e.g., 250 attacks on SDXL), general features in the model become corrupted, and the model’s image generation function collapses. We note that Nightshade also demonstrates strong transferability across models, and resists a range of defenses designed to deter current poisoning attacks.

Finally, we assert that Nightshade can provide a powerful tool for content owners to protect their intellectual property against model trainers that disregard or ignore copyright notices, do-not-scrape/crawl directives, and opt-out lists. Movie studios, book publishers, game producers and individual artists can use systems like Nightshade to provide a strong disincentive against unauthorized data training. We discuss potential benefits and implications of this usage model.

In short, our work provides four key contributions:

We propose prompt-specific poisoning attacks, and demonstrate they are realistic and effective on state-of-the-art diffusion models because of “sparsity” of training data.

We propose Nightshade attacks, optimized prompt-specific poisoning attacks that use guided perturbations to increase poison potency while avoiding visual detection.

We measure and quantify key properties of Nightshade attacks, including “bleed-through” to semantically similar prompts, multi-attack cumulative destabilizing effects, model transferability, and general resistance to traditional poison defenses.

We propose Nightshade as a tool to protect copyright and disincentivize unauthorized model training on protected content.

Glaze: (Taken directly from Glaze’s website ‘About’ and ‘FAQs’ sections)

Image specific: The cloaks needed to prevent AI from stealing the style are different for each image. Our cloaking tool, run locally on your computer, will "calculate" the cloak needed given the original image and the target style (e.g. Van Gogh) you specify.

Effective against different AI models: Once you add a cloak to an image, the same cloak can prevent different AI models (e.g., Midjourney, Stable Diffusion, etc.) from stealing the style of the cloaked image. This is the property known as transferability. While it is difficult to predict performance on new or proprietary models, we have tested and validated the performance of our protection against multiple AI models.

Robust against removal: These cloaks cannot be easily removed from the artwork (e.g., sharpening, blurring, denoising, downsampling, stripping of metadata, etc.).

Stronger cloak leads to stronger protection: We can control how much the cloak modifies the original artwork, from introducing completely imperceptible changes to making slightly more visible modifications. Larger modifications provide stronger protection against AI's ability to steal the style.

If you run a Mac, or an older PC, or a non-NVidia GPU, or an NVidia GTX 1660/1650/1550, or don't use a computer, then you should use WebGlaze. WebGlaze is, and will remain for the foreseeable future, invite-only.

Changes made by Glaze are more visible on art with flat colors and smooth backgrounds. The new update (Glaze 1.0) has made great strides along this front, but we will continue to look for ways to improve.

Unfortunately, Glaze is not a permanent solution against AI mimicry. Systems like Glaze face an inherent challenge of being future-proof (Radiya et al). It is always possible for techniques we use today to be overcome by a future algorithm, possibly rendering previously protected art vulnerable. Thus Glaze is not a panacea, but a necessary first step towards artist-centric protection tools to resist AI mimicry. Our hope is that Glaze and followup projects will provide protection to artists while longer term (legal, regulatory) efforts take hold.

Despite these risks, we designed Glaze to be as robust as possible, and have tested it extensively against known systems and countermeasures. We believe Glaze is the strongest tool for artists to protect against style mimicry, and we will continue to work to improve its robustness.

Aspose: (Taken directly from Aspose’s website ‘About us’ section)

Aspose offers ad-free file format apps to end users with a simple interface for easy usage. Apps will always remain free for light use.

We provide a huge collection of file format apps for viewing, editing, conversion, comparison, parsing, compressing, merging, splitting, watermarking, signing, annotating, searching, protecting and unlocking Microsoft Word documents, Excel spreadsheets, PowerPoint presentations, Outlook emails and archives, Visio diagrams, Project files, OneNote documents and Adobe Acrobat PDF documents.

We also offer apps for working with HTML, OCR, OMR, barcode generation and recognition, 2D & 3D imaging, GIS, ZIP, CAD, XPS, EPS, PS, SVG, TeX, PUB, Photoshop (PSD & PSB), finance (XBRL, iXBRL) and font (TTF) files.

Conclusion

As GenAI matures, new programs are being created to protect visual artists' work. If you are an individual artist, or even an art organization, it is important to learn both the pros and the cons of each potential tool to ensure the protection of your artwork in a world where artificial intelligence continues to thrive and adapt in its capabilities.

While watermarking priorities in public policy and company practice are meant to ensure that AI-created work is marked, the question of compensation for the material that AI was trained on has not been resolved. Until it is, these programs offer artists a measure of protection.

-

https://arxiv.org/pdf/2310.13828.pdf

https://en.wikipedia.org/wiki/Atropa_belladonna

https://mymodernmet.com/nightshade-ai-infection-tool/

https://www.technologyreview.com/2023/10/23/1082189/data-poisoning-artists-fight-generative-ai/

https://hbr.org/2023/04/generative-ai-has-an-intellectual-property-problem