Written by Katie Winter

What are AR and VR?

Augmented reality (AR) and virtual reality (VR) are technologies that add digital elements to, or entirely replace, what a viewer sees. AR adds digital elements to the physical world, while VR immerses the viewer in a virtual world. Common examples of AR are Snapchat filters and Pokémon Go. VR is commonly experienced through a headset, such as Google Cardboard or Oculus Rift, that presents a fully virtual realm. Both terms encompass a wide range of technologies, each with its own creation process, such as digital rendering, photography, and filmmaking. Notably, AR and VR technology has disrupted filmmaking and production, along with the entertainment industry as a whole.

How have AR and VR changed film?

VR technology specifically introduced the opportunity to create films that take place in virtual realities and are viewed through VR headsets. In fact, in 2016, the Cannes Film Festival became the first festival to screen VR short films and presentations in an area exclusively dedicated to VR (see figure 1).

Figure 1: A room of viewers watching a VR short film at the 2016 Cannes Film Festival. Source: Image courtesy of Makropol.

This technology provides a different type of viewing experience, one where viewers have more freedom in what they watch. However, not all filmmakers believe the technology provides the same level of storytelling or quality of film. In fact, director Steven Spielberg describes it as dangerous, explaining, “The only reason I say it is dangerous is because it gives the viewer a lot of latitude not to take direction from the storytellers but make their own choices of where to look.” It may be many years before moviegoers can routinely watch movies through VR headsets. However, this technology has inspired feats in film production that viewers are already witnessing, including StageCraft, commonly referred to as The Volume.

What is The Volume?

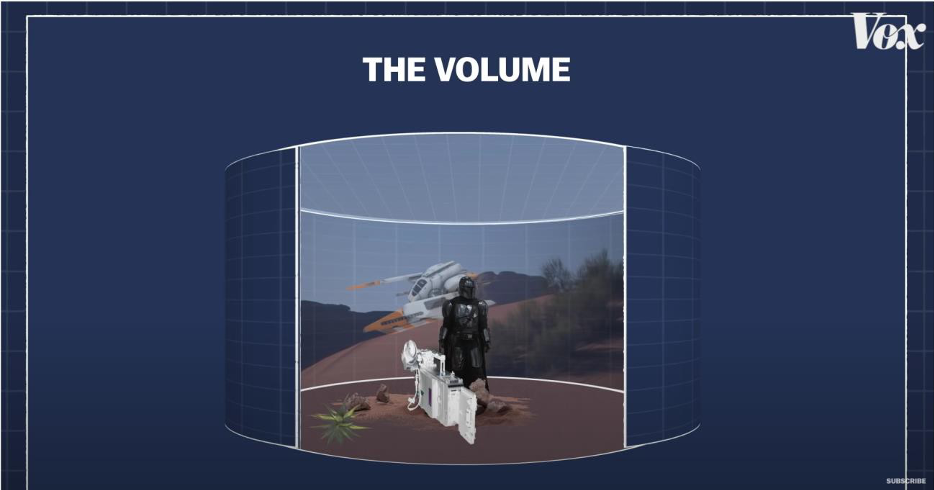

Formally called StageCraft, The Volume got its nickname from the filmmaking term “volume,” which refers to the space where motion capture and compositing take place on set. The Volume is a set of LED screens roughly 20 feet tall and 75 feet across. This technology immerses both the production crew and the cast in CGI environments that change and move in real time. The screens wrap 270 degrees around the stage, enclosing a large portion of the set (see figure 2).

Figure 2: A photograph capturing how The Volume encompasses a film set. Source: TechCrunch.

As figure 2 suggests, a collaboration between Industrial Light & Magic (ILM) and Lucasfilm, along with many other contributors, introduced this technology for the live-action Star Wars series “The Mandalorian.”

How was The Volume created?

The Volume marks an important shift in film production. Most films that require edited backgrounds or fantastical environments, or that are simply not shot on location, rely on green and blue screen compositing to create imaginative environments. With this method, however, actors must perform amid a sea of green or blue with no real environment around them (see figure 3).

Figure 3: A traditional green screen set “volume.” Source: TechCrunch.

Additionally, production staff must compose the scenes and direct with mockups of the environment or simply their creative imagination. For characters with reflective costumes, such as the Mandalorian, green or blue reflections must be edited out of all shots and re-edited to include more natural and realistic reflections of a CGI background.

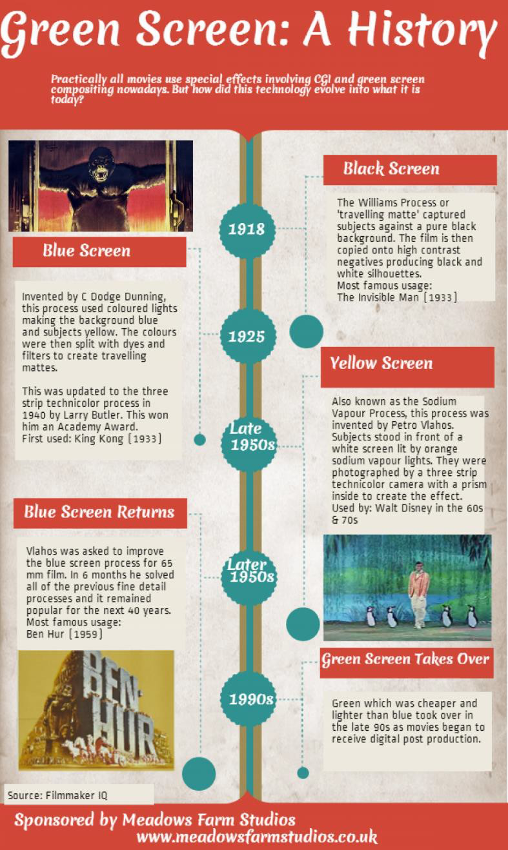

Figure 4: An infographic detailing the history of green screen technology. Source: Meadows Farm Studios.

Technology utilizing colored screens, however, has been revolutionary over the past one hundred years in filmmaking (see figure 4).

Volume technology, however, was inspired by more than just green screen history. “The Mandalorian” series producer Jon Favreau championed the idea of approaching the production with a new form of stage set. His work on the live action “Lion King,” which used VR camerawork that simulated real-life cinematography, and on the live action “Jungle Book,” which featured giant LED lights for specific sequences, inspired Favreau to think outside the box about how the team could recreate the fantasy environments of the Star Wars universe.

Under the guidance of Lucasfilm president Kathleen Kennedy, Favreau pulled together a think tank of video technicians, gamers, effect artists, and many others with a wide range of backgrounds and inspirations to create the concept. Ultimately, the technology created various opportunities to grow the connection between engineers and artists in the world of film. ILM SVP and general manager, Janet Lewin, discussed how collaborative this project truly was:

With StageCraft, we have built an end-to-end virtual production service for key creatives. Directors, production designers, cinematographers, producers and visual effects supervisors can creatively collaborate, each bringing their collective expertise to the virtual aspects of production just as they do with traditional production.

Favreau proudly notes that The Volume marks “The Mandalorian” as the first production to ever use this form of technology.

How does The Volume work?

The Volume combines filmmaking and video game software to connect camera work, scene display, and visual effects. To begin, the ceiling and walls are covered in high-definition LED panels. These panels display the fantasy worlds that a team of artists, engineers, and visual effects crews create, and they work together seamlessly. Director Taika Waititi describes, “Sometimes, you’d walk into the Volume and you wouldn’t recognize where the screens were; it was that flawless.” Film crews still use physical props that work with these environments. However, just a few feet behind these props, The Volume takes over and completes the environment the props begin (see figure 5).

Figure 5: An illustration showing how The Volume incorporates physical set design. Source: Vox.

The geometry of the sets and backgrounds is loaded into the computer system that controls The Volume. Crucially, this computer system is linked to the production camera, so when the camera or an actor moves, the tracking data updates and the background shifts to match. This real-time correction keeps the background's perspective consistent with what the camera should see. The technology supports both simple, static backgrounds, such as some of the open desert scenes on Tatooine, and more complex moving sequences, like the lava river scene on Nevarro in the season finale of “The Mandalorian.” Additionally, because the backgrounds can move, designers can add set pieces, such as ships, to the background (see figure 6).

Figure 6: Illustration depicting how The Volume works with both physical and digital set pieces. Source: Vox.
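The camera-tracking idea can be illustrated with a little geometry. The sketch below is a simplified, top-down model and not ILM's implementation: it assumes a flat wall, a camera whose tracked position is known, and a hypothetical `wall_position` helper that finds where a virtual object must be drawn on the wall so that it lines up with the camera's line of sight. All names and numbers are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """A top-down position, in feet (illustrative units)."""
    x: float  # distance along the LED wall
    z: float  # distance from the wall: negative = stage side, positive = virtual side


def wall_position(camera: Point, virtual: Point) -> float:
    """Return the x coordinate on the wall (z = 0) where `virtual` must be drawn.

    The point is found by intersecting the straight line from the camera
    to the virtual object with the wall plane.
    """
    if camera.z >= 0:
        raise ValueError("camera must be on the stage side of the wall (z < 0)")
    if virtual.z < 0:
        raise ValueError("virtual object must be on or behind the wall (z >= 0)")
    # Fraction of the way from the camera to the object at which the line hits z = 0.
    t = -camera.z / (virtual.z - camera.z)
    return camera.x + t * (virtual.x - camera.x)


if __name__ == "__main__":
    mountain = Point(x=0.0, z=95.0)  # far away in the virtual world
    rock = Point(x=0.0, z=5.0)       # just behind the wall
    for camera_x in (0.0, 2.0):
        camera = Point(x=camera_x, z=-5.0)
        print(camera_x, wall_position(camera, mountain), wall_position(camera, rock))
```

In this toy model, moving the camera sideways makes the distant mountain slide along the wall almost as far as the camera itself, while the nearby rock shifts much less. That difference is the parallax a real location would produce, and it is why the background has to be redrawn whenever the camera moves.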

More specifically, eleven interlinked computers produce the images on the walls of The Volume. Three processors and four servers render in real time to provide 4K-quality images that blend seamlessly across the walls and ceiling. However, because displaying such large images requires enormous processing power, most of the wall shows a lower-resolution image. The system renders a high-resolution image only for the area within the tracked camera’s view at a given moment, which is the part the lens actually captures (see figure 7).

Figure 7: An image depicting how the cameras and walls of The Volume connect to produce high-resolution imagery. Source: ILMVFX.
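The idea of spending rendering power only where the camera is looking can be sketched in a few lines. The example below is an illustration under stated assumptions, not the StageCraft software: it assumes the camera sits at the center of a curved wall, describes each panel by the angle of its center, and uses hypothetical names (`angular_difference`, `panel_quality`) and an invented field of view.

```python
def angular_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def panel_quality(panel_angles, camera_heading, fov_degrees):
    """Label each panel 'high' if its center lies inside the camera's
    horizontal field of view, otherwise 'low'.

    Assumes the camera is at the center of the curved wall, so a panel's
    angle can be compared directly with the camera's heading.
    """
    half_fov = fov_degrees / 2.0
    return [
        "high" if angular_difference(angle, camera_heading) <= half_fov else "low"
        for angle in panel_angles
    ]


if __name__ == "__main__":
    # Seven illustrative panels spread across a 270-degree wall.
    panels = [-135, -90, -45, 0, 45, 90, 135]
    print(panel_quality(panels, camera_heading=0, fov_degrees=100))
    print(panel_quality(panels, camera_heading=90, fov_degrees=100))
```

As the camera pans, the set of panels marked "high" follows it, while the rest of the wall can stay at lower resolution and still light the actors and set.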

How does The Volume affect film production?

The Volume provides complete immersion for the whole crew and set. Production can move between various settings and backgrounds within the same day, allowing productions to film far more quickly. Traditional filming struggles, for example, with sunset or dawn scenes and with scenes that take multiple days to shoot, which often require filmmakers to accept small continuity errors. The Volume allows a dawn or sunset to last for as long as filmmakers need. Production truly operates differently with the flexibility The Volume offers, as the following video explains.

Figure 8: A video in which various individuals share how The Volume affects different parts of film production. Source: ILMVFX.

How does The Volume affect film set dynamics?

While the technology changes the entire look and feel of the set by immersing everyone in the visual world of the production, it also changes the groups of people on set. The technology brings together a group of artists and engineers, called the Brain Bar on “The Mandalorian” set, who control the visual display, “ready to transport those standing inside of it literally anywhere.” The Brain Bar renders and manipulates the environment in real time, moving elements of the scene, changing lighting, and integrating motion in the backgrounds. For example, the Brain Bar adds sparks, steam, and moving people to the virtual environments. Adding people to the background changes the dynamics of a production, because The Volume allows fewer extras to be present on set at one time.

Potentially, this technology could have provided an opportunity for increased filming during the COVID-19 pandemic, when caps on the number of people in a space limited production. Post-pandemic research will determine how useful the technology really was during the global crisis.

How does The Volume affect acting?

The Volume creates an immersive setting that sets an entire mood. Actors can visually engage with elements present in front of them, not just a green screen. If, for example, an actor is pointing to something or reacting to a visual element, the reaction can be more realistic and natural because the element really is in front of them. Giancarlo Esposito, an actor in “The Mandalorian,” explained how this technology changed acting for him:

In the old days, we had greenscreen, which created our environment behind us, and now this world creates all of our solar system, our sky, our earth, but it’s really being projected through a computer. So I find that your imagination has to fill in the rest. And so it’s a different style of acting — you’re looking around the room, you may have some props to stand on, or the vehicle that you arrived in, whether it be my TIE Fighter, or something else. But there’s nothing else there, except what’s projected.

When acting collaboratively, actors can actively understand the environments around them. With traditional green screen technology, actors can each have a different concept of what is occurring around them. The Volume provides the actual scene in front of them, allowing for a synced-up understanding of the full environment (see figure 9).

Figure 9: A photograph of various actors and film crew engaging on set with The Volume behind. Source: American Cinematographer.

How does The Volume affect editing?

With a traditional green screen, everything and everyone on set picks up faint green reflections that must be edited out. The Volume especially benefited production for “The Mandalorian” since the show includes reflective armor and props, including weapons and ships. Most importantly, The Volume allows for real-time, in-camera compositing on set. With prior technology, scenes with the fantastical elements of the Star Wars universe could take twelve hours or more per frame to render. Even with the most contemporary visual effects techniques, and with the visual effects workflow running alongside production, the editing for a series like “The Mandalorian” would extend post-production significantly. With the scenes produced in advance of the shoot, set pieces and props could be used in real time, creating final in-camera visual effects and allowing for much less post-production editing. These time-saving techniques are discussed in further detail in the video “The technology that’s replacing green screen.”

Figure 10: A video detailing the various changes to editing and filmmaking that The Volume provides. Source: Vox.
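To put the twelve-hours-per-frame figure in perspective, a rough back-of-the-envelope calculation helps. The sketch below takes the twelve hours from the text above and assumes 24 frames per second (a common cinema frame rate, not a figure from the sources) and a hypothetical render farm in which every machine works on a different frame at the same time. The function name and the farm size are illustrative only.

```python
def offline_render_estimate(seconds_of_footage, hours_per_frame=12.0,
                            frames_per_second=24, machines=1):
    """Estimate the cost of rendering footage frame by frame.

    Returns (frames, machine_hours, wall_clock_days). Assumes frames render
    independently and are split evenly across `machines`.
    """
    if machines < 1:
        raise ValueError("machines must be at least 1")
    frames = seconds_of_footage * frames_per_second
    machine_hours = frames * hours_per_frame
    wall_clock_days = machine_hours / machines / 24
    return frames, machine_hours, wall_clock_days


if __name__ == "__main__":
    # One minute of footage on a hypothetical 100-machine render farm.
    frames, machine_hours, days = offline_render_estimate(60, machines=100)
    print(frames, machine_hours, round(days, 1))
```

Under these assumptions, a single minute of footage is 1,440 frames and about 17,280 machine-hours of rendering, which is why capturing finished backgrounds in camera can shorten post-production so much.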

It is important to note that even though editors produce the scenes in advance and project them digitally, they do not create the scenes entirely digitally. In fact, teams of photographers photographically “scanned” various locations, such as Iceland and Utah, for the environments in “The Mandalorian.” In this process, photographers wear custom rigs with scanners and cameras strapped to their bodies, allowing them to gather over 1,800 images, on average, of the environments. Roughly 30 to 40 percent of The Volume’s backdrops were produced this way.

What does this technology mean for the entertainment industry?

When Lucasfilm released “The Mandalorian,” showing off the visual effects achieved with The Volume, its parent company Disney decided to extend the technology to Marvel, starting with “Thor: Love and Thunder.” With Marvel, a powerhouse in movie production, adopting this technology, many more of its films may use it.

The success of Lucasfilm only hints at the expansion of the technology. Kim Libreri, chief technical officer of Epic Games, who collaborated with the crew on The Volume, recalled its first use: “Everybody sat on the stage going, ‘Oh my god, I think this is the future. I think we’ve all together, as a team, worked out what is going to be the template for making visual effects in-camera.’” Libreri additionally referred to The Volume as “The birth of a new way of working.” This sort of filmmaking truly revolutionizes production. In fact, filmmakers argue that because filming happens in one location, and because The Volume allows for less reshooting and post-production editing, the technology requires less time and money. Quicker filmmaking allows for faster content production. With both the COVID-19 pandemic and the rise of streaming platforms, this sort of technology could change how fast viewers receive content.

How does this affect small entertainment organizations?

The technology brings together a wide range of people, from artists to engineers to gaming experts to visual effects editors and many more. It creates demand for more people with creative and technical interests. The film industry already employs a significant portion of the workforce. In total, 2.5 million people work in film industry jobs, which pay wages of $181 billion annually. In the past eight years alone, total employment in the U.S. motion picture and sound recording industry has increased by 36%. This technology could propel that growth to new levels. For many up-and-coming filmmakers, this sort of technology could be their career.

For many film schools and groups, incorporating awareness of this technology into the curriculum is crucial. For example, Steeltown Entertainment Project, an organization focusing on the development of independent filmmakers and classes for youth in media, has already begun building students’ awareness of The Volume’s technology. Although an organization as small as Steeltown, which has a total annual revenue of less than $500,000, cannot yet afford to incorporate this sort of technology into its courses, it has been able to create awareness through partnerships. In December 2020, the organization hosted Steve Jacks, a visual effects editor of “The Mandalorian,” to speak with film production students and recorded the discussion to share with others. While many small organizations currently lack the financial capability to incorporate the physical technology, partnerships in the film community can prove instrumental in engaging the next generations of filmmakers in this growing industry.

Resources

Baver, Kristin. “This Is the Way: How Innovative Technology Immersed Us in the World of The Mandalorian.” StarWars.com, May 22, 2020. https://www.starwars.com/news/the-mandalorian-stagecraft-feature.

Reyes, Maria Cecilia. “Screenwriting Framework for an Interactive Virtual Reality Film.” ResearchGate, 2017. https://www.researchgate.net/profile/Maria-Cecilia-Reyes/publication/318792910_Screenwriting_Framework_for_an_Interactive_Virtual_Reality_Film/links/597f583b458515687b4b438a/Screenwriting-Framework-for-an-Interactive-Virtual-Reality-Film.pdf.

Coldewey, Devin. “How 'The Mandalorian' and ILM Invisibly Reinvented Film and TV Production.” TechCrunch, February 20, 2020. https://techcrunch.com/2020/02/20/how-the-mandalorian-and-ilm-invisibly-reinvented-film-and-tv-production/.

DesJardins, Jordan. “Marvel Pumps Up 'The Volume' In The MCU With 'Mandalorian' Technology.” Geek Anything, February 25, 2021. https://geekanything.com/2021/02/25/marvel-pumps-up-the-volume-in-the-mcu-with-mandalorian-technology/.

“Driving Economic Growth.” Motion Picture Association, May 11, 2020. https://www.motionpictures.org/what-we-do/driving-economic-growth/.

Escobar, Eric. “Hacking Film: The Basics of 360 and VR Filmmaking.” Film Independent, May 21, 2018. https://www.filmindependent.org/blog/hacking-film-vr-filmmaking-basics-use/.

Failes, Ian. “Coming Soon: a Whole Heap of New ILM StageCraft Stages.” befores & afters, September 10, 2020. https://beforesandafters.com/2020/09/11/coming-soon-a-whole-heap-of-new-ilm-stagecraft-stages/.

Gooderick, Matt. “Virtual Reality Films Comes to Cannes, Spielberg Sounds Warning.” Reuters. Thomson Reuters, May 18, 2016. https://www.reuters.com/article/us-filmfestival-cannes-virtual-reality-idUSKCN0Y91NZ.

Gupton, Nancy. “What's the Difference Between AR, VR, and MR?” The Franklin Institute, 2017. https://www.fi.edu/difference-between-ar-vr-and-mr.

Harveston, Kate. “How Virtual Reality Is Changing the Entertainment Industry.” The Spread, March 26, 2019. http://cinemajam.com/mag/features/how-virtual-reality-is-changing-the-entertainment-industry.

Hicks, Michael. “VR Films: the Future of Cinema?” TechRadar. TechRadar, December 14, 2018. https://www.techradar.com/news/vr-films-the-future-of-cinema.

Holben, Jay. “The Mandalorian: This Is the Way Cinematographers Greig Fraser, ASC, ACS and Barry ‘Baz’ Idoine and Showrunner Jon Favreau Employ New Technologies to Frame the Disney Plus Star Wars Series.” American Cinematographer. The American Society of Cinematographers, January 20, 2021. https://ascmag.com/articles/the-mandalorian.

“How 'The Mandalorian' and ILM Invisibly Reinvented Film and TV Production: Science and Technology: Before It's News.” Before It's News | People Powered News, February 21, 2020. https://beforeitsnews.com/science-and-technology/2020/02/how-the-mandalorian-and-ilm-invisibly-reinvented-film-and-tv-production-2972427.html.

“ILM Expands Virtual Production Services, Global Trainee Program.” Animation World Network, 2020. https://www.awn.com/news/ilm-expands-virtual-production-services-global-trainee-program.

Internal Revenue Service, Steeltown Entertainment Project Form 990 § (2018). https://pdf.guidestar.org/PDF_Images/2019/680/559/2019-680559177-202021349349301327-9.pdf.

Lulgjuraj, Susan. “The Mandalorian: The Volume Virtual Set Adds to Great Storytelling.” Dork Side of the Force. FanSided, June 24, 2020. https://dorksideoftheforce.com/2020/06/24/the-mandalorian-volume/.

“Meadows Farms Studios Blog.” Meadows Farm Studios - Aerial Film and Photography - Green Screen, 2020. http://www.meadowsfarmstudios.co.uk/blogs.html.

Srishti. “DC and Marvel Movies to Release from 2020 to 2022 in Massive Number.” Gizmo Writeups, November 4, 2020. https://gizmowriteups.com/2020/11/04/dc-and-marvel-movies-to-release-from-2020-to-2022-in-massive-number/.

“Steve Jacks Lecture.” Steeltown, 2021. https://www.steeltown.org/project/.

Stoll, Julia. “Employment in U.S. Motion Picture and Recording Industries 2020.” Statista, January 13, 2021. https://www.statista.com/statistics/184412/employment-in-us-motion-picture-and-recording-industries-since-2001/.

Taylor, Drew. “Here's How The Mandalorian Pulled Off Its Groundbreaking Visual Effects.” Collider, May 22, 2020. https://collider.com/mandalorian-visual-effects-explained/.

The Jaunt Team. The Cinematic VR Field Guide, 2018. https://creator.oculus.com/learn/cinematic-vr-field-guide/.

The Technology That's Replacing the Green Screen. YouTube. Vox, 2020. https://www.youtube.com/watch?v=8yNkBic7GfI.

The Virtual Production of The Mandalorian, Season One. YouTube. ILMVisualFX, 2020. https://www.youtube.com/watch?v=gUnxzVOs3rk.

Weisberger, Mindy. “VR at Cannes: How Will Virtual Reality Change Film?” LiveScience. Purch, May 20, 2016. https://www.livescience.com/54820-vr-films-at-cannes-film-festival.html.