Written by Mina Tocalini

What Are Deepfakes?

Deepfakes, or AI-generated synthetic media, are becoming increasingly prominent in the digital age and are being used across industries more frequently than ever before. Most common uses of AI modify a given piece of existing media. The AI systems that generate deepfakes instead attempt to simulate that media, often learning from an individual’s likeness as source material.

Creating convincing deepfakes requires a robust source of media, which is typically gathered from the internet, social media, and third-party data exchanges. Video is an especially rich source. At standard frame rates of 24 to 30 frames per second, a single 1-minute video contains 1440-1800 individual pictures. Videos alone can therefore provide enough data for an AI to create a simulated version of a person.
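The 1440-1800 figure comes from multiplying a clip's length by its frame rate. The quick check below assumes the common rates of 24 and 30 frames per second. The helper name `frames_in_clip` is hypothetical.

```python
def frames_in_clip(seconds: float, fps: float) -> int:
    """Number of still frames in a clip of the given length and frame rate."""
    return round(seconds * fps)


for fps in (24, 30):
    print(f"{fps} fps x 60 s = {frames_in_clip(60, fps)} frames")
# 24 fps x 60 s = 1440 frames
# 30 fps x 60 s = 1800 frames
```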

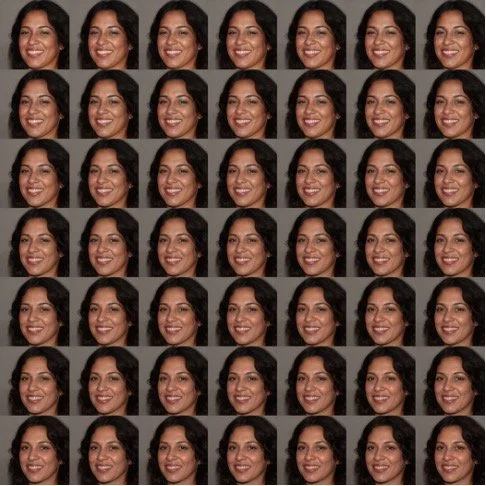

A common argument against the threat of deepfakes is that they are easy to tell apart from real people. This is not the case, as ThisPersonDoesNotExist.com demonstrates: the website generates a new fake face each time the page is refreshed. The images presented below show one of these fake people, along with a series of generated variations that, when played as a video, animate the face so that it appears to blink and smile more broadly (for more information on ThisPersonDoesNotExist.com, read Hill and White’s article in The New York Times).

Deepfake woman. Source: The New York Times

How Do Deepfakes Work?

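Many deepfakes are created with a neural network method called generative adversarial networks (GANs). A GAN pairs two networks. The Generator Network creates content, and the Discriminator Network ‘critiques’ or ‘tests’ that content until the Generator Network’s output passes. The Discriminator Network is given real reference examples, which act as the basis of its critique. Its feedback is used to update the Generator Network, so the generated content grows steadily harder to distinguish from the real examples.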

The GAN process. Source: Pathmind
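The adversarial loop described above can be illustrated with a deliberately tiny example. This is a minimal sketch under hypothetical assumptions and is not how production deepfake models are built, since those use deep networks trained on images. In this toy, the "real" data are single numbers drawn around 4.0. The Generator Network can only shift random noise by a learned offset `theta`. The Discriminator Network is a single logistic unit with weight `w` that outputs the probability that a number is real. Each training step first updates the discriminator to score real samples high and generated samples low. It then updates the generator to fool the discriminator.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 3000, batch: int = 64, lr: float = 0.05,
                  real_mean: float = 4.0, seed: int = 0):
    """Hypothetical 1-D GAN: one generator parameter, one discriminator weight.

    Real data:     x ~ N(real_mean, 1)
    Generator:     G(z) = z + theta, z ~ N(0, 1)   (can only shift its output)
    Discriminator: D(x) = sigmoid(w * x)           (probability that x is real)
    """
    rng = random.Random(seed)
    theta, w = 0.0, 0.0

    for _ in range(steps):
        # Discriminator step: lower -log D(real) - log(1 - D(fake)),
        # i.e. learn to score real samples high and generated samples low.
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + theta for _ in range(batch)]
        grad_w = sum(-(1.0 - sigmoid(w * x)) * x for x in real)
        grad_w += sum(sigmoid(w * x) * x for x in fake)
        w -= lr * grad_w / batch

        # Generator step: lower -log D(G(z)), i.e. move theta in the
        # direction that makes the discriminator call the fakes "real".
        fake = [rng.gauss(0.0, 1.0) + theta for _ in range(batch)]
        grad_theta = sum(-(1.0 - sigmoid(w * x)) * w for x in fake)
        theta -= lr * grad_theta / batch

    return theta, w


theta, w = train_toy_gan()
print(f"generator offset ~ {theta:.2f}, discriminator weight ~ {w:.3f}")
```

As training proceeds, `theta` drifts toward the real mean of 4.0 and `w` shrinks toward 0. At that point the discriminator outputs 0.5 for every input, so it can no longer tell real samples from generated ones. The generator step uses the common "non-saturating" loss, -log D(G(z)), rather than minimizing log(1 - D(G(z))) directly.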

Deepfakes vs AI Photoshop

GANs also power the AI workflow behind Photoshop’s Neural Filters. Both deepfakes and AI-photoshopped images create synthetic media based on a source. However, the consequences of deepfakes are more concerning because their creation and use involve an element of performance. Deepfakes are created by analyzing large troves of data, so they can mimic movement and sound. Photoshop’s Neural Filters are a less capable AI tool because the software is limited to modifying a single image.

Despite the difference in sophistication between these AI-based tools, the concerns raised by deepfakes could eventually extend to future versions of Photoshop’s Neural Filters. For now, however, Photoshop is used to modify pictures of real people, creating synthetic pixels to enhance a given image. The software resembles deepfake technology in its ability to change the expression on a person’s face. Its alterations are less sophisticated, though, and are more akin to those of Deep Nostalgia from MyHeritage.com, which is still in the uncanny valley stage of its development.

GIF demonstrating possible uses of Neural Filters in Photoshop. Source: TNW News

Damage of Deepfakes

Misinformation

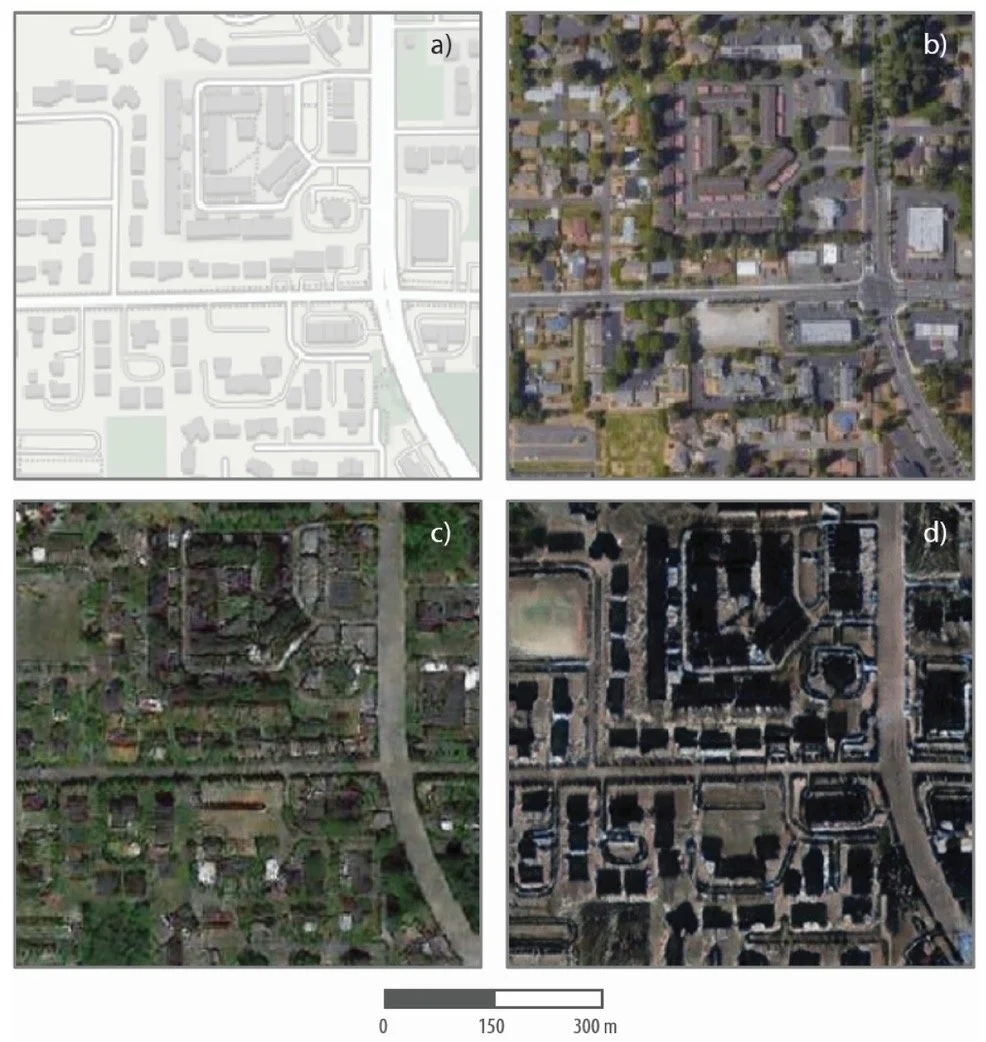

Deepfake satellite imagery created by University of Washington researchers. Source: The Verge.

While deepfakes are most commonly associated with people, they extend beyond the simulation of humans. Geographic deepfakes, which are AI-generated images of landscapes, are another application of the technology. This category of deepfake presents a national security threat because it can be used to generate false satellite imagery. Such synthetic imagery could mislead military strategy, cause panic about fictitious natural disasters, or conceal concerning activity occurring abroad. The risk is serious because satellite imagery has been used to expose malicious activity that governments denied, such as human rights violations.

Geographic deepfakes are more easily mistaken for real images because satellite imagery is often low-resolution. Misinformation of this kind could have dire global consequences for human rights. The existence of geographic deepfakes has heightened concern that governments could claim authentic satellite imagery was fabricated in order to avoid punishment. There is also concern that they could create deepfakes of their own to deny ongoing human rights violations. Citizens of heavily censored countries under strict government control may be at higher risk of becoming victims of human rights violations, with less access to aid.

Theft and Fraud

Source: The Wall Street Journal

In 2019, The Wall Street Journal reported a case in which deepfake audio was used to steal $243,000 from a U.K.-based energy firm. The fraudsters called the firm’s CEO and asked for a transfer of funds, using a synthetic voice that mimicked the CEO’s boss, the chief executive of the firm’s German parent company. The caller phoned two more times in an effort to obtain more money, which made the U.K. firm suspicious, and no further transfers were made. The money from the first transfer was sent to a Hungarian bank account, moved to an account in Mexico, and distributed further from there. Although the movement of the cash was traced, no suspects had been identified.

This incident at the unnamed European energy firm shows that deepfake-enabled theft and fraud is a growing concern for businesses. Investment in cybersecurity and employee awareness of deepfake technology is crucial, especially since the technology will only improve as AI development continues.

Cyberbullying

Source: The New York Times

Deepfakes have intensified cyberbullying and harassment, increasing the potential to wrongfully ruin an individual’s reputation. In Doylestown, PA, Raffaela Maria Spone was accused of creating deepfakes to harass three teenagers in her daughter’s cheerleading program. The content she allegedly generated included videos portraying the teens partying, drinking, and vaping, as well as explicit and other disturbing images.

This case is deeply concerning. Cyberbullying of this caliber has serious implications for mental health, and deepfakes used in this way can damage victims’ futures if the content cannot be proven false. It is also important to acknowledge that such attacks can have a disproportionate impact on teens who already face racial or class inequality.

Detection and Prevention

PPG Method

Although deepfakes can seem impossible to detect, technologies are being developed to identify them. The photoplethysmography (PPG) method can identify deepfakes by measuring subtle changes in skin color caused by blood flow as the heart beats. Synthetic media shows noisier biological signals than the original source footage.

Demonstration of the PPG method of deepfake identification. Source: VentureBeat

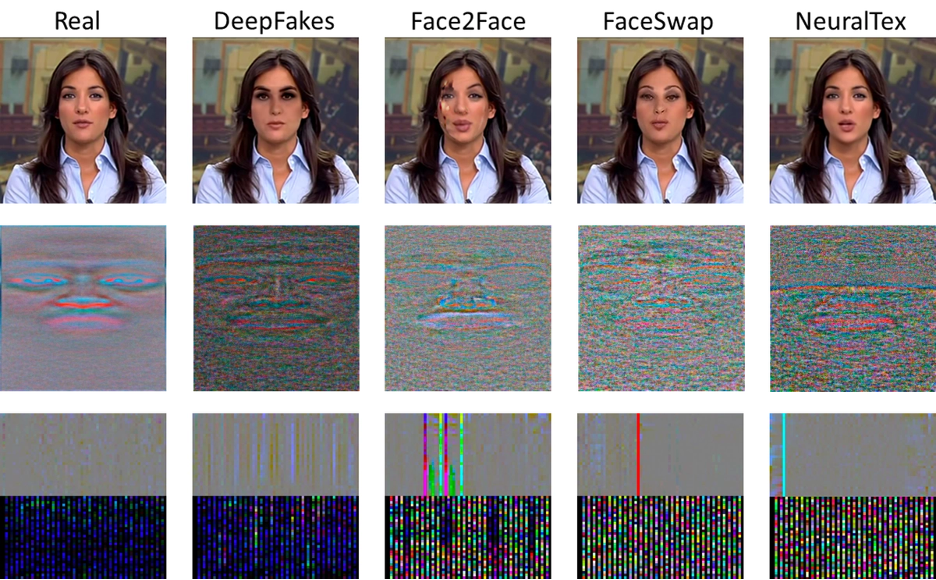
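The core idea behind PPG can be sketched in a few lines. This minimal sketch assumes that the average color intensity of the face region (commonly the green channel) has already been extracted for each frame. Face tracking is omitted, and so is the classifier used in the research VentureBeat describes. The sketch checks whether the trace contains a clear periodic pulse in a plausible heart-rate band. That band is set to 0.7-4 Hz, or about 42-240 beats per minute, which is an illustrative choice. The check measures what share of the band's spectral power falls in its strongest peak. The function names and the simulated traces are hypothetical.

```python
import cmath
import math
import random


def band_spectrum(signal, fps, lo_hz=0.7, hi_hz=4.0):
    """Power at each DFT frequency inside the plausible heart-rate band."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [v - mean for v in signal]  # remove the constant skin tone
    spectrum = []
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if lo_hz <= freq <= hi_hz:
            coeff = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n)
                        for t in range(n))
            spectrum.append((freq, abs(coeff) ** 2))
    return spectrum


def pulse_estimate(signal, fps):
    """Return (heart rate in bpm, share of band power in the strongest peak)."""
    spectrum = band_spectrum(signal, fps)
    peak_freq, peak_power = max(spectrum, key=lambda item: item[1])
    total = sum(power for _, power in spectrum)
    return peak_freq * 60.0, peak_power / total


# Hypothetical traces: 10 s at 30 fps. The "real" trace has a 1.2 Hz
# (72 bpm) pulse plus sensor noise; the "suspect" trace is noise only.
fps, n = 30, 300
rng = random.Random(1)
real_trace = [100 + math.sin(2 * math.pi * 1.2 * t / fps) + rng.gauss(0, 0.3)
              for t in range(n)]
suspect_trace = [100 + rng.gauss(0, 0.3) for _ in range(n)]

print(pulse_estimate(real_trace, fps))
print(pulse_estimate(suspect_trace, fps))
```

The simulated genuine trace concentrates most of its band power in one peak, at 72 bpm. The noise-only trace spreads its power across many frequencies. A plain discrete Fourier transform keeps the sketch dependency-free, but a real pipeline would use an FFT library.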

Microsoft’s Video Authenticator

Microsoft’s Video Authenticator uses AI to analyze a photo or video and provide a “percentage chance or confidence score” that the media has been artificially manipulated. The confidence score is determined by detecting the “blending boundary of the deepfake and subtle fading or greyscale elements” that may not be noticeable to the average viewer.

Demonstration of Microsoft’s Video Authenticator. Source: Microsoft

Project Origin

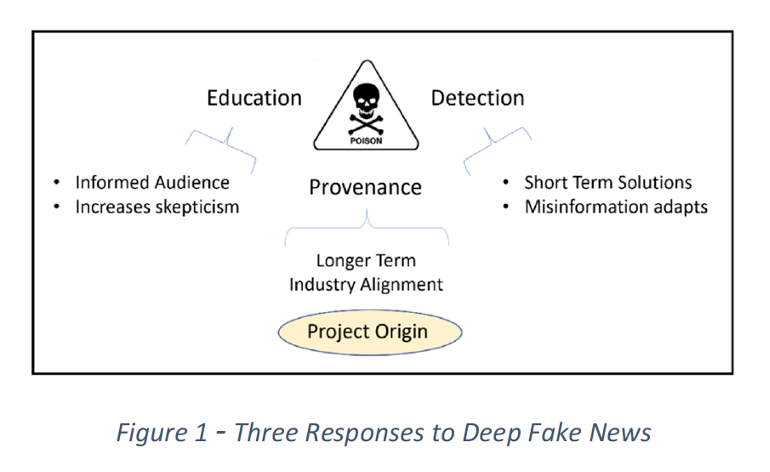

The BBC, The New York Times, Microsoft, and CBC/Radio-Canada started Project Origin as an alliance between the publishing and technology industries to combat misinformation spread through manipulated media. The alliance is currently developing a process that verifies the integrity of a video using a metadata manifest that describes the media’s origin and how it has been altered.

Three Responses to Deepfake News: diagram depicting how Project Origin aims to prevent deepfake abuse. Source: Project Origin
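Project Origin's actual manifest format is not described here. The sketch below is therefore a minimal, hypothetical illustration of the general idea. It records a hash of the content alongside the content's origin and edit history, signs that record, and rejects the video if either the content or the record changes. The field names (`origin`, `edits`, `content_sha256`), the helper names, and the shared HMAC key are all illustrative assumptions. Production provenance systems generally rely on public-key signatures and certificates rather than a shared secret.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret standing in for a publisher's signing key.
PUBLISHER_KEY = b"example-publisher-key"


def _sign(body: dict) -> str:
    """HMAC-SHA256 over a canonical (sorted-key) JSON encoding of the body."""
    payload = json.dumps(body, sort_keys=True).encode()
    return hmac.new(PUBLISHER_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, origin: str, edits: list) -> dict:
    """Describe where the media came from and how it was changed, then sign it."""
    body = {
        "origin": origin,
        "edits": edits,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    return {"body": body, "signature": _sign(body)}


def verify(content: bytes, manifest: dict) -> bool:
    """True only if the manifest is authentic and still matches the content."""
    if not hmac.compare_digest(_sign(manifest["body"]), manifest["signature"]):
        return False  # manifest was altered or signed with a different key
    return manifest["body"]["content_sha256"] == hashlib.sha256(content).hexdigest()


video = b"example video bytes"
manifest = make_manifest(video, "Example Broadcaster", ["color corrected", "trimmed"])
print(verify(video, manifest))          # True
print(verify(video + b"!", manifest))   # False: content changed after signing
```

Hashing the content binds the manifest to one exact file. Signing the manifest means a forger cannot simply rewrite the edit history to hide a manipulation.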

Conclusion

Deepfakes can be a fun experiment for swapping faces, but their many malicious uses have permanently altered society’s trust in, and engagement with, media. Deepfake detection and public awareness are therefore imperative for mitigating the damage deepfakes cause. The PPG method and Microsoft’s Video Authenticator do this by detecting simulations directly. Project Origin does so by documenting the origin of media and recording where it has been altered. While these projects have made progress in combating deepfakes, it is unclear whether they will help against personal attacks such as fraud, theft, and cyberbullying. Regardless, synthetic media will continue to enter new spheres of influence. To ensure personal security, society must therefore remain vigilant in challenging the validity of sources and keep finding new ways to protect media and the truth.

Resources

“Animate Your Family Photos.” MyHeritage, www.myheritage.com/deep-nostalgia.

Burt, Tom. “New Steps to Combat Disinformation.” Microsoft On the Issues, 1 Sept. 2020, blogs.microsoft.com/on-the-issues/2020/09/01/disinformation-deepfakes-newsguard-video-authenticator/.

Hill, Kashmir, and Jeremy White. “Designed to Deceive: Do These People Look Real to You?” The New York Times, 21 Nov. 2020, www.nytimes.com/interactive/2020/11/21/science/artificial-intelligence-fake-people-faces.html.

Johnson, Khari. “AI Researchers Use Heartbeat Detection to Identify Deepfake Videos.” VentureBeat, 3 Sept. 2020, venturebeat.com/2020/09/03/ai-researchers-use-heartbeat-detection-to-identify-deepfake-videos/.

Larsson, Naomi. “How Satellites Are Being Used to Expose Human Rights Abuses.” The Guardian, Guardian News and Media, 4 Apr. 2016, www.theguardian.com/global-development-professionals-network/2016/apr/04/how-satellites-are-being-used-to-expose-human-rights-abuses.

Macaulay, Thomas. “How Photoshop's New Neural Filters Harness AI to Generate New Pixels.” TNW | Neural, 27 Apr. 2021, thenextweb.com/news/how-photoshops-new-neural-filters-harness-ai-to-generate-new-pixels.

Morales, Christina. “Pennsylvania Woman Accused of Using Deepfake Technology to Harass Cheerleaders.” The New York Times, 15 Mar. 2021, www.nytimes.com/2021/03/14/us/raffaela-spone-victory-vipers-deepfake.html.

Nicholson, Chris. “A Beginner's Guide to Generative Adversarial Networks (GANs).” Pathmind, wiki.pathmind.com/generative-adversarial-network-gan.

Project Origin, www.originproject.info/.

Stupp, Catherine. “Fraudsters Used AI to Mimic CEO's Voice in Unusual Cybercrime Case.” The Wall Street Journal, Dow Jones & Company, 30 Aug. 2019, www.wsj.com/articles/fraudsters-use-ai-to-mimic-ceos-voice-in-unusual-cybercrime-case-11567157402.

This Person Does Not Exist, thispersondoesnotexist.com/.

“Universal Declaration of Human Rights.” United Nations, www.un.org/en/about-us/universal-declaration-of-human-rights.

Vincent, James. “Deepfake Satellite Imagery Poses a Not-so-Distant Threat, Warn Geographers.” The Verge, 27 Apr. 2021, www.theverge.com/2021/4/27/22403741/deepfake-geography-satellite-imagery-ai-generated-fakes-threat.