By: Abigail Hartless

Introduction

Voice recognition technologies turn our devices into digital personal assistants that answer simple questions and sometimes even provide much-needed humor. They also enable live transcription, captioning, and more. But what if your language isn’t spoken? While researchers are working to develop sign language recognition technologies, there are no commercially available solutions as of now. As the performing arts seek to become more inclusive and diverse, one area that can easily be made more inclusive is language. People feel welcomed when organizations make spaces accessible to those who use many languages, including non-spoken ones.

Closed captioning devices for individuals who are deaf or hard of hearing have been in use for a while, but they produce written language, which varies immensely from signed language. In the past five years, companies have successfully developed devices that produce real-time spoken translations, such as Google Pixel Buds working with Google Translate. No comparable products exist for signed languages, which puts signers who are fluent only in American Sign Language or another signed language at a disadvantage: they are not being served in their native language. Reaching audiences in their native languages is a concept long used in language learning, and it is an equally important goal for arts organizations that want to be more accessible to diverse communities and individuals from diverse backgrounds.

What does sign language recognition technology look like now?

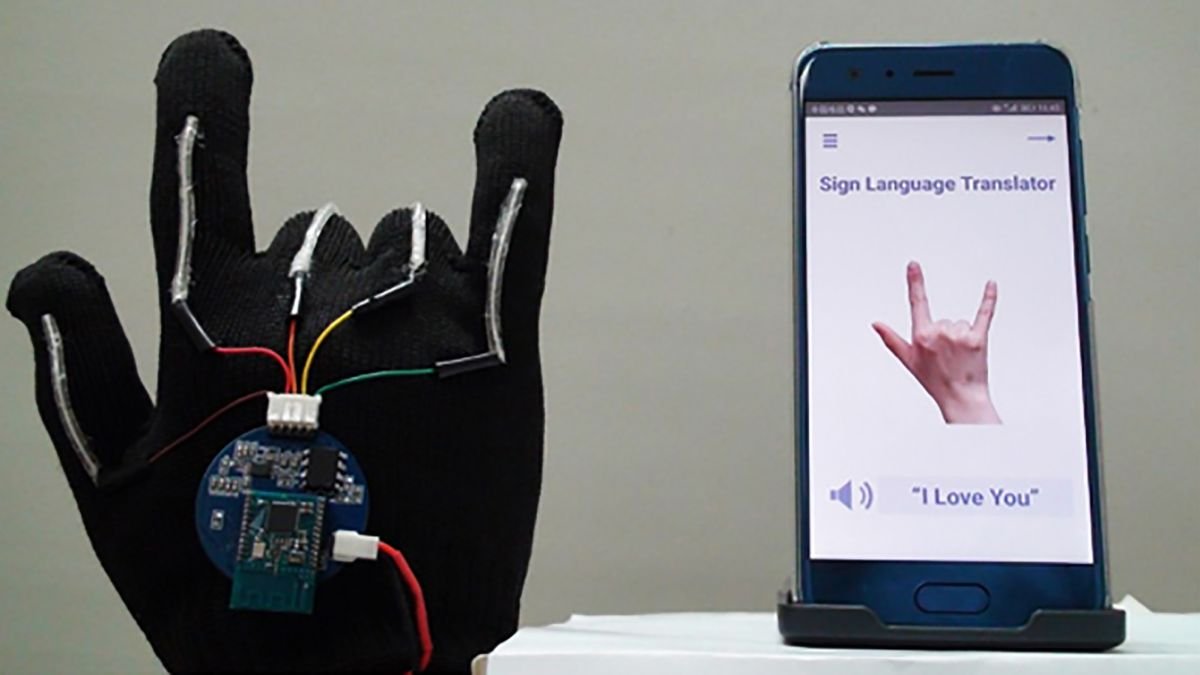

Technologies in development include camera-based motion capture programs and wearable glove and sensor devices. These technologies all capture the shape, placement, and motion of the hands, along with facial expressions, run that data through a neural network, and return a written-language translation. The incorporation of artificial intelligence models such as neural networks and large language models has made sign language recognition technologies much more accurate in recent years, as artificial intelligence allows for near-human-like language processing.
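To make that capture, process, and translate flow a little more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the three-point hand landmarks, the function names, the two example signs, and especially the classifier, which is a simple nearest-template match standing in for the neural network a real system would use. It shows the shape of the pipeline, not how any of the products mentioned here actually work.

```python
import math

# Hypothetical input format: each frame is a list of (x, y) hand landmark
# positions, with the wrist as the first point. Real systems track many more
# landmarks plus facial expressions; three points keep the sketch readable.
Frame = list[tuple[float, float]]


def extract_features(frames: list[Frame]) -> list[float]:
    """Summarize hand shape, placement, and motion as one feature vector."""
    first, last = frames[0], frames[-1]
    wrist_x, wrist_y = first[0]
    # Shape: landmark positions relative to the wrist in the first frame.
    shape = [c for (x, y) in first[1:] for c in (x - wrist_x, y - wrist_y)]
    # Placement: where the wrist starts in the camera frame.
    placement = [wrist_x, wrist_y]
    # Motion: how far the wrist travels between the first and last frame.
    motion = [last[0][0] - wrist_x, last[0][1] - wrist_y]
    return shape + placement + motion


def classify(features: list[float], templates: dict[str, list[float]]) -> str:
    """Return the label of the closest template.

    Assumption: this nearest-template match is a stand-in for the trained
    neural network described in the text.
    """
    return min(templates, key=lambda label: math.dist(features, templates[label]))


# Made-up example recordings (two frames each), not real sign data.
hello_example = [
    [(0.5, 0.2), (0.5, 0.1), (0.6, 0.1)],
    [(0.7, 0.2), (0.7, 0.1), (0.8, 0.1)],
]
thanks_example = [
    [(0.5, 0.6), (0.5, 0.5), (0.55, 0.5)],
    [(0.5, 0.8), (0.5, 0.7), (0.55, 0.7)],
]
TEMPLATES = {
    "HELLO": extract_features(hello_example),
    "THANK-YOU": extract_features(thanks_example),
}


def translate(frames: list[Frame]) -> str:
    """Capture -> features -> classifier -> written label."""
    return classify(extract_features(frames), TEMPLATES)


if __name__ == "__main__":
    print(translate(hello_example))
```

A production system would replace the hand-built templates with a model trained on many signers, which is where dialects, accents, and facial grammar become the hard part.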

Image: High-Tech Glove Translates Sign Language. Source: Picheta, Rob. “This new high-tech glove translates sign language into speech in real time”. Photograph. CNN. July 1, 2020.

Image: Hand Talk Technology. Source: Fogetti, Fernanda. “Hand Talk announces groundbreaking technology for movement recognition in sign languages”. Photograph. Handtalk. February 17, 2022.

Why do we treat signed languages differently from spoken ones?

Many individuals are ill-informed about signed languages and assume that American Sign Language is just a way to speak English with your hands (spoiler alert: it’s not). Signed languages have their own complex structures, just as spoken and written languages do, including dialects, accents, and other socially based variations. American Sign Language is historically related to French Sign Language and shares few structural similarities with English. These assumptions have led to a slow development period for sign language recognition technologies and a reliance on hearing interpreters for any cross-cultural communication between Deaf and hearing individuals. It is important to note that there is a cultural difference between deaf and Deaf. Lowercase-d deaf describes the medical state of having no hearing or being hard of hearing, while capital-D Deaf describes a cultural identity that often includes choosing not to use medical interventions to regain hearing. Instead, Deaf individuals communicate via signed languages and usually view a lack of hearing not as a medical problem but as a facet of their identities.

American Sign Language on Broadway

In 2019, Deaf actor Russell Harvard took over the role of Link Deas in the Broadway production of Harper Lee’s To Kill a Mockingbird. Playwright Aaron Sorkin reworked the script so that the actors playing the children Scout, Dill, and Jem serve as interpreters for Harvard’s signed lines. While Harvard does speak, having the children interpret allowed him to play Link Deas as a Deaf character and brought American Sign Language into a theatre space where it is rarely seen or portrayed.

Russell Harvard discusses playing Link Deas in To Kill a Mockingbird and more.

Interpreting Live Theatre

When signed languages are incorporated into theatre performances, productions need interpreters who resemble the characters being portrayed, because Deaf audiences spend most of the performance watching the interpreter offstage to follow what is happening in a scene. Signed languages also differ significantly across race and region, as the existence of Black American Sign Language in the United States shows, so it is crucial to have interpreters who resemble the actors whose speech they are interpreting. However, a community does not always have access to interpreters who match the profile of the actors in a show.

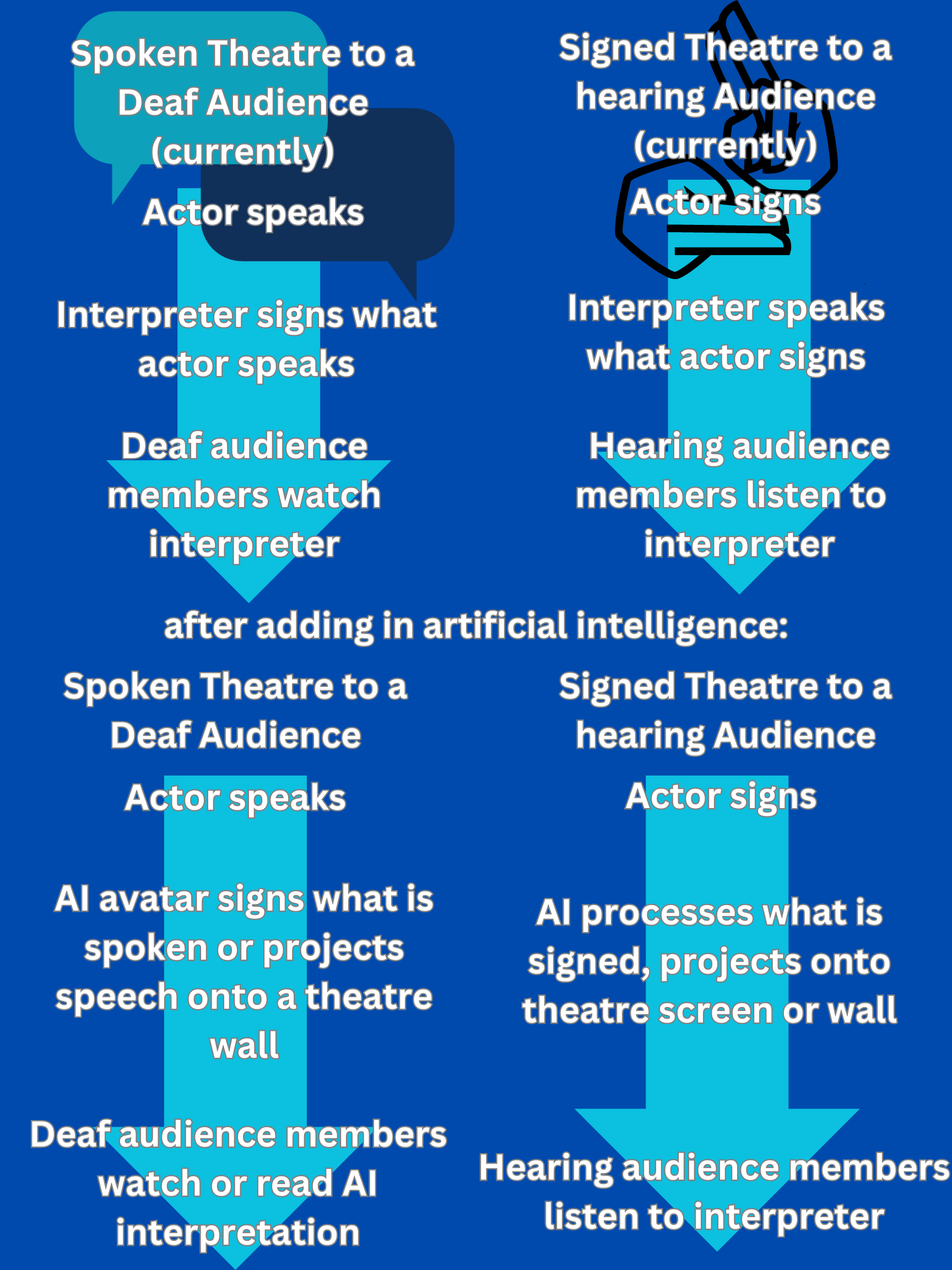

This is where signed language recognition technologies come into play. Let’s swap perspectives and imagine we’re attending a show performed completely in American Sign Language. We’re not fluent in ASL, so we spend the majority of the show looking down at a script of the lines. We lose the experience of watching the actors on stage and are essentially just reading in a theatre. With a well-developed sign language recognition technology, we could instead read a translation of the lines projected on a screen or wall in the theatre while still watching the performance.

Image: Infographic of Theatre to Deaf and Hearing audiences. Source: author.

What does Deaf West think?

Deaf West Theatre is a Tony Award-winning theatre in Los Angeles, California, that aims to “be the artistic bridge between the Deaf and hearing worlds.” Its mission statement continues: “Deaf West engages artists and audiences in unparalleled theater and media experiences inspired by Deaf culture and the expressive power of sign language, weaving ASL with spoken English to create a seamless ballet of movement and voice.” Deaf West’s work is characterized by merging spoken and signed languages and making the theatre experience accessible to both hearing and Deaf audiences. While the company has never formally addressed sign language recognition technologies, its history of incorporating multimedia experiences into its work suggests it may use them creatively once they become commercially available.

Is This Really Useful?

While some argue that sign language recognition technologies are not useful because they take communication out of the signed modality and into written language, these technologies have a variety of potential uses. Artificial intelligence models have become capable enough to recognize real-time speech and turn it into captions, an approach that has been widely adopted as an assistive technology for individuals who are deaf or hard of hearing. Speech recognition technologies and artificial intelligence models trained to interpret signed languages could be used to produce virtual assistant avatars that interact entirely by signing, a concept in the early phases of development within the gaming world.

Outside of the Theatre

Signed language recognition technologies could also have crucial real-time uses in industries other than entertainment. Paramedics and EMTs could use them in time-sensitive medical emergencies when there is no time to bring in an interpreter, which could potentially be life-saving. Signed language recognition devices would also allow Deaf individuals who primarily use signed languages to take part in experiences built on voice recognition, such as virtual assistants, interactive exhibits, and more. These technologies have an important role to play in cross-cultural communication between Deaf and hearing individuals, and incorporating them into the performing arts, specifically theatre, allows not only for more accessible productions but also for artistic innovation.

-

Borg, Mark and Kenneth P. Camilleri. 2019. “Sign Language Detection “in the Wild” with Recurrent Neural Networks.” ICASSP 2019 – 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (2019): 1637-1641.

Chin, Matthew. 2020. “Wearable-Tech Glove Translates Sign Language into Speech in Real Time.” UCLA, July 1, 2020. https://newsroom.ucla.edu/releases/glove-translates-sign-language-to-speech.

Deaf West Theatre. “About | Deaf West Theatre.” Accessed October 10, 2023. https://www.deafwest.org/about.

Matchar, Emily. 2019. “Sign Language Translating Devices Are Cool. But Are They Useful?” Smithsonian.com, February 26, 2019. https://www.smithsonianmag.com/innovation/sign-language-translators-are-cool-but-are-they-useful-180971535/.

Moryossef, Amit, and Yoav Goldberg. “Sign Language Processing.” Sign Language Processing. Accessed September 30, 2023. https://research.sign.mt/.

Papastratis, Ilias, Christos Chatzikonstantinou, Dimitrios Konstantinidis, Kosmas Dimitropoulos, and Petros Daras. 2021. “Artificial Intelligence Technologies for Sign Language.” Sensors 21, no. 17 (2021): 5843. https://doi.org/10.3390/s21175843.

Parogni, Ilaria. 2023. “How These Sign Language Experts Are Bringing More Diversity to Theater.” The New York Times, January 7, 2023, sec. Arts. https://www.nytimes.com/2023/01/07/arts/sign-language-experts-diversity-theater.html.

Selleck, Emily. “To Kill a Mockingbird’s Russell Harvard Marks an Historic Milestone for Deaf Actors on Broadway.” Playbill. Accessed September 30, 2023. https://playbill.com/article/to-kill-a-mockingbirds-russell-harvard-marks-an-historic-milestone-for-deaf-actors-on-broadway.

Wen, Feng, Zixuan Zhang, Tianyiyi He, and Chengkuo Lee. 2021. “AI Enabled Sign Language Recognition and VR Space Bidirectional Communication Using Triboelectric Smart Glove.” Nature Communications, September 10, 2021. https://www.nature.com/articles/s41467-021-25637-w#citeas.

Image Citations

Fogetti, Fernanda. “Hand Talk announces groundbreaking technology for movement recognition in sign languages”. Photograph. Handtalk. February 17, 2022.

Picheta, Rob. “This new high-tech glove translates sign language into speech in real time”. Photograph. CNN. July 1, 2020.

Pettit, Claire. “Student Sign Language Interpreters Help Deaf Community Access Music – The Huntington News.” The Huntington News, March 28, 2019. Photograph. https://huntnewsnu.com/58647/campus/student-sign-language-interpreters-help-deaf-community-access-music/.