Written by Kate Maffey

Anyone who has needed some quick help in another language knows the drill – just open up Google Translate. While it is common knowledge that it will not be perfect, it is better than nothing, and has helped countless people communicate across linguistic and cultural divides. Despite its usefulness, there is not widespread adoption of machine translation technology across the museum industry.

In a world where Siri can set alarms, give us directions, and look things up, shouldn’t machine translation be better by now? How does machine translation work? Why are museums still using human translation? And most importantly, what happens when machine translation is good enough that museums and other arts enterprises can use it without human oversight?

Figure 1: “Learning Languages.” Source: Roselinde Alexandra.

This article explores how machine translation works and how it came to be in its current state, as well as how well state-of-the-art machine translation technology is evaluated. Finally, this article examines how improvements in machine translation could disrupt and transform the museum industry.

How does machine translation work?

Underpinning machine translation is a field called natural language processing, or NLP for short. This area of study uses tools of computational linguistics combined with modern advances in computer science in order to “learn, understand, and produce human language content.” While NLP has progressed greatly since its inception, one foundational technique that is still used in some forms today is called “bag of words”, where all the words in a document are added up and each word’s frequency is calculated. This concept is a great illustration of many of the techniques used in NLP – counting words and calculating their relationship to other words within the same document or within a corpus of documents.

Two other concepts to highlight within NLP are part-of-speech tagging and named entity recognition. Part-of-speech tagging is a computational linguistics tool in which descriptors (the tags) are assigned to each word’s role within a sentence. This allows for semantic understanding of the role each word plays, which is critical to grokking (understanding) the full meaning of a sentence. Another important concept in NLP is named entity recognition, which is when a word or phrase that contains important information is identified and categorized. In order to understand a sentence, humans need to be able to differentiate a name or location as distinct from an ordinary noun or pronoun, and named entity recognition allows for that.

The techniques described above offer a window into how the field of natural language processing works and are illustrative of concepts integral to machine translation. Machine translation historically used statistical learning techniques similar to those described above. Launched in April 2006, Google Translate used a corpus of billions of words in both the origin and target languages to help craft computer-generated translations. While it is not the only company offering cutting-edge translation technology, Google has been an industry leader for many years.

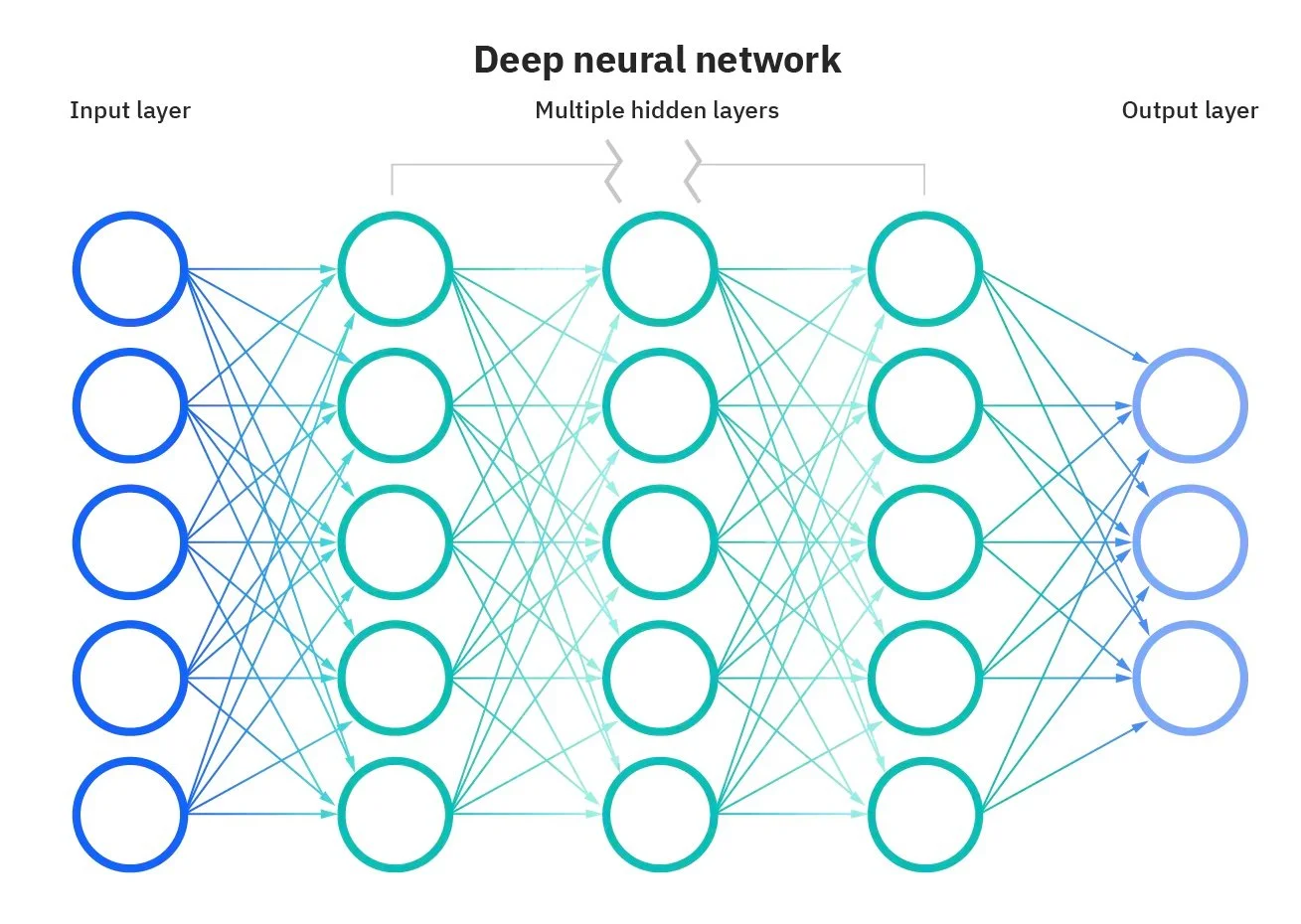

With improvement in computing hardware as well as machine learning technology, Google was able to improve its translation service. In 2016, it launched a new version of its machine translation service that used neural machine translation instead of statistical models. Artificial neural networks are a type of machine learning algorithm that has quickly become useful in many industries. Using neural machine translation means that the model itself is made up of layers and layers of simple linear regression models. By combining these simple models, it mimics the way a human brain works in sending, translating, and receiving signals (hence why these models are referred to as “neural networks”).

Figure 2: How Neural Networks work. Source: IBM.

While Google’s current translation model still uses neural machine translation, the company has made strides in other areas of machine translation. Previously, copious amounts of data in every language that they wanted to translate was required, but in using neural network machine learning techniques, its models can now perform better on low-resource languages. This means that the models perform well even if they were not trained on much data for that language. In 2019, Google released a massively multilingual, massive neural machine translation (M4) that was pre-trained on over 25 billion sentence pairs and as a result, it performed even better on low-resource languages. Google has continued to focus on improving this low-resource language ability in order to expand machine translation offerings, releasing an updated model in 2020.

How do we know machine translation is improving?

Machine translation works by taking natural language processing techniques, training a complex model with many layers of simpler models, and then inputting new text on which the model uses what it has learned. But how do researchers know that machine translation is improving?

There are a few metrics that exist for machine translation, and Google uses one called BLEU, which stands for “bilingual evaluation understudy.” The paper establishing the method was published in 2002, but despite its age, the metric is still being used to evaluate cutting-edge language models. BLEU calculates a translation closeness score by evaluating each sentence as a unit and comparing them on a weighted average of words, allowing for other word choices that make sense in context. The score is then reported as a number between 0 and 1, with 1 being the highest score possible. Google has continued to evaluate its models using BLEU, allowing updated models to be easily compared to their predecessors.

Fogure 3: Image of lines of text written in different languages. Source: nofrills.

Despite the progress that has been made, it is helpful to track progress by comparing machine translation capabilities with natural language processing tools that we use in English daily. As mentioned, people often take speech-to-text for granted on their devices, or that they can interface with a chatbot on a website. Notably, a company in Canada called Cohere just raised $125 million to perform similar NLP tasks, including content moderation, conversational artificial intelligence, and search support. Another example of the expansion of English language model tools comes in the form of a startup called Forefront. Two weeks after the launch of an open-source large language model called GPT-NeoX-20b, Forefront announced that it was offering fine-tuning services to make the use of GPT-NeoX-20b more accessible. All these markers of progress demonstrate that NLP in English is rapidly advancing and expanding.

Machine translation is not good enough – Yet

If AI can write papers and chat with humans, why is it unable to fluently translate into any language without errors? One of the shortcomings of large language models is that they require copious amounts of data to be effective. While there has been considerable effort to train machine translation models, there is not as much training data available in many languages as there are in English. Despite Google’s best efforts to train its translation models to perform well on low-resource languages, they still aren’t nearly as good as the models that use English. Not only are the models not as refined, but they are also insufficient for professional use – even in high-resource languages. As researcher Aihua Zhu states, current machine translation technology fails to meet the needs of use in professional contexts.

Despite the clear advantage that English has over other languages, there are still numerous shortcomings that exist in English language models. Many of them were trained on text from the internet, therefore, they tend to have biases such as stereotypes towards people of minority gender or ethnic identities. A recent illustration of this is YouTube’s automated captioning service – it was spotted using profanity in captions of videos for young children. So while language models in English perform well enough to be useful to large swathes of society, they still have limitations. Comparatively, the limitations of multilingual language models are greater and do not allow for as much integration into high-level societal tasks, although they are useful for many people (in 2016, Google stated that Google Translate has 500 million users and translates over 1 billion words a day).

A Useful Tool

Regardless of its shortcomings, Natural Language Processing has made profound developments within the past 5 or 6 years. While there is still much to be improved on, its integration into everyday life personally and professionally shows that this technology will only experience improvement in the future.

+ Resources

Axiell. n.d. “Axiell – Bringing Culture and Knowledge to Life.” Axiell. Accessed March 2, 2022a. https://www.axiell.com/.

Axiell. n.d. “Interface Functionality: Editing or Translating Adlib Interface Texts.” Accessed March 2, 2022b. http://documentation.axiell.com/alm/en/ds_tmeditingtranslatingtexts.html#autotranslate.

Axiell. n.d. “Translations Manager: Introduction.” Accessed March 2, 2022c. http://documentation.axiell.com/alm/en/ds_tmintroduction.html.

Bapna, Ankur, and Orhan Firat. 2019. “Exploring Massively Multilingual, Massive Neural Machine Translation.” Google AI Blog (blog). October 11, 2019. http://ai.googleblog.com/2019/10/exploring-massively-multilingual.html.

Chen, Chia-Li, and Min-Hsiu Liao. 2017. “National Identity, International Visitors: Narration and Translation of the Taipei 228 Memorial Museum.” Museum and Society 15 (1): 56–68. https://doi.org/10.29311/mas.v15i1.662.

“Cohere.” n.d. Cohere. Accessed March 2, 2022. https://cohere.ai.

David C. Brock. 2022. “A Museum’s Experience With AI.” CHM. February 3, 2022. https://computerhistory.org/blog/a-museums-experience-with-ai/.

Eriksen Translations Inc. 2019. “Museum Audience Engagement: Translation Strategies to Promote Diversity.” Eriksen Translations Inc. September 19, 2019. https://eriksen.com/arts-culture/museum-audience-engagement-translation-strategies/.

Eriksen Translations Inc. n.d. “Translating and Typesetting the Met Guides into 6 Languages.” Eriksen Translations Inc. Accessed March 2, 2022a. https://eriksen.com/work/met-guides/.

Eriksen Translations Inc. n.d. “Translating Exhibition Materials for the South Florida Science Center.” Eriksen Translations Inc. Accessed March 2, 2022b. https://eriksen.com/work/exhibition-displays/.

Eriksen Translations Inc. n.d. “Translating the Garden Tool Mobile Website for Denver Botanic Gardens.” Eriksen Translations Inc. Accessed March 2, 2022c. https://eriksen.com/work/garden-tool-mobile-website/.

Eriksen Translations Inc. n.d. “Translation & Localization Services NYC | Eriksen Translations.” Eriksen Translations Inc. Accessed March 2, 2022d. https://eriksen.com/.

“Forefront: Fine-Tune and Deploy GPT-J, GPT-13B, and GPT-NeoX.” n.d. Accessed March 2, 2022. https://www.forefront.ai/helloforefront.com/.

Field Museum. 2018. “About.” Text. Field Museum. May 14, 2018. https://www.fieldmuseum.org/about.

Furui, S., T. Kikuchi, Y. Shinnaka, and C. Hori. 2004. “Speech-to-Text and Speech-to-Speech Summarization of Spontaneous Speech.” IEEE Transactions on Speech and Audio Processing 12 (4): 401–8. https://doi.org/10.1109/TSA.2004.828699.

Goel, Aman. 2018. “How Does Siri Work? The Science Behind Siri.” Magoosh Data Science Blog (blog). February 3, 2018. https://magoosh.com/data-science/siri-work-science-behind-siri/.

Hirschberg, Julia, and Christopher D. Manning. 2015. “Advances in Natural Language Processing.” Science 349 (6245): 261–66. https://doi.org/10.1126/science.aaa8685.

IBM Cloud Education. 2021. “What Are Neural Networks?” August 3, 2021. https://www.ibm.com/cloud/learn/neural-networks.

Lalwani, Tarun, Shashank Bhalotia, Ashish Pal, Vasundhara Rathod, and Shreya Bisen. 2018. “Implementation of a Chatbot System Using AI and NLP.” SSRN Scholarly Paper ID 3531782. Rochester, NY: Social Science Research Network. https://doi.org/10.2139/ssrn.3531782.

Leahy, Connor. 2022. “Announcing GPT-NeoX-20B.” EleutherAI Blog. February 2, 2022. https://blog.eleuther.ai/announcing-20b/.

Liao, Min-Hsiu. 2018. “Museums and Creative Industries: The Contribution of Translation Studies.” January 2018. https://jostrans.org/issue29/art_liao.php.

Marshall, Christopher. 2020. “What Is Named Entity Recognition (NER) and How Can I Use It?” Super.AI (blog). June 2, 2020. https://medium.com/mysuperai/what-is-named-entity-recognition-ner-and-how-can-i-use-it-2b68cf6f545d.

Martin, Jenni, and Marilee Jennings. 2015. “Tomorrow’s Museum: Multilingual Audiences and the Learning Institution.” Museums & Social Issues 10 (1): 83–94. https://doi.org/10.1179/1559689314Z.00000000034.

Matas, Ariadna. 2020. “OpenGLAM Translation Sprint at Europeana 2020.” Europeana Pro. September 2020. https://pro.europeana.eu/event/openglam-translation-sprint-at-europeana-2020.

Melisa Palferro. 2018. “Different Approaches to Museum Translation.” #ucreatewetranslate (blog). March 8, 2018. https://www.ucreatewetranslate.com/different-approaches-museum-translation/.

Menon, Yasmin, and Will Lach. 2021. “Creating Accessibility in Museums with High Quality Translations.” Eriksen Translations Inc. November 19, 2021. https://eriksen.com/arts-culture/accessibility-museums-high-quality-translations/.

Mingyu, Lu, and Si Xianzhu. 2010. “Application of Machine Translation to Chinese-English Translation of Relic Texts in Museum.” In 2010 International Conference on Intelligent System Design and Engineering Application, 1:355–58. https://doi.org/10.1109/ISDEA.2010.4.

Mitkov, Ruslan. 2004. The Oxford Handbook of Computational Linguistics. OUP Oxford. https://books.google.com/books?hl=en&lr=&id=yl6AnaKtVAkC&oi=fnd&pg=PA219&dq=part+of+speech+tagging&ots=_VVi1buLDj&sig=y-3BFecOVtTtptCNFrS8Br-EFGE#v=onepage&q=part%20of%20speech%20tagging&f=false.

MTAAC Team. n.d. “Machine Translation and Automated Analysis of Cuneiform Languages.” Machine Translation and Automated Analysis of Cuneiform Languages. Accessed March 2, 2022. https://cdli-gh.github.io/mtaac/.

Multilingual Connections. n.d. “Museum Translation Services.” Multilingual Connections. Accessed March 2, 2022. https://multilingualconnections.com/industry-experience/museums-cultural-institutions/.

Nadeem, Moin, Anna Bethke, and Siva Reddy. 2020. “StereoSet: Measuring Stereotypical Bias in Pretrained Language Models.” ArXiv:2004.09456 [Cs], April. http://arxiv.org/abs/2004.09456.

Och, Franz. n.d. “Statistical Machine Translation Live.” Google AI Blog (blog). Accessed March 2, 2022. http://ai.googleblog.com/2006/04/statistical-machine-translation-live.html.

Papineni, Kishore, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. “Bleu: A Method for Automatic Evaluation of Machine Translation.” In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, 311–18. Philadelphia, Pennsylvania, USA: Association for Computational Linguistics. https://doi.org/10.3115/1073083.1073135.

Scott, Josh. 2022. “Cohere Closes $125 Million USD Series B Round Led by Tiger Global.” BetaKit (blog). February 15, 2022. https://betakit.com/cohere-closes-125-million-usd-series-b-round-led-by-tiger-global/.

Siddhant, Aditya, Melvin Johnson, Henry Tsai, Naveen Ari, Jason Riesa, Ankur Bapna, Orhan Firat, and Karthik Raman. 2020. “Evaluating the Cross-Lingual Effectiveness of Massively Multilingual Neural Machine Translation.” Proceedings of the AAAI Conference on Artificial Intelligence 34 (05): 8854–61. https://doi.org/10.1609/aaai.v34i05.6414.

Simonite, Tom. 2022. “YouTube’s Captions Insert Explicit Language in Kids’ Videos.” Wired, February 24, 2022. https://www.wired.com/story/youtubes-captions-insert-explicit-language-kids-videos/?utm_source=nl&utm_brand=wired&utm_mailing=WIR_FastForward_022822&utm_campaign=aud-dev&utm_medium=email&utm_content=WIR_FastForward_022822&bxid=60a682d211af1a6455755091&cndid=65158197&esrc=bouncexmulti_second%20&source=EDT_WIR_NEWSLETTER_0_TRANSPORTATION_ZZ&mbid=mbid%3DCRMWIR012019%0A%0A&utm_term=WIR_Transportation.

Turovsky, Barak. 2016a. “Ten Years of Google Translate.” Google. April 28, 2016. https://blog.google/products/translate/ten-years-of-google-translate/.

Turovsky, Barak. 2016b. “Found in Translation: More Accurate, Fluent Sentences in Google Translate.” Google. November 15, 2016. https://blog.google/products/translate/found-translation-more-accurate-fluent-sentences-google-translate/.

Zhu, Aihua. 2021. “Man-Machine Translation—Future of Computer-Assisted Translation.” Journal of Physics: Conference Series 1861 (1). http://dx.doi.org/10.1088/1742-6596/1861/1/012088.