Written by Xiaocheng Ma

Museums and cultural heritage attractions have faced new challenges since the pandemic began. Before the pandemic, many museums, especially children’s museums and tech museums, installed touch screens and buttons for visitors to manipulate. During an $80 million renovation of its building and facilities in 2011, the New York Historical Society invested heavily in touch screen stations. Now these investments may not deliver the return they deserve, because people may be reluctant to touch anything in public due to COVID-19. Janice Majewski, a museum consultant at the Institute for Human-Centered Design, said that museums are now exploring multi-sensory features and gesture-based interactives. As they embrace change and technological upgrades, museums should understand both the benefits and the problems of gesture-based interactives.

Background

Gesture-based interaction is known technically as kinesthetic interaction or embodied interaction. It can be divided into distinct styles, including whole-body interaction, mid-air interaction, and bare-hand interaction. Two of the leading devices for kinesthetic interaction are the Microsoft Kinect and the Leap Motion. The Kinect focuses on whole-body interaction, tracking body motion and gestures, while the Leap Motion tracks only the user’s hand motions.

Kinesthetic interaction is not yet common in museums and cultural heritage sites, but all three types of interaction have been tried by a limited number of tech-savvy arts and culture organizations to engage and entertain their audiences. In the Leap Motion app store, only four out of 131 apps are for the arts. Although gesture-based interaction is still uncommon in museums and arts organizations, the current crisis has been pushing touchless technology and design approaches forward.

Mid-Air Interaction

In mid-air interaction, users make gestures and motions in mid-air with their bodies, especially their hands, to interact with a distant display, a computer, or a kiosk screen. The Leap Motion controller is widely used in the industry as a sensing device to capture hand movements. The controller can be connected to a range of systems, including PCs, Macs, Linux machines, and screen displays. When the user’s hands are detected in mid-air, the Leap Motion sensor converts their position into an on-screen cursor. As long as the hands stay within the controller’s interaction area, their gestures are passed to the computer system as input. Many of the mid-air interactives used in museums rely on Leap Motion sensors.
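The mapping from a tracked hand to an on-screen cursor can be illustrated with a minimal Python sketch. It does not use the Leap Motion SDK. The `InteractionArea` bounds, the function name `hand_to_cursor`, and the millimetre coordinates are all assumptions made for illustration, and a real sensor reports its own interaction volume.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class InteractionArea:
    """Hypothetical sensing region above the sensor, in millimetres."""
    x_min: float = -120.0  # left edge
    x_max: float = 120.0   # right edge
    y_min: float = 80.0    # lowest tracked height
    y_max: float = 320.0   # highest tracked height


def hand_to_cursor(
    hand_x: float,
    hand_y: float,
    area: InteractionArea,
    screen_w: int,
    screen_h: int,
) -> Optional[Tuple[int, int]]:
    """Map a hand position to a pixel, or None if the hand is out of range."""
    inside = (area.x_min <= hand_x <= area.x_max
              and area.y_min <= hand_y <= area.y_max)
    if not inside:
        return None  # no cursor while the hand is outside the interaction area

    # Normalize each axis to the range 0..1 within the interaction area.
    u = (hand_x - area.x_min) / (area.x_max - area.x_min)
    v = (hand_y - area.y_min) / (area.y_max - area.y_min)

    # Screen y grows downward, so a raised hand maps to the top of the screen.
    px = round(u * (screen_w - 1))
    py = round((1.0 - v) * (screen_h - 1))
    return px, py


if __name__ == "__main__":
    area = InteractionArea()
    print(hand_to_cursor(-120.0, 320.0, area, 1920, 1080))  # top-left corner
    print(hand_to_cursor(200.0, 200.0, area, 1920, 1080))   # outside the area
```

Returning `None` outside the area mirrors the behavior described above: gestures only reach the system while the hands remain in the controller's interaction area.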

Figure 1: Leap Motion controller links to a computer. Source: IdeaConnection.

Figure 2: Interactive area of a Leap Motion controller. Source: Leap Motion.

In 2019, the Mississippi Arts + Entertainment Experience (The MAX) in Meridian, Mississippi, designed a mid-air interaction experience for visitors to make pottery in the style of famous potters.

Figure 3: The mid-air interaction experience at the MAX. Source: Vimeo.

The technology features guided gestural and screen interaction so that visitors can follow the process that famous artists used to make pottery. On a gesture-sensing potter’s wheel, visitors move their hands in mid-air to rub and pinch the clay on the kiosk screen into the shape they like.

In 2016, the Museum of Cycladic Art in Greece experimented with mid-air interaction together with researchers from the University of the Aegean, Greece. They tested the Cycladic sculpture application, which places the user in the role of an ancient craftsman or sculptor. The application works much like the pottery application designed by the MAX: users move their hands in front of the sensor to manipulate a “virtual hand” on the screen that constructs the figurine as ancient sculptors did.

Figure 4: Pictures of a child participating in the experiment. Source: Koutsabasis and Vosinakis.

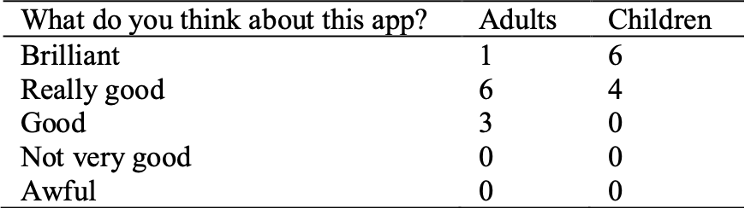

In the experiment, the researchers collected data on user satisfaction and impressions of the application, as summarized in Figure 5.

Figure 5: Participant responses from mid-air interaction experiment. Source: Koutsabasis and Vosinakis.

All of the participants rated the experience highly. Six out of ten adult participants thought that the application was really good, while six out of ten child participants thought it was brilliant.

Although users were satisfied with the experience, the researchers detected several technical issues, which indicate that the technology still needs adjustment and improvement. Sixty-five percent of the participants mentioned that the sensors lack depth perception, 45 percent experienced hand track loss during the interaction, and 50 percent missed the target when it was at the edge of the screen. Based on this feedback, the researchers developed an optimized plan for using mid-air interactives in the museum.

Whole-Body Interaction

Whole-body interaction refers to the use of body movements and postures to interact with digital content on distant displays.

Figure 6: Illustration of whole-body interaction. Source: “Kinesthetic interaction.”

A simple demonstration of how whole-body interaction works is shown in the figure above. Sensors can be placed on the body or in the environment. While sensors capture the user's movements and send inputs to computers, actuators convert inputs to outputs and produce human-perceivable elements such as sounds and images.

Ideum is a creative company based in Corrales, New Mexico, that creates digital experiences and touchless exhibits. Since 2015, the Ideum team has been making whole-body interactives for museums and other organizations using the Microsoft Kinect sensor.

Its first gesture-based exhibit was Be a Bug at the ABQ BioPark. In the exhibit, visitors choose a virtual identity as a bug and stand in a designated area where sensors capture their body movements. They can then wave and flap their arms as if they were a real bug flying from one food source to another. Although the Ideum team has admitted that the exhibit has design flaws, there are always lines in front of the interactive screen.

Figure 7: Children participating in Ideum’s Usability Lab. Source: Ideum.

Be a Bug is an example of a single-user interactive experience. Ideum has also been developing exhibits that are not limited to the size of a screen. The ultimate goal is to create multi-user, motion-based exhibits and immersive environments for groups of visitors. In 2019, the team designed a gesture-based exhibit set in a three-sided room for the Cayton Children’s Museum. The room features a mountain meadow scene with animated 2-D artwork and touchless interactions meant to teach children about nature. The exhibit uses Orbbec Astra motion-sensing devices. Rather than capturing nuanced full-body gestures or poses, the devices are mounted on the ceiling to track gross movement and activity.
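Tracking gross movement, as opposed to individual poses, can be illustrated with a minimal Python sketch that compares two depth frames from an overhead sensor. It does not use the Orbbec SDK and is not Ideum's implementation. The function name `activity_level`, the frame layout (a grid of distances in millimetres), and the 50 mm threshold are assumptions made for illustration.

```python
from typing import List

DepthFrame = List[List[int]]  # rows of distances from the sensor, in mm


def activity_level(prev: DepthFrame, curr: DepthFrame,
                   min_change_mm: int = 50) -> float:
    """Return the fraction of cells whose depth changed noticeably.

    A value near 0.0 means the room is still; larger values mean more
    of the floor area has someone moving through it.
    """
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for d_prev, d_curr in zip(row_prev, row_curr):
            total += 1
            if abs(d_curr - d_prev) >= min_change_mm:
                changed += 1
    return changed / total if total else 0.0


if __name__ == "__main__":
    empty_floor = [[3000, 3000], [3000, 3000]]
    child_enters = [[3000, 1800], [3000, 3000]]  # one cell is now closer
    print(activity_level(empty_floor, child_enters))
```

A coarse measure like this says nothing about who is moving or how, which is the trade-off the ceiling-mounted approach accepts in exchange for supporting groups of visitors.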

Figure 8: Ideum’s interactive immersive room at Cayton Children’s Museum. Source: YouTube.

Bare-Hand Interaction

Bare-hand interaction refers to the use of the hands, palms, and fingers, mainly for desktop or kiosk-oriented interactions. In major museums and cultural heritage sites, this has traditionally meant touch screen technology, but it is now undergoing revolutionary change.

In September 2020, Ideum announced the release of Touchless Design SDK Version 1.0, now available on GitHub. By tracking the visitor's hand and specific gestures, such as opening and closing, the system gives the user complete control over the mouse without touching the screen. Ideum published the list of required equipment and the code for free so that museums, cultural organizations, and other public institutions can apply the technology post-pandemic.
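The idea of driving the mouse with an opening and closing hand can be illustrated with a minimal Python sketch. It is not code from the Touchless Design SDK. The function name `grab_to_mouse_events`, the 0-to-1 "grab strength" input, and both thresholds are assumptions made for illustration. Using two separate thresholds keeps a half-closed hand from triggering repeated clicks.

```python
from typing import Iterable, List, Tuple


def grab_to_mouse_events(
    grab_strengths: Iterable[float],
    close_at: float = 0.8,  # hand counts as closed at or above this value
    open_at: float = 0.3,   # hand counts as open at or below this value
) -> List[Tuple[int, str]]:
    """Turn per-frame grab strength (0 = open, 1 = fist) into mouse events.

    Returns (frame_index, "press") when the hand closes and
    (frame_index, "release") when it opens again.
    """
    events: List[Tuple[int, str]] = []
    pressed = False
    for frame, strength in enumerate(grab_strengths):
        if not pressed and strength >= close_at:
            pressed = True
            events.append((frame, "press"))
        elif pressed and strength <= open_at:
            pressed = False
            events.append((frame, "release"))
    return events


if __name__ == "__main__":
    frames = [0.1, 0.5, 0.9, 0.7, 0.85, 0.2, 0.1]
    print(grab_to_mouse_events(frames))
    # [(2, 'press'), (5, 'release')]
```

Here the dip to 0.7 in the fourth frame does not release the button, because the hand has not opened past the lower threshold.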

Ideum has also been working with the National Gallery of Art in Washington, D.C., to develop a touchless kiosk that will guide visitors through the museum’s collection with just a wave of the hand. The kiosk will resemble the traditional touch screen displays found in museums, but it will use an Intel RealSense D435 depth camera and a Leap Motion controller for touchless gesture recognition. Museums do not need to spend thousands of dollars to adopt this technology: the Intel RealSense D435 depth camera costs around $200 and the Leap Motion controller costs $80.

Advantages and Disadvantages

Sources differ on the advantages and disadvantages of gesture-based technology for museums and arts organizations. Derpanis argues that traditional interfaces and touch-based interactions have reached a bottleneck in application development. Even before the pandemic, users had grown accustomed to touch-based interfaces and were sometimes bored by these devices, or even ignored them, in a museum setting.

Because the technology is still used in relatively few museums, there are no large-scale statistics or studies on user demographics and experiences. The small-scale data that researchers have summarized, however, suggest that most users rate their gesture-based interactive experience highly, with children being more satisfied than adults. Users who gave feedback on some of the exhibits produced by Ideum described them as responsive, interactive, entertaining, and fun.

Two of the most obvious advantages of gesture-based interactives are that (1) they offer an alternative to touch screen interactives, which the public may be reluctant to use after the pandemic, and (2) they are more entertaining and can easily engage users of different ages.

Koutsabasis and Vosinakis suggest that, for museums and cultural heritage attractions, the technology can be critical for educational purposes, especially for highlighting intangible aspects of cultural heritage such as habits, rituals, and everyday activities. Compared with describing these activities in words and paragraphs on a touch screen, gesture-based interaction is a more user-friendly way to let visitors experience intangible cultural heritage.

Additionally, because there are already mature technology companies in this field, the technology is not particularly expensive for museums to adopt. According to the information published by Ideum on GitHub, the total price of the sensors and cameras required for bare-hand interaction does not exceed $300. The larger expenses are likely to be the display screens and the creative content to be displayed.

Although this technology has many advantages and good prospects, it still has some problems, and museums and technical designers need to keep several points in mind when designing these interactive experiences. Jim Spadaccini, the founder of Ideum, points out that the instructions for gestures must be simple and clear or they will overwhelm visitors. Another concern is the precision of the sensors and the output image. Koutsabasis and Vosinakis note in their research that users sometimes experienced hand track loss and missed targets during the interaction. Visitors are also easily disappointed when some of their movements are not recognized, so technical precision is always a fundamental part of the application.

Resources

Jacobs, Julia. “No Touch, No Hands-On Learning, for Now, as Museums Try to Reopen.” The New York Times. The New York Times, May 29, 2020. https://www.nytimes.com/2020/05/29/arts/design/museums-interactive-coronavirus.html.

Fogtmann, Maiken Hillerup, Jonas Fritsch, and Karen Johanne Kortbek. “Kinesthetic Interaction: Revealing the Bodily Potential in Interaction Design.” Proceedings of the 20th Australasian Conference on Computer-Human Interaction Designing for Habitus and Habitat - OZCHI '08, 2008.

Koutsabasis, Panayiotis, and Spyros Vosinakis. “Adult and Children User Experience with Leap Motion in Digital Heritage: The Cycladic Sculpture Application.” Digital Heritage. Progress in Cultural Heritage: Documentation, Preservation, and Protection, 2016, 350–61.

England, D. (2011). Whole body interaction: An introduction. In Whole Body Interaction. Springer London.

Koutsabasis, Panayiotis, and Panagiotis Vogiatzidakis. “Empirical Research in Mid-Air Interaction: A Systematic Review.” International Journal of Human–Computer Interaction 35, no. 18 (2019): 1747–68.

Blooloop, “COVID Hands off Museum Experiences: New Technology.” January 18, 2021. https://blooloop.com/museum/opinion/covid19-hands-off-museum-experiences/.

Ideum, “About”, Accessed April 13, 2021. https://ideum.com/about.

Leap Motion App Store, Accessed April 13, 2021, https://apps.leapmotion.com/.

Jim Spadaccini, “Touchless Gesture-Based Exhibits, Part Two: Full-Body Interaction,” Ideum (Ideum, April 22, 2020), https://ideum.com/news/touchless-interaction-public-spaces-part2.

Github, “Ideum Touchless Design-SDK” Accessed April 13, 2021, https://github.com/ideum/Touchless.Design-SDK.

Kiosk Marketplace, “Ideum Teams with National Gallery of Art on Touchless Kiosk,” July 14, 2020, https://www.kioskmarketplace.com/news/ideum-teams-with-national-gallery-of-art-on-touchless-kiosk/.

Nadia Adona, “Ideum Rolls Out Touchless Design Open-Source Software,” SEGD, September 30, 2020, https://segd.org/ideum-rolls-out-touchless-design-open-source-software.

Rachel Metz, “Look Before You Leap Motion,” MIT Technology Review (MIT Technology Review, April 2, 2020), https://www.technologyreview.com/2013/07/22/177267/look-before-you-leap-motion/.

Von Hardenberg, C. and Bérard, F., 2001, November. “Bare-hand human-computer interaction.” In Proceedings of the 2001 Workshop on Perceptive User Interfaces. pp1-8.

Derpanis, K.G. (2004). “A Review of Vision-Based Hand Gestures.” Unpublished, internal report. Department of Computer Science, York University. February 12, 2004.

Jim Spadaccini, “Touchless Gesture-Based Exhibits, Part One: High-Fidelity Interaction,” Ideum (Ideum, April 10, 2020), https://ideum.com/news/gesture-interaction-public-spaces-part1.

MuseumNext, “Corona-Proofing Museum Interactives,” March 30, 2021, https://www.museumnext.com/article/corona-proofing-museum-interactives/.

Bethan Ross, “Is This the End of Touchscreens in Museums? The Use of Touchless Gesture-Based Controls,” Medium (Science Museum Group Digital Lab, June 18, 2020), https://lab.sciencemuseum.org.uk/is-this-the-end-of-touchscreens-in-museums-the-use-of-touchless-gesture-based-controls-ee3f3c3f37ce.

Interactive Knowledge Blog, “Revisiting Touchscreen Interactives in the Time of Coronavirus,” accessed April 13, 2021, https://interactiveknowledge.com/insights/revisiting-touchscreen-interactives-time-coronavirus.

Smithsonian, “The Cleveland Museum of Art Wants You To Play With Its Art,” (Smithsonian Institution, February 5, 2018), https://www.smithsonianmag.com/innovation/cleveland-museum-art-wants-you-to-play-with-its-art-180968007/.

Jim Spadaccini, “Touchless Gesture-Based Exhibits, Part Three: Touchless Design,” Ideum (Ideum, July 9, 2020), https://ideum.com/news/touchless-interaction-public-spaces-part3.