By: Kristine Yan

Introduction

Throughout history, the healing power of music, for both emotional and physical well-being, has been well documented. In the early 20th century, musicians flocked to hospitals to play for war-weary soldiers returning from the battlefield. The soldiers’ positive response gave rise to the profession of music therapy. Fast forward to the 21st century: advances in technology and the spread of smart devices have revolutionized the way we engage with music, making music therapy more accessible than ever before. Patients can now take part in music therapy through smartphones or computers, breaking down geographical barriers. The profession itself has matured, with mandatory qualifications and standardized practices. These developments have paved the way for the emergence and growth of AI music therapy.

Dr. Cynthia Colwell (top right) teaching a class titled “Orff Applications in Music Education and Music Therapy.”

Source: Christine Metz Howard / KU School of Music

Two Facets of Music Therapy

Music therapy comes in two distinct forms: active and passive. The former includes making music, composing, and songwriting; the latter involves receptive experiences such as listening to live or recorded music. While research has yet to establish conclusively which approach is superior for all patients, active music therapy shows a stronger influence in specific domains and illnesses. For example, an intervention study on music therapy for dementia patients observed that active interventions, particularly music-making, led to significant brain plasticity. These interventions not only had a more substantial impact on patients but also enhanced cognitive processing efficiency, as revealed by fMRI scans. Similarly, a study on therapeutic interventions for autistic children showed that active participation in music, as opposed to passive listening, engaged more brain regions, contributing to improvements in speech and gesture skills.

Challenges of Traditional Music Therapy

A lack of confidence in their own creative abilities, together with the associated costs, often stops patients from embracing active music therapy. Songwriting and performance typically require a musical background; when it is lacking, both therapy outcomes and a patient’s self-assurance can suffer. Furthermore, the high price of traditional therapy sessions, which averages $110 per hour, is often a major obstacle. In contrast, AI music therapy platforms such as Waterpaths offer a cost-effective and customizable alternative. With basic memberships priced at $360 per year, these platforms are positioned to give users greater flexibility and accessibility. Art institutions that implement this technology stand to benefit as well, since it can reduce costs across their traditional operations, including management, personnel, and operating expenditures. The field of arts management plays an important role in facilitating these improvements.

Traditional music therapy encounters three significant challenges: personalization, cost, and the absence of active therapy. In this market landscape, AI music therapy emerges as a formidable contender due to its ability to offer full customization based on a patient’s specific condition and preferences. It is user-friendly, more accessible for beginners, and more cost-effective.
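To make the price gap concrete, here is a rough sketch of the arithmetic using only the two figures quoted above ($110 per hour and $360 per year). The session schedule in the example, one hour per month, is a hypothetical assumption for illustration and does not come from any of the cited sources.

```python
# Figures quoted in the article (USD).
TRADITIONAL_RATE_PER_HOUR = 110.0
AI_MEMBERSHIP_PER_YEAR = 360.0


def traditional_annual_cost(hours_per_year: float) -> float:
    """Yearly cost of traditional sessions at the quoted hourly average."""
    return TRADITIONAL_RATE_PER_HOUR * hours_per_year


def break_even_hours() -> float:
    """Hours of traditional therapy that cost as much as one year's membership."""
    return AI_MEMBERSHIP_PER_YEAR / TRADITIONAL_RATE_PER_HOUR


if __name__ == "__main__":
    # Hypothetical schedule: one hour-long session per month (an assumption).
    hours = 12
    print(f"Traditional, {hours} h/year: ${traditional_annual_cost(hours):,.2f}")
    print(f"AI membership, 1 year: ${AI_MEMBERSHIP_PER_YEAR:,.2f}")
    print(f"Break-even: about {break_even_hours():.1f} hours of traditional therapy")
```

At these prices, a year of basic membership costs about as much as a little over three hours of traditional sessions. The comparison covers price only and says nothing about how the two forms of therapy compare in effect.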

Advancements in AI Music Therapy

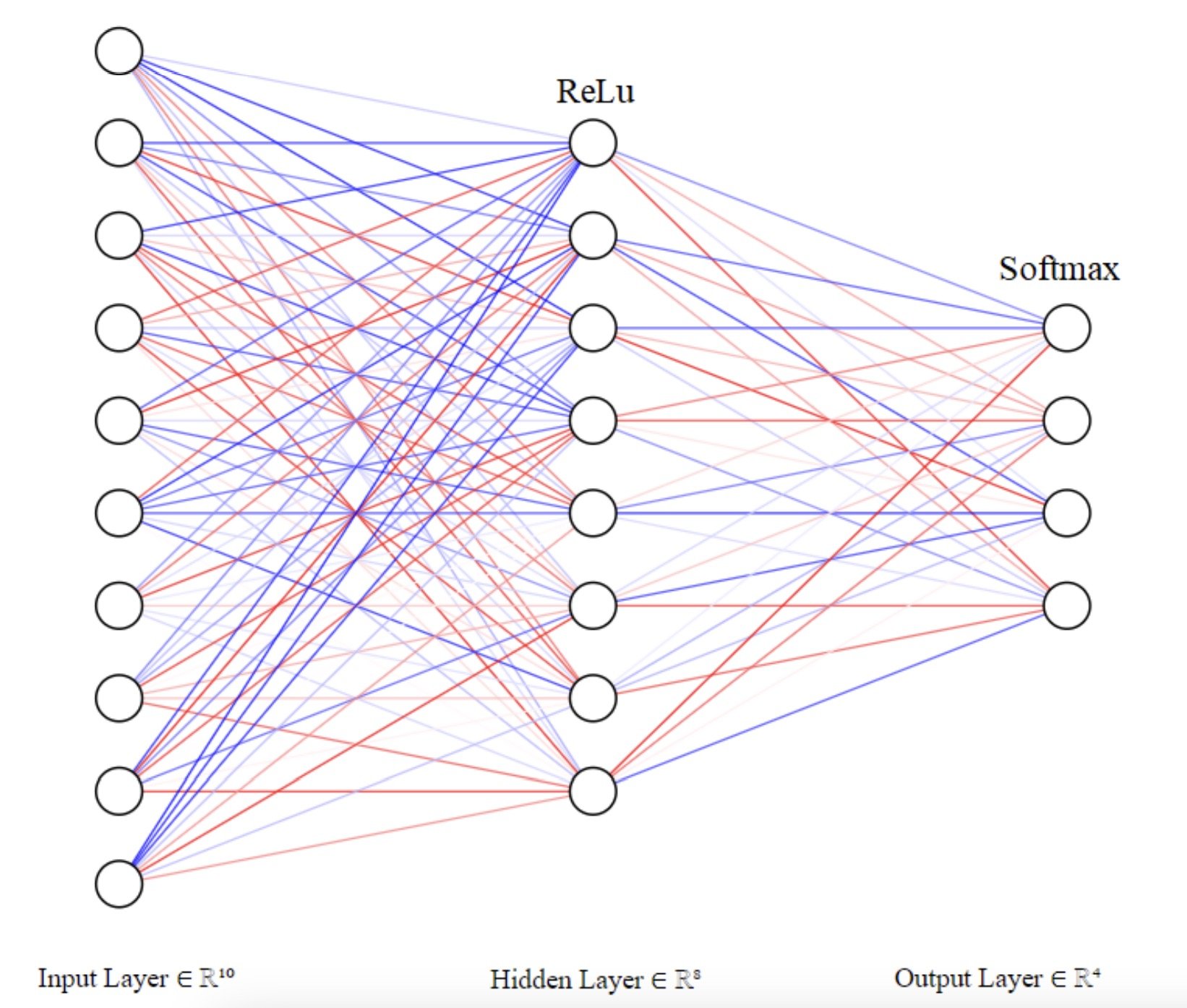

Research on AI music therapy provides the basis for realizing this technology and bringing it to the mass market. A report by Horia Alexandru Modran and colleagues explores the potential of artificial intelligence and machine learning to predict the therapeutic benefits of specific songs for individuals. The report describes a machine learning model that predicts whether a particular song will be therapeutically effective for a specific person, taking into account musical and emotional characteristics as well as solfeggio frequencies. The model classifies music into four emotional categories: happy, sad, energetic, or calm. It achieves an accuracy exceeding 94% and delivers promising results when tested on various individuals.

Screenshot of Neural Network Architecture (Photo by author)

Source: Horia Alexandru Modran
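For readers curious what sorting songs into the four emotional categories above might look like in code, the following is a deliberately simplified sketch. It is not the neural network from the report: it swaps in a nearest-centroid rule, and the two features (`valence` and `energy`, each between 0 and 1) and all centroid values are hypothetical assumptions chosen only for illustration.

```python
import math

# Hypothetical centroids in a 2-D (valence, energy) space, each in [0, 1].
# These numbers are illustrative assumptions, not values from the report.
CENTROIDS = {
    "happy": (0.8, 0.6),
    "sad": (0.2, 0.3),
    "energetic": (0.6, 0.9),
    "calm": (0.5, 0.1),
}


def classify(valence: float, energy: float) -> str:
    """Return the label whose centroid is closest to the song's features."""
    point = (valence, energy)
    return min(CENTROIDS, key=lambda label: math.dist(CENTROIDS[label], point))


if __name__ == "__main__":
    # A made-up song with high valence and moderate energy.
    print(classify(0.9, 0.6))
```

A trained model would learn its decision boundaries from labeled songs rather than from hand-picked centroids, but the output has the same shape: one of four labels per song.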

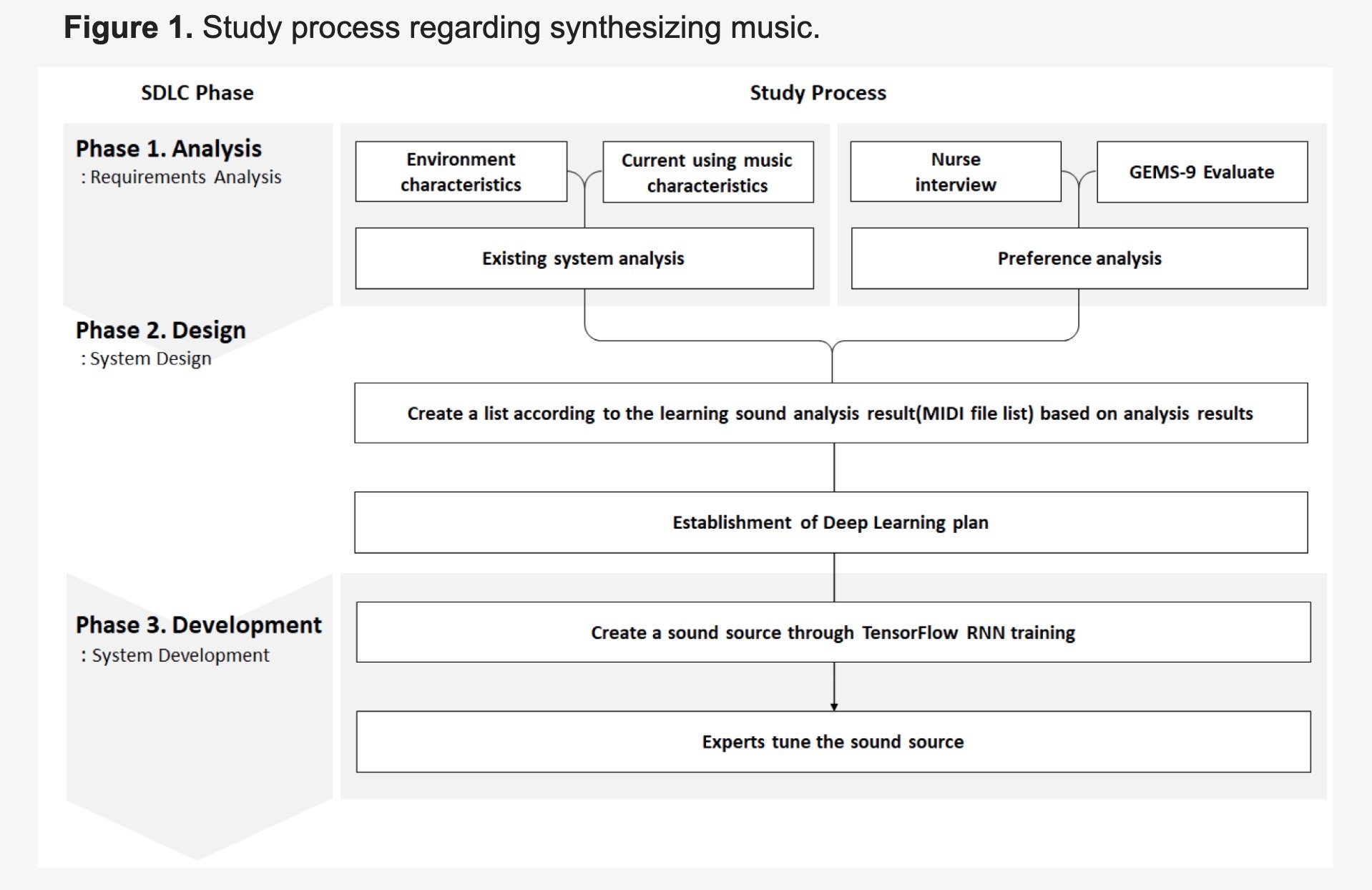

Another experiment showed how artificial intelligence and deep learning could be used to manage anxiety in pre-surgery patients through music. Music interventions commonly used in operating rooms often do not adhere to psychological relaxation standards but instead reflect the preferences of operating room staff or patients: nurses select and play quiet music without applying specific criteria. This trial uses AI to create original arrangements of existing tracks and plays them automatically and continuously throughout the surgery. The approach not only promotes relaxation before surgery but also relieves medical staff of the task of curating playlists, allowing them to focus on other critical medical responsibilities.

Screenshot of Study process regarding synthesizing music (Photo by author)

Source: The Effects of Synthesizing Music Using AI for Preoperative Management of Patients’ Anxiety

The Creative Frontier: AI Collaborations with Artists

While the research has concentrated primarily on affordability and on customization based on a patient’s condition, the evolution of active music therapy has been driven predominantly by artists. A groundbreaking interdisciplinary project, Song of the Ambassadors, spearheaded by K Allado-McDowell, head of Google’s Artists + Machine Intelligence program, combines music, neuroscience, and artificial intelligence to create an innovative opera. This opera blends diverse musical traditions, including Ghanaian, Persian, electronic, and ambient sounds. It collects real-time EEG data from both the audience and performers to produce striking visual effects that work together with the costumes and scenery. Notably, audience engagement is more than passive listening; it is a participatory process that continually informs the performance, making it a truly interactive experience.

Official Video of Song of The Ambassadors from YouTube.

Innovative artists have devised more direct approaches to engage in music production, eliminating the need for any prior musical knowledge. UTSA music instructor Steven Parker draws inspiration from the rich history of marching bands, recognizing their dual role as instruments of sonic expression and even warfare. In his creative work, he fuses these elements with the practice of deep listening, a form of sound meditation. One standout project, titled FIGHT SONG, features an interactive installation tailored for meditation, involving brass instruments and an AI-powered performance by a military band. During the exhibition, attendees wear headphones that translate their brainwaves into auditory experiences. Guided by a series of sound meditation prompts, the audience’s brainwaves are composed into music by AI, and hanging brass instruments bring these sounds to life. In this immersive showcase, attendees can instinctively connect with their own thoughts and emotions through the medium of music, opening up new horizons for the advancement of AI music therapy.

Steve Parker Artist Talk about “FIGHT SONG” from YouTube.

Privacy and Data Security Concerns

While AI music therapy holds tremendous potential, it is not without its share of challenges and ethical dilemmas. The controversy surrounding the ownership of AI-generated music has taken center stage, given AI’s capability to extract and synthesize music autonomously from extensive databases. There are also concerns about the use of artists’ creations as training data without their consent. In February 2023, Getty Images filed a copyright infringement lawsuit in the United States against Stability AI, the company behind the AI art generator Stable Diffusion. The claim asserted that Stable Diffusion had copied 12 million images to train its AI model without proper authorization or compensation. It is highly likely that more such cases are unfolding without the knowledge of artists. By late September 2023, courts and the U.S. Copyright Office had begun to address the copyrightability of generative AI output and its potential infringement on the copyrights of other works.

The left is the original photo from Getty Images and the right is a similar image created using Stable Diffusion.

The artist community’s stance on AI-generated music is widely divergent, with composer and musician Kelly Bishop encapsulating this diversity when she stated, “My initial reaction to AI music ranges from amusement at its possible ineptness to concern that it may become so proficient that it ushers in irreversible changes in the entire musical landscape.” Given that the artificial intelligence industry is still in its developmental stages and lacks comprehensive regulatory frameworks, safeguarding artists’ originality and copyrights is of paramount importance during the technology’s advancement.

The art market is also undergoing a shock due to AI. Studies have found that 75% of people cannot distinguish between works created by artists and works created by artificial intelligence. This has caused panic among curators, collectors, artists, and gallery owners, who worry that AI art will replace real artists and cause large-scale unemployment and upheaval. However, art industry practitioners in the other camp believe that algorithmic models can be used to inspire artists and to produce fresh, novel, and surprising works. They can also help streamline the art management process and raise productivity.

Another issue revolves around privacy and data security. In AI music therapy, personal data and musical preferences may be collected and analyzed, introducing the risk that sensitive patient information is misused or leaked. A notable case in 2023 involved a cyberattack on a healthcare organization in which hackers stole some 3 million patient records. The underlying concern is that AI empowers hackers to execute personalized and automated attacks. This capability enables the generation of countless emails in various languages, tailored to the cultural profile of the targeted organization and mimicking the style of the individual or organization the hacker seeks to impersonate.

“It’s not a faith in technology.

It’s faith in people.”

Conclusion

Integrating AI music therapy into mainstream therapeutic practice depends on successfully resolving the multifaceted challenges and ethical considerations it faces. This requires the concerted efforts of AI experts, healthcare professionals, and ethicists, who must work together to build conscientious and effective AI music therapy systems. As AI technology continues to develop, AI music therapy is poised to take on an important role in treatment. This bodes well for those pursuing emotional and psychological well-being, especially those with limited access to conventional music therapy.

As the spheres of AI and music therapy continue to converge, we can expect a future marked by harmonious cooperation between technology and the art of healing through sound.

-

A Descriptive, Statistical Profile of the 2021 AMTA Membership and Music Therapy Community. American Music Therapy Association, 2021. https://www.musictherapy.org/assets/1/7/2021_Workforce_Analysis_final.pdf

American Music Therapy Association. “History of Music Therapy.” Musictherapy.org, American Music Therapy Association, 2019, www.musictherapy.org/about/history

Bishop, Kelly. “Is AI Music a Genuine Threat to Real Artists?” Www.vice.com, 16 Feb. 2023, www.vice.com/en/article/88qzpa/artificial-intelligence-music-industry-future.

Chandran, Nyshka. “Hi-Tech Therapy: AI’s Arrival in Sound Wellness.” Hii Magazine, 2 Mar. 2023, www.hii-mag.com/article/hi-tech-therapy-ai-s-arrival-in-sound-wellness.

Flo, Birthe K., et al. “Study Protocol for the Alzheimer and Music Therapy Study: An RCT to Compare the Efficacy of Music Therapy and Physical Activity on Brain Plasticity, Depressive Symptoms, and Cognitive Decline, in a Population with and at Risk for Alzheimer’s Disease.” PLOS ONE, vol. 17, no. 6, 30 June 2022, p. e0270682, https://doi.org/10.1371/journal.pone.0270682.

Generative Artificial Intelligence and Copyright Law. Congressional Research Service, 29 Sept. 2023. https://crsreports.congress.gov/product/pdf/LSB/LSB10922

Hong, Yeong-Joo, et al. “The Effects of Synthesizing Music Using AI for Preoperative Management of Patients’ Anxiety.” Applied Sciences, vol. 12, no. 16, 1 Jan. 2022, p. 8089, www.mdpi.com/2076-3417/12/16/8089, https://doi.org/10.3390/app12168089.

Modran, Horia Alexandru, et al. “Using Deep Learning to Recognize Therapeutic Effects of Music Based on Emotions.” Sensors, vol. 23, no. 2, 14 Jan. 2023, p. 986, https://doi.org/10.3390/s23020986.

Molino, Angelo. “What Kind of Music Therapy? Passive and Active Treatments Applications.” Www.linkedin.com, May 2019, Accessed 12 Oct. 2023, www.linkedin.com/pulse/what-kind-music-therapy-passive-active-treatments-angelo-molino/.

Olesch, Artur. “AI Helps Hackers Steal Data. Healthcare Providers Must Get Ready Now.” ICT&Health, 2 Oct. 2023, www.ictandhealth.com/ai-helps-hackers-steal-data-healthcare-providers-must-get-ready-now/news/#:~:text=During%20a%20single%20cyberattack%20on.

Rose, Frank. “Music, Science and Healing Intersect in an A.I. Opera.” The New York Times, 28 Oct. 2022, www.nytimes.com/2022/10/28/arts/music/artificial-intelligence-opera.html.

“The Rise of AI Art: What Does This Mean for the Art Market?” The Rise of AI Art: What Does This Mean for the Art Market?, 25 Oct. 2022, www.artsper-for-galleries.com/blog/the-rise-of-ai-art-what-does-this-mean-for-the-art-market.

“UTSA Professor Uses Marching Bands to Explore the Healing Art of Music.” Www.utsa.edu, 2023, www.utsa.edu/today/2023/07/story/steven-parker-explores-healing-art-of-music.html#:~:text=An%20interactive%20installation%20called%20Sonic. Accessed 12 Oct. 2023.

Vincent, James. “Getty Images Sues AI Art Generator Stable Diffusion in the US for Copyright Infringement.” The Verge, 6 Feb. 2023, www.theverge.com/2023/2/6/2358793/ai-art-copyright-lawsuit-getty-images-stable-diffusion.