As digital crowdsourced art continues as a mode of art making, it is necessary to develop an understanding of which features of digital arts programming are crucial to engaging digital audiences. The following analysis of four digital art projects focuses on the participatory rather than the interactive: projects in which audiences become artists by making one or more creative contributions to a piece of art. Perhaps not surprisingly, agency and control were identified as significant to participation. The summary below gives an overview of the process and findings. The complete report is available here.

Process

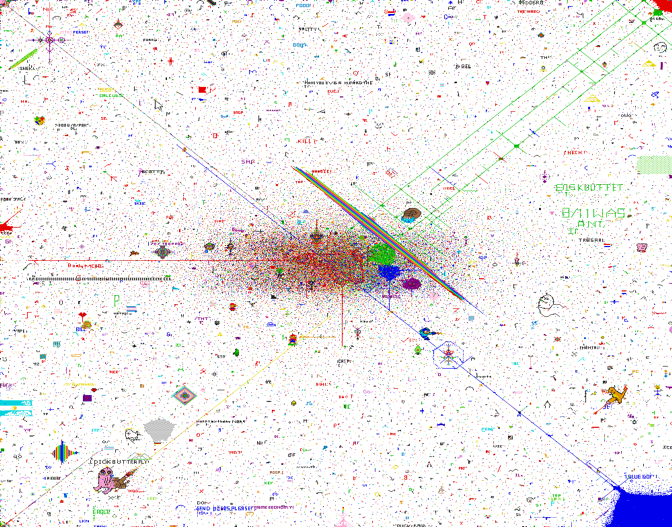

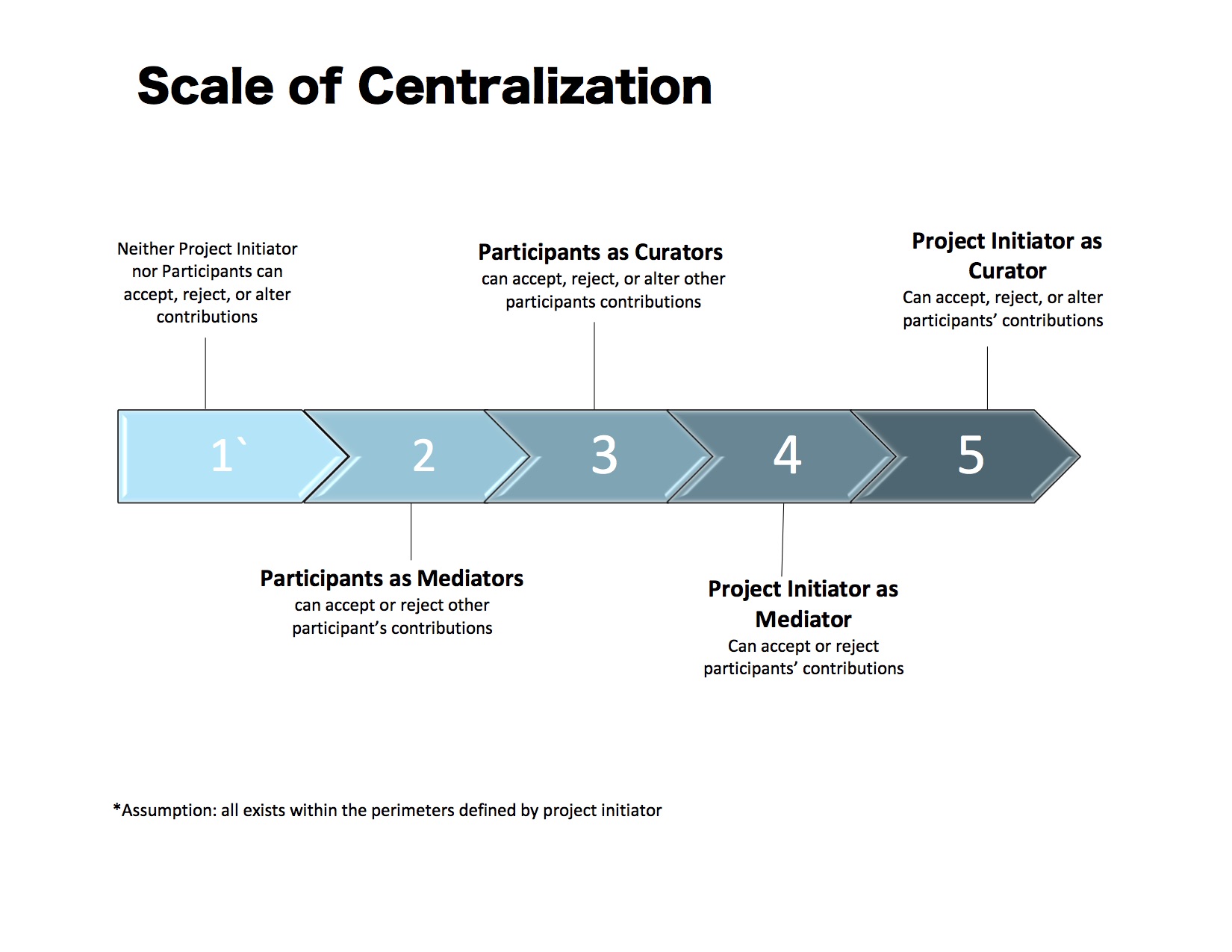

The research team reviewed crowdsourced digital art projects and developed two scales upon which to position projects. In the review of digital art projects it became evident that the level of centralization and control in each project varied. Furthermore, there was an inherent difference in the product of the project depending on the control mechanisms established by the project initiator. Thus, a preliminary scale was designed to capture the range of centralization in the case projects (Figure 1). In examining the varied levels of centralization in the case projects, a secondary difference emerged in the types of participant contributions that impacted the level of engagement or facility a participant had in the project. Literature on audience engagement and the evolution of participatory art informed a separate scale of participant agency (Figure 2).

Figure 1, Scale of Centralization

Figure 2, Scale of Agency

The research focused on four projects, first analyzing their process and final product and then positioning them on the scales according to the project specifications:

1. Aaron Koblin’s The Sheep Market. This is a project wherein artist Aaron Koblin paid Amazon’s Mechanical Turk workers $0.02 to draw a single sheep facing to the left. These drawings were compiled and exhibited at a public exhibition. The project now lives as an interactive product online.

Number of Contributors: 7599 unique IP addresses

Scale of Agency Score: 1; Participants’ contribution is pre-defined by the project initiator

Scale of Centralization Score: 4; Project initiator can accept or reject participants’ contributions

Duration of Contribution Collection: 40 days

2. Google’s Quick Draw. This project combines game play with research by prompting participants to draw an item. The participant must try to finish the drawing before the computer guesses what the item is. This is a tool to improve machine learning, while creating an open-source, participant-managed doodling database.

Number of Contributors: 15,000,000

Number of Contributions: 1,000,000,000

Scale of Agency Score: 2; Participants choose from a selection of pre-defined contribution options

Scale of Centralization Score: 2; Participants can accept or reject (in this case, reclassify) the contributions of others

3. Reddit’s Place. This is a project wherein Reddit administrators created a blank canvas for participants to draw on, one pixel at a time.

Number of Contributors: 1,000,000

Scale of Agency Score: 4; Participants work together to choose what and how to contribute

Scale of Centralization Score: 3; Participants accept, reject, and alter contributions

Duration of Contribution Collection: 72 hours

4. The Moon Arts Group’s Moon Drawings. This project solicited line drawings from participants around the world via the Moon Drawings website. These drawings were then inscribed on a disc that will travel on a rover to the moon. One part of the rover will draw some of the drawings from the disc on the surface of the moon.

Number of Contributors: 16,000

Scale of Agency Score: 3; Participants choose what to contribute individually

Scale of Centralization Score: 4; Project Initiator can accept or reject contributions

Duration of Contribution Collection: 7 days
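To make the positioning step concrete, the scores listed above can be collected into a small table of (centralization, agency) pairs. The following is only an illustrative Python sketch, not the research team's tooling: the `Project` class, the `position` and `by_agency` helpers, and the choice to put centralization first are all assumptions made for this example, and the numbers are copied directly from the project summaries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Project:
    """One case project with its two scale scores (hypothetical structure)."""
    name: str
    agency: int          # Scale of Agency score, as listed in the summaries
    centralization: int  # Scale of Centralization score, as listed in the summaries


# Scores copied from the four project summaries above.
PROJECTS = [
    Project("The Sheep Market", agency=1, centralization=4),
    Project("Quick Draw", agency=2, centralization=2),
    Project("Place", agency=4, centralization=3),
    Project("Moon Drawings", agency=3, centralization=4),
]


def position(project: Project) -> tuple[int, int]:
    """Return the project's coordinates as (centralization, agency).

    Which score goes on which axis is an assumption of this sketch.
    """
    return (project.centralization, project.agency)


def by_agency(projects: list[Project]) -> list[Project]:
    """Return the projects ordered from lowest to highest agency score."""
    return sorted(projects, key=lambda p: p.agency)


if __name__ == "__main__":
    for p in by_agency(PROJECTS):
        print(f"{p.name}: position={position(p)}")
```

With only four projects the table is easy to read by eye, but the same structure would extend to the larger sample the analysis calls for.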

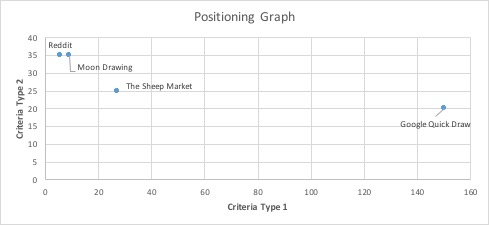

Plotting the scale of centralization against the scale of agency on separate axes, and positioning the projects accordingly, has been insightful, though preliminary, for this set of analyses. The scales for centralization and agency are useful, but they do not yet account for the variance and nuance between crowdsourced digital art projects. Though the projects reviewed varied significantly, they shared some commonalities. The most valuable commonality is, unsurprisingly, that centralization (or control) and participant agency are unavoidable facets of crowdsourced digital art projects. Though the mechanisms of centralization and agency vary, they must be present in order to unify large-scale contributions across digital spaces.

Figure 3. Scale of Centralization vs Agency

With further analysis, a larger sample, and continued development of existing criteria, a conclusion might be drawn about the relationship between centralization and participant agency. Additional features of crowdsourced digital art projects are significant and need further delineation, specifically between process and product. Deeper analysis of these features and criteria will make it possible to diagnose the success of crowdsourced digital art projects, the first step toward informed design and strategic digital programming.

Figure 4. Positioning Graph