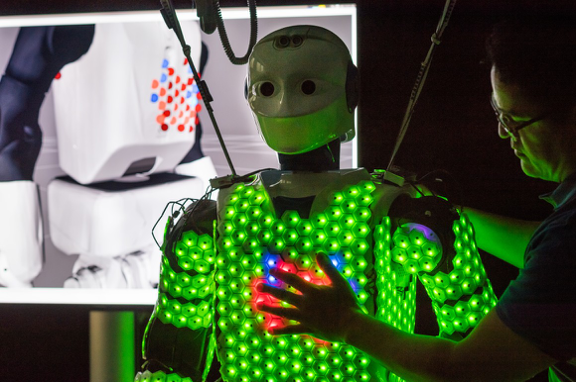

Banner photo: “The Blind Robot” by Louis-Philippe Demers.

Thirty-four distinct definitions for the word “touch” exist in the Merriam-Webster dictionary. Some have subtle differences, and some are archaic, not surprising for a word that's been around since the 14th century. In a sense and as a sense, it's complicated, like relationships. And what is touch, if not the start of many relationships?

Merriam-Webster defines touch as:

1: to bring a bodily part into contact with especially so as to perceive through the tactile sense : handle or feel gently usually with the intent to understand or appreciate

What makes humans unique is not just our ability to touch, but also our ability to sense the touch and respond to it. Studies in the field of tactile sense are increasing, driven by a range of factors: improved touchscreen consumer products like phones and tablets, haptic perception for virtual reality, and the development of a sense of touch in robotics. With touch, we perceive material objects and their spatial properties. Once the body is in motion, that sense plays a vital role in how we navigate the space and negotiate with other bodies and objects sharing that space. Those interactions are a form of communication, which can convey purpose and emotion. Tactile stimuli enrich our comprehension of social interactions. Is it possible to achieve this level of understanding in robots, so much so that they may appreciate the intended meaning of touch and project it themselves? According to Silvera-Tawil, "people naturally seek interaction through touch and expect even inanimate-looking robots to respond to tactile stimulation." Improved human-robot interaction (HRI) would require a robot that can intuit and respond to touch in line with our expectations.

Current State of HRI in Performance

In performance, artists reduce robots to a type of kinetic sculpture with varying degrees of autonomy. We have progressed from merely programming them with dance-like motions to giving them the ability to watch and then mimic movement, and eventually to react and adapt to the situation through algorithmic behaviors. Still, robots remain a tool for the expression of a choreographer's creativity, not creative machines in their own right. Humans and robots have shared the stage for decades, but we are always dancing "next to" and never really "with" each other in a true dynamic partnership. HRI has plateaued due to barriers in computing capacity, engineering, and electronics. Interactions are currently reliant on sensors that translate pressure into an electrical signal. However, most processors can't handle the amount of data coming from a multitude of sensors simultaneously, and these sensors cannot account for the exact position or angle of interaction. They don't know where the source of contact is in space or in relation to them. And they fail in the presence of moisture, which can be especially problematic in a performance setting when the human counterpart is sweating. Hopefully, the ongoing quest for what some scientists are calling “e-skin” or “smart skin” will address these limitations.

Why Are Humans So Good at Touch?

We are born for it. Touch is the first of the five senses to develop in a human embryo. Our skin is the largest organ of the human body (about 16% of total body weight), and the surface area covers approximately 22 square feet. Its primary functions are reacting to sensations of touch, vibration, and pressure; regulating body temperature; and responding to sensations of heat and cold. While the skin is composed of several layers, for touch, we are mostly concerned with two. The epidermis is the visible topmost layer of skin. Waterproof, it serves as a protective wrap for the underlying skin layers and houses a variety of sensitive cells called touch receptors. Just beneath is the dermis, containing hair follicles, sweat glands, sebaceous glands, blood vessels, nerve endings, and more touch receptors. Its primary function is to sustain and support the epidermis.

Figure 1: Sensory receptors in human skin. Source: The Salience.

Outside stimuli (mechanical, thermal, and chemical information) are received and translated via a myriad of nerve endings all over the body, then transmitted to the brain via electrical signals allowing us to perceive sensations both pleasurable and painful. Several distinct receptor types exist, including thermoreceptors (temperature), nociceptors (pain), and mechanoreceptors (pressure). Collectively, these nerve cells that respond to both internal and external changes in body state are known as the somatosensory system.

The distance between the receptors determines sensitivity. Fingers, tongue, lips, nose, and forehead are highly sensitive to touch, meaning that these parts have a higher density of tactile organs. Not surprisingly, perhaps, these are all critical parts of the body for touch. Swedish scientists have shown how a human finger can sense even the tiniest irregularity of a seemingly smooth surface. A study from 2013 marked the first time researchers were able to quantify our sense of touch; humans can detect a ridge of just 13 nanometers (the size of a very large molecule).

At the same time, skin facilitates free movement. The four distinct types of mechanoreceptors are essential for fine motor control.

The Merkel receptor is a disk-shaped receptor located near the border between the epidermis and dermis. It demonstrates a slow response and has a small receptive field. It is useful for detecting steady pressure from small objects, such as when gripping something with the hand.

The Meissner corpuscle is a stack of flattened cells located in the dermis, near the epidermis. It demonstrates a rapid response and has a small receptive field. It is useful for detecting texture or movement of objects against the skin.

The Ruffini cylinder is located in the dermis and has many branched fibers inside a cylindrical capsule. It demonstrates a slow response and has a large receptive field. It is good for detecting steady pressure or stretching, such as during the movement of a joint.

The Pacinian corpuscle is a layered, onion-like capsule surrounding a nerve fiber. It is located deep in the dermis, in the subcutaneous fat. It demonstrates a fast response and has a large receptive field. It is useful for detecting large changes in the environment, such as vibrations.
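The four descriptions above amount to a two-by-two grid: response speed (slow or rapid) against receptive field size (small or large). The short Python sketch below lays that grid out as data. It is only an illustration; the class names, field names, and the `find_receptor` helper are assumptions made for this example and do not come from any anatomy library.

```python
from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    SLOW = "slow"
    RAPID = "rapid"


class FieldSize(Enum):
    SMALL = "small"
    LARGE = "large"


@dataclass(frozen=True)
class Mechanoreceptor:
    name: str
    response: Response
    receptive_field: FieldSize
    detects: str


# One entry per receptor described in the text.
RECEPTORS = [
    Mechanoreceptor("Merkel receptor", Response.SLOW, FieldSize.SMALL,
                    "steady pressure from small objects"),
    Mechanoreceptor("Meissner corpuscle", Response.RAPID, FieldSize.SMALL,
                    "texture or movement of objects against the skin"),
    Mechanoreceptor("Ruffini cylinder", Response.SLOW, FieldSize.LARGE,
                    "steady pressure or stretching"),
    Mechanoreceptor("Pacinian corpuscle", Response.RAPID, FieldSize.LARGE,
                    "large changes in the environment, such as vibrations"),
]


def find_receptor(response, receptive_field):
    """Return the receptor that occupies one cell of the two-by-two grid."""
    for receptor in RECEPTORS:
        if (receptor.response is response
                and receptor.receptive_field is receptive_field):
            return receptor
    raise LookupError("no receptor with that combination")


# Print the grid, one receptor per line.
for r in RECEPTORS:
    print(f"{r.name}: {r.response.value} response, "
          f"{r.receptive_field.value} field, detects {r.detects}")
```

Each combination of speed and field size maps to exactly one receptor, which is why the four types together cover both fine detail and broad change.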

Stimulation or deformation of the tissues in which the mechanoreceptors lie (essentially the stretching or compressing of the skin over joints, ligaments, and muscles) elicits an action potential. The receptors transmit the information to the central nervous system (brain and spinal cord), which then responds by activating the skeletal muscles accordingly. This rich innervation system helps us sense our environment and move through it safely. We perceive objects in the space around us through active exploration, not in a static state. Movement is an inherent part of haptic perception.

In 1966, Gibson defined the haptic system as "the sensibility of the individual to the world adjacent to his body by use of his body." People can rapidly and accurately identify three-dimensional objects by touch: we run our fingers over the surface, hold it in our hands, squeeze it, and feel its weight and temperature. And while most research relating to our sense of touch tends to focus on human hands, due to the concentration of receptors, we must remember our skin doesn't end at the wrists. Unlike our other senses, touch uses the entire body as its tool. There are around 5 million receptors distributed throughout the human body. But our sense of touch isn't just about the facts. Location, movement, and pressure are components of discriminative touch. There's also an emotional touch system.

Figure 2: The cortical homunculus side by side with a human figure scaled to match the proportions of how touch sensors are represented in the brain. Source: OpenStax College.

An entirely different set of "special sensors called C tactile fibers...sends information to a part of the brain called the posterior insula that is crucial for socially-bonding touch." In a way, this system is more primitive, known to be present in the hairy skin of other mammals. Scientists didn't confirm the existence of these nerve fibers in humans until the late 1980s. This secondary pathway used for processing touch is part of the reason it's so hard to understand completely how our sense of touch works. Studies have shown that humans can decode anger, fear, disgust, love, gratitude, and sympathy via touch. Different emotions are associated with particular touch behaviors, which can even be perceived by merely watching others communicate via touch. Acting in tandem, emotional and social components of touch are inseparable from physical sensations.

Developing a comparable sense of touch for robots is complicated and has been a research topic for decades. But as in nature, touch is essential if we expect robots to exist and collaborate with humans in shared spaces. Physical empathy is a necessary quality for safety, especially for robots working in close contact with human counterparts. Moving in proximity is of particular interest in the artistic sphere. How many sensors would a robot need to be a genuinely autonomous and responsive performance partner?

Robots Onstage

Robotic performance art typically includes theatrical presentations or installations where most, if not all, of the show is executed by robots rather than by people. We see examples of this dating back to the 1950s (Brill). The main concern here is mostly the interaction between the robot and the audience. How can the creator develop a synergistic connection between robot (as a performer) and people (as the viewer)? But let's leave the audience out of it for a moment and consider instead onstage relationships when there are humans in the mix.

Most robots currently used in artistic productions are entirely preprogrammed. There are some advantages to this setup: predictability, consistency, and repeatability. A human performer knows precisely what their robotic counterpart is going to do, how it is going to do it, and that it will do it the same way every time. That dependability helps to nurture trust in the partnership, but no human performer can match those qualities. There are always subtle changes to timing or position, and sometimes more significant errors like forgetting the choreography and needing to improvise or even falling. The downside, then, is that the robot cannot adapt. Programmed behaviors could lead to serious injury if the partners are close. When we add physical contact or weight sharing, we increase the risk. But it's the touch, the actual connection, between human and robot that creates the necessary tension for thought-provoking art.

Consider Huang Yi and KUKA

The duet shown is the third of four sections in an hour-long performance, which toured internationally in 2015. It is an exceptional example of what appears to be seamless human-robot interaction in real time. Yet it took years of planning and thousands of programming hours to create. In 2010, Taiwanese choreographer Huang Yi approached KUKA, a German robotics company, and asked if he could dance with one of their industrial robots. The company replied that “according to the regulation, when the robot is moving, human beings cannot enter the area of its action. If you can find a way, I will lend you a KUKA.” The company finally agreed, but first Yi signed a waiver declaring himself responsible for any injuries resulting from dancing with the 2000-pound robot. With his life on the line, Yi (a self-taught coder) spent anywhere from 10 to 20 hours creating each minute of partnering with the robot. Using data analysis to quantify the physical characteristics of various emotional states resulted in eerily lifelike movements. The programming created an illusion of a true connection and balance between human and robot. However, there is still a human at the center of the paradigm, and the robot is still incredibly dangerous in close encounters. The KUKA robot is not autonomous; there is no room for error or improvisation. According to Cheng:

The biggest challenge of dancing with a robot is that robots never change, which brings out and amplifies the flaws of human beings. Humans get angry, frightened, or tired, while robots convey no emotions, obey all orders, and accept every piece of information inserted.

Figure 3: Huang Yi and KUKA in Huang Yi & KUKA. Photo by Jacob Blickenstaff.

While multi-axis industrial robots may lack the humanoid look of other robots geared toward HRI, they offer the most movement capabilities for performance. They are often able to mimic quite closely the articulation of the human spine and limbs, with different qualities and speeds. And robots like KUKA are very stable, once mounted adequately. But without the addition of an "advanced tactile feedback feature" to the interface, they won't interact more naturally with humans, and safety will always be an issue.

Give Me Some Skin: Meet H-1

Led by Professor Gordon Cheng, a team at the Technical University of Munich has successfully covered a human-sized robot in artificial skin for the first time. Although you can see in the video that H-1 humanoid robots don't move as elegantly as KUKA's industrial robots, they are less likely to batter or bruise their human partners. Equipped with 1,260 cells, containing a total of 13,000 sensors, H-1 can effectively feel touch. Each cell is about an inch wide with a self-contained microprocessor capable of discerning contact, acceleration, proximity, and temperature. The robot skin can provide a wealth of contact information about the interaction forces of its environment and its human counterparts. What's exciting about Cheng's design is that every part of the robot is covered, not just endpoints of contact (think fingers) as in the past. But with the large number of sensors, the e-skin must also handle a large amount of tactile data within limited available communication bandwidth and without overloading the central computer. Amit Malewar explains:

The trick: The individual cells transmit information from their sensors only when values are changed. This is similar to the way the human nervous system works. For example, we feel a hat when we first put it on, but we quickly get used to the sensation. There is no need to notice the hat again until the wind blows it off our head. This enables our nervous system to concentrate on new impressions that require a physical response.
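The idea in that passage, reporting a reading only when it changes, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the TUM team's implementation: the `SkinCell` class, the `threshold` value, and the made-up pressure readings are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SkinCell:
    """A toy skin cell that reports only meaningful changes (hypothetical)."""
    cell_id: int
    threshold: float = 0.05          # assumed minimum change worth reporting
    last_sent: Optional[float] = None

    def sample(self, value: float) -> Optional[Tuple[int, float]]:
        """Return (cell_id, value) if the reading changed enough, else None."""
        if self.last_sent is None or abs(value - self.last_sent) >= self.threshold:
            self.last_sent = value
            return (self.cell_id, value)
        return None


def collect_events(cell: SkinCell, readings: List[float]) -> List[Tuple[int, float]]:
    """Feed readings to one cell and keep only the events it chooses to send."""
    events = []
    for value in readings:
        event = cell.sample(value)
        if event is not None:
            events.append(event)
    return events


# The hat example as made-up pressure values: nothing, hat on, hat blown off.
readings = [0.0, 0.0, 0.5, 0.5, 0.5, 0.52, 0.0]
events = collect_events(SkinCell(cell_id=0), readings)
print(f"{len(events)} events sent for {len(readings)} samples: {events}")
```

In this run the cell sends three messages for seven samples: the initial state, the moment the hat goes on, and the moment it comes off. A cell that reported every sample would send all seven, so the saving grows with the number of cells and the length of time nothing changes.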

Figure 4: The sensors detect how close the source of contact is along with pressure, vibration, temperature, and even movement in three-dimensional space. Source: Astrid Eckert / TUM.

The added sensitivity allows the robot to work autonomously and safely with human counterparts and even to avoid accidents proactively. Because each cell functions individually, the artificial skin continues to work even if some cells fail. Additionally, soft and silicone-based materials could cover the sensors to improve friction properties and provide waterproofing. Although initially created with social robots and cobots (collaborative robots) in mind, the skin cells can be attached to any manner of robot. The researchers are now working on shrinking the skin cells further and making them cost-effective to manufacture in volume.

Coming Soon to a Theater Near You

The liability involved and the cost associated with robot performance art often keep it out of mainstream performing arts centers and drive it into alternative or specialized spaces: underground venues, tech conferences, commercial festivals, or university settings. Touring is expensive, in part because the equipment is heavy and of high value. The time required to prepare the space can also be extensive.

Is it worth it? Can all this haptic data impart meaning to HRI in such a way that forges real relationships onstage? Eventually, perhaps, with more advances in artificial intelligence and machine learning. It would be impossible to hand-code appropriate physical responses to every type of touch a robot might encounter in live performance. Genuine movement creation with a partner in real time requires both emotional and physical connection between individuals, whether they are robot or human. Touch is vital in forming relationships, as we communicate through contact; it conveys a message or intention to the recipient. But underlying tactile information and context enrich our physical interactions. There is no one correct course of action; it's a learning process. According to David Silvera-Tawil, David Rye, and Mari Velonaki:

A number of factors influence the way that humans interpret touch, and most of these factors are related to touch only in indirect ways. In other words, touch interpretation for human–robot interaction cannot depend purely on an artificial sensitive skin. Touch interpretation should work in a harmonious balance with other sensory modalities–for example, sound or speech, vision and proprioception–that can provide more information about the people touching the robot, the environment, and the nature of the touch.

For now, we must be satisfied with the semblance of an equal partnership, as humans have created robots adept at mimicry and obedience, but not quite self-guidance. The progress is, however, beautiful to watch.

Resources

A Human-Robot Dance Duet. Accessed November 20, 2010. https://www.ted.com/talks/huang_yi_kuka_a_human_robot_dance_duet.

Amato, Ivan. “In Search of the Human Touch.” Science 258, no. 5087 (November 27, 1992): 1436–37. https://doi.org/10.1126/science.1439836.

Anandan, Tanya. “Elevating the Art of Entertainment – Robotically.” Robotics Online, July 26, 2017. https://www.robotics.org/content-detail.cfm/Industrial-Robotics-Industry-Insights/Elevating-the-Art-of-Entertainment-Robotically/content_id/6654.

Andreasson, Rebecca, Beatrice Alenljung, Erik Billing, and Robert Lowe. “Affective Touch in Human–Robot Interaction: Conveying Emotion to the Nao Robot.” International Journal of Social Robotics 10, no. 4 (2018): 473. https://doi.org/10.1007/s12369-017-0446-3.

Anikeeva, Polina, and Ryan A. Koppes. “Restoring the Sense of Touch.” Science 350, no. 6258 (October 16, 2015): 274. https://doi.org/10.1126/science.aad0910.

Apostolos, M. K., M. Littman, S. Lane, D. Handelman, and J. Gelfand. “Robot Choreography: An Artistic-Scientific Connection.” Computers and Mathematics with Applications 32, no. 1 (July 1996): 1–4.

Bartneck, Christoph. “Art in Human-Robot Interaction: What and How Does Art Contribute to HRI?” Human Robot Interaction, April 18, 2019. https://www.human-robot-interaction.org/2019/04/18/art-in-human-robot-interaction/.

Bergner, Florian, Emmanuel Dean-Leon, Julio Rogelio Guadarrama-Olvera, and Gordon Cheng. “Evaluation of a Large Scale Event Driven Robot Skin.” IEEE Robotics and Automation Letters 4, no. 4 (October 2019): 4247–54. https://doi.org/10.1109/LRA.2019.2930493.

Bianchi, Matteo, and Alessandro Moscatelli. Human and Robot Hands Sensorimotor Synergies to Bridge the Gap Between Neuroscience and Robotics. 1st ed. 2016. Springer Series on Touch and Haptic Systems. Cham: Springer International Publishing, 2016.

Bret, Michel, Marie-Hélène Tramus, and Alain Berthoz. “Interacting with an Intelligent Dancing Figure: Artistic Experiments at the Crossroads between Art and Cognitive Science.” Leonardo 38, no. 1 (2005): 47–53.

Brill, Louis M. “Art Robots: Artists of the Electronic Palette. (Robotics as Autonomous Kinetic Sculpture in Visual and Performing Arts) (Technical).” AI Expert 9, no. 1 (1994): 28.

Buiu, Catalin, and Nirvana Popescu. “Aesthetic Emotions in Human-Robot Interaction. Implications on Interaction Design of Robotic Artists.” International Journal of Innovative Computing, Information & Control 7, no. 3 (2011): 1097–1107.

Cheng, Gordon, Emmanuel Dean-Leon, Florian Bergner, Julio Rogelio Guadarrama Olvera, Quentin Leboutet, and Philipp Mittendorfer. “A Comprehensive Realization of Robot Skin: Sensors, Sensing, Control, and Applications.” Proceedings of the IEEE 107, no. 10 (October 2019): 2034–51. https://doi.org/10.1109/JPROC.2019.2933348.

Cheng, Yenlin. “My Dance Partner Is Not A Human.” CommonWealth Magazine, December 12, 2017. https://english.cw.com.tw/article/article.action?id=1759.

Classen, Constance. The Deepest Sense: A Cultural History of Touch. Studies in Sensory History. Urbana: University of Illinois Press, 2012.

Coxsworth, Ben. “Electronic Robot Skin Out-Senses the Real Thing.” New Atlas, August 10, 2018. https://newatlas.com/smart-robot-skin/55853/.

Ellenbecker, Todd S., George J. Davies, and Jake Bleacher. “Chapter 24 - Proprioception and Neuromuscular Control.” In Physical Rehabilitation of the Injured Athlete (Fourth Edition), edited by James R. Andrews, Gary L. Harrelson, and Kevin E. Wilk, 524–47. Philadelphia: W.B. Saunders, 2012. https://doi.org/10.1016/B978-1-4377-2411-0.00024-1.

Ergen, Emin, and Bülent Ulkar. “Chapter 18 - Proprioception and Coordination.” In Clinical Sports Medicine, edited by Walter R. Frontera, Stanley A. Herring, Lyle J. Micheli, Julie K. Silver, and Timothy P. Young, 237–55. Edinburgh: W.B. Saunders, 2007. https://doi.org/10.1016/B978-141602443-9.50021-0.

“Functions of the Integumentary System | Boundless Anatomy and Physiology.” Accessed January 1, 2020. https://courses.lumenlearning.com/boundless-ap/chapter/functions-of-the-integumentary-system/.

Gibson, James Jerome. The Senses Considered as Perceptual Systems. Boston: Houghton Mifflin, 1966.

Guadarrama-Olvera, Julio Rogelio, Emmanuel Dean-Leon, Florian Bergner, and Gordon Cheng. “Pressure-Driven Body Compliance Using Robot Skin.” IEEE Robotics and Automation Letters 4, no. 4 (October 2019): 4418–23. https://doi.org/10.1109/LRA.2019.2928214.

Hertenstein, Matthew J., Dacher Keltner, Betsy App, Brittany A. Bulleit, and Ariane R. Jaskolka. “Touch Communicates Distinct Emotions.” Emotion 6, no. 3 (2006): 528–33. http://www.communicationcache.com/uploads/1/0/8/8/10887248/touch_communicates_distinct_emotions...pdf.

Vimeo. “Huang Yi.” Accessed November 6, 2019. https://vimeo.com/huangyi.

“Human-Robot Interaction.” Accessed November 6, 2019. https://www.frontiersin.org/journal/robotics-and-ai/section/human-robot-interaction.

KUKA AG. “Industrial Robots.” Accessed January 6, 2020. https://www.kuka.com/en-us/products/robotics-systems/industrial-robots.

Kac, Eduardo. “The Origin and Development of Robotic Art.” Convergence: The International Journal of Research into New Media Technologies 7, no. 1 (2001): 76–86. https://doi.org/10.1177/135485650100700108.

Klatzky, Roberta L., Susan J. Lederman, and Victoria A. Metzger. “Identifying Objects by Touch: An ‘Expert System.’” Perception & Psychophysics 37, no. 4 (July 1, 1985): 299–302. https://doi.org/10.3758/BF03211351.

Lammers, Sebastian, Gary Bente, Ralf Tepest, Mathis Jording, Daniel Roth, and Kai Vogeley. “Introducing ACASS: An Annotated Character Animation Stimulus Set for Controlled (e)Motion Perception Studies.” Frontiers in Robotics and AI 6 (September 27, 2019): 94. https://doi.org/10.3389/frobt.2019.00094.

Liljencrantz, Jaquette, and Håkan Olausson. “Tactile C Fibers and Their Contributions to Pleasant Sensations and to Tactile Allodynia.” Frontiers in Behavioral Neuroscience 8 (2014). https://doi.org/10.3389/fnbeh.2014.00037.

Linden, David. Touch: The Science of Hand, Heart, and Mind. Viking, 2015.

“Modern Dance Premiere Is a Delicate Collaboration between Human and ABB Robot.” Accessed November 5, 2019. https://new.abb.com/news/detail/6936/modern-dance-premiere-is-a-delicate-collaboration-between-human-and-abb-robot.

Mone, Gregory. “Robots with a Human Touch.” Communications of the ACM 58, no. 5 (2015): 18–19. https://doi.org/10.1145/2742486.

Morris, Andréa. “Meet Amy LaViers: The Choreographer Engineer Teaching Robots To Dance For DARPA.” Forbes. Accessed November 5, 2019. https://www.forbes.com/sites/andreamorris/2018/04/04/meet-amy-laviers-the-choreographer-engineer-teaching-robots-to-dance-for-darpa/.

Mullis, Eric. “Dance, Interactive Technology, and the Device Paradigm.” Dance Research Journal 45, no. 3 (2013): 111–124.

Mullis, Eric C. “Performing with Robots: Embodiment, Context and Vulnerability Relations.” International Journal of Performance Arts & Digital Media 11, no. 1 (January 2015): 42–53. https://doi.org/10.1080/14794713.2015.1009233.

Nabar, Bhargav P., Zeynep Celik-Butler, and Donald P. Butler. “Self-Powered Tactile Pressure Sensors Using Ordered Crystalline ZnO Nanorods on Flexible Substrates Toward Robotic Skin and Garments.” IEEE Sensors Journal 15, no. 1 (January 2015): 63–70. https://doi.org/10.1109/JSEN.2014.2337115.

Nabokov, Vladimir Vladimirovich. Lolita. 2nd Vintage International ed. Vintage International. New York: Vintage, 1997.

Pandey, Ajay, and Jonathan Roberts. “It’s Not Easy to Give a Robot a Sense of Touch.” GCN, July 17, 2019. https://gcn.com/articles/2019/07/17/artificial-sense-of-touch.aspx.

“Renowned Swedish Choreographer’s New Dance Partner Is One of the World’s Largest Industrial Robots.” Accessed November 5, 2019. https://new.abb.com/news/detail/6934/renowned-swedish-choreographers-new-dance-partner-is-one-of-the-worlds-largest-industrial-robots.

Renowned Swedish Choreographer’s New Dance Partner Is One of the World’s Largest Industrial Robots. Accessed November 5, 2019. https://www.youtube.com/watch?v=m9oPe9CsUpI.

Rogers, Dan. “Discotech.(Use of Robots in Performing Arts).” Engineering 248, no. 10 (2007): 54–55.

Ronner, Abby. “I Spent an Evening at the Robot Ballet.” Vice (blog), June 16, 2015. https://www.vice.com/en_us/article/kbng79/i-spent-an-evening-at-the-robot-ballet.

Scheva, Be’er. “Art, Design and Human-Robot Interaction,” January 10, 2019. https://arthist.net/archive/18802.

“Sensitive Robots Are Safer.” Accessed November 20, 2019. https://www.tum.de/nc/en/about-tum/news/press-releases/details/35732/.

Sensitive Skin for Robots. Accessed November 20, 2019. https://www.youtube.com/watch?v=M-Y2HW6JcGI#action=share.

Shiomi, Masahiro, Kayako Nakagawa, Kazuhiko Shinozawa, Reo Matsumura, Hiroshi Ishiguro, and Norihiro Hagita. “Does A Robot’s Touch Encourage Human Effort?” International Journal of Social Robotics 9, no. 1 (2017): 5–15. https://doi.org/10.1007/s12369-016-0339-x.

Shirado, H., Y. Nonomura, and T. Maeno. “Realization of Human Skin-like Texture by Emulating Surface Shape Pattern and Elastic Structure.” In 2006 14th Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, 295–96, 2006. https://doi.org/10.1109/HAPTIC.2006.1627125.

Silvera-Tawil, David, David Rye, and Mari Velonaki. “Artificial Skin and Tactile Sensing for Socially Interactive Robots: A Review.” Robotics and Autonomous Systems 63, no. P3 (January 2015): 230–43.

Skedung, Lisa, Martin Arvidsson, Jun Young Chung, Christopher M. Stafford, Birgitta Berglund, and Mark W. Rutland. “Feeling Small: Exploring the Tactile Perception Limits.” Scientific Reports 3, no. 1 (September 12, 2013): 1–6. https://doi.org/10.1038/srep02617.

Science. “Skin and How It Functions,” January 18, 2017. https://www.nationalgeographic.com/science/health-and-human-body/human-body/skin/.

Sozo Artists. “Sozo Artists.” Accessed November 5, 2019. https://www.sozoartists.com/huangyi.

“Strap on Your Exoskeleton and Dance, Dance, Dance.” Wired. Accessed November 5, 2019. https://www.wired.com/story/gray-area-festival-2019/.

Tachi, Susumu. “Remotely Controlled Robot with Human Touch.” Advanced Manufacturing Technology 33, no. 8 (2012): 10.

“The Art of Human-Robot Interaction: Creative Perspectives from Design and the Arts | Frontiers Research Topic.” Accessed November 6, 2019. https://www.frontiersin.org/research-topics/11183/the-art-of-human-robot-interaction-creative-perspectives-from-design-and-the-arts.

“Touch | Definition of Touch by Merriam-Webster.” Accessed December 10, 2019. https://www.merriam-webster.com/dictionary/touch.

Triscoli, Chantal, Håkan Olausson, Uta Sailer, Hanna Ignell, and Ilona Croy. “CT-Optimized Skin Stroking Delivered by Hand or Robot Is Comparable.” Frontiers in Behavioral Neuroscience 7 (2013). https://doi.org/10.3389/fnbeh.2013.00208.

Wilson, Mark. “Robots Have Skin Now. This Is Fine.” Fast Company, October 14, 2019. https://www.fastcompany.com/90416395/robots-have-skin-now-this-is-fine.

Yi, Huang. “黃翊與庫卡.” Huang Yi Studio + (blog), April 20, 2018. http://huangyi.tw/project/%e9%bb%83%e7%bf%8a%e8%88%87%e5%ba%ab%e5%8d%a1/.

Yu, Xinge, Zhaoqian Xie, Yang Yu, Jungyup Lee, Abraham Vazquez-Guardado, Haiwen Luan, Jasper Ruban, et al. “Skin-Integrated Wireless Haptic Interfaces for Virtual and Augmented Reality.” Nature 575, no. 7783 (November 2019): 473–79. https://doi.org/10.1038/s41586-019-1687-0.