Written by Carol Niedringhaus

When we think about AI, it is usually with some wariness. We’ve all seen too many science-fiction movies where sentient robots take over the world, but we’re mostly certain that the creativity these sentient beings would need to overtake us doesn’t exist. Artists, musicians, and other creatives especially have been comfortable in the knowledge that their livelihoods were not in danger of being replaced by AI because of the creativity inherent in those industries… until now.

Although AI technology has existed for some time, recent developments in the music industry point to a fundamental shift toward the acceptance of AI as a compositional tool. Take, for example, a Billboard article posted on April 5, 2021, about the release of a new Nirvana-style track twenty-seven years after lead singer Kurt Cobain’s tragic death. The group behind the release, Lost Tapes of the 27 Club, is using AI technology to create an album of music in the style of deceased music legends Kurt Cobain, Amy Winehouse, Jimi Hendrix, and others who were lost at the age of twenty-seven due to mental health issues. The songs mirror the writing style and musicianship of these beloved stars through AI analysis of their bodies of work. Listeners to the Nirvana-style song Drowned in the Sun have generally agreed that the AI is convincing.

Lost Tapes of the 27 Club – Drowned in the Sun. Source: YouTube

All this raises many questions: Are computer AI systems inherently creative? What types of AI tools are available to musicians right now? Who is using these tools and why? This article attempts to answer these questions by providing a background on AI use in music composition, analyzing current composition tools (including my own experience with two of these tools), and looking at the implications AI composition has for the future of the music industry.

Developments in Music Related AI

The conceptual framework for AI composers is nothing new. As far back as the 1840s, Ada Lovelace, considered to be the world’s first computer programmer, predicted that computers would at some point become capable of producing creative outputs. She is quoted as saying that computers “might compose elaborate and scientific pieces of music of any degree of complexity.” More recently, developments in AI technology have resulted in major changes for the music industry and accelerated the development of new tools for composers’ musical toolboxes.

History of AI and Music. Source: A Retrospective of AI + Music

In a Medium article titled A Retrospective of AI + Music, author Chong Li, an interaction designer at Google, lays out a three-part framework for the history of AI use in music, summarized in the image above and detailed in the following paragraphs.

Development of this technology began in earnest in the 1950s with the first fully AI-composed work, the Illiac Suite for String Quartet, programmed by Lejaren Hiller and Leonard Isaacson at the University of Illinois at Urbana–Champaign. This “algorithmic composition” uses algorithms that let a computer make compositional decisions based on a given set of rules. This is similar to the way humans write counterpoint: a set of rules guides where the next note is placed. Algorithmic composition merely passes this decision-making process to a computer rather than a human hand.
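To make the idea of rule-driven composition concrete, here is a minimal Python sketch. It is only an illustration: the rules (stay in C major, start and end on the tonic, never repeat a note, never leap more than a fifth) and the names `allowed_next_notes` and `compose` are my own assumptions, not the rules or code used for the Illiac Suite.

```python
import random

# Hypothetical rule set for illustration; not the rules used for the Illiac Suite.
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]  # MIDI note numbers, C4 up to C5
MAX_LEAP = 7  # rule: no melodic leap larger than a perfect fifth (7 semitones)


def allowed_next_notes(previous, scale=C_MAJOR, max_leap=MAX_LEAP):
    """Return the scale notes the rules permit directly after `previous`."""
    return [
        note for note in scale
        if note != previous and abs(note - previous) <= max_leap
    ]


def compose(length=8, seed=None):
    """Build a melody note by note, checking every choice against the rules."""
    if length < 3:
        raise ValueError("length must be at least 3")
    rng = random.Random(seed)
    tonic = C_MAJOR[0]
    melody = [tonic]  # rule: start on the tonic
    for position in range(1, length - 1):
        candidates = allowed_next_notes(melody[-1])
        if position == length - 2:
            # The second-to-last note must also be able to reach the final tonic.
            reachable = allowed_next_notes(tonic)
            candidates = [note for note in candidates if note in reachable]
        melody.append(rng.choice(candidates))
    melody.append(tonic)  # rule: end on the tonic
    return melody
```

Calling `compose(length=8, seed=1)` returns eight MIDI note numbers that satisfy every rule. The computer never "knows" anything about music here; it simply picks at random among the notes the rules allow, which is the sense in which the decision-making is handed from the human to the machine.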

As research progressed, new models of programming came about in the 1980s with “generative modeling,” which more closely mimics the neural networks involved in human brain activity. Rather than simply being given a set of rules, the AI is fed volumes of existing data from which it can learn. For example, programmers were able to use generative modeling to compose the final two movements of Franz Schubert’s unfinished Symphony No. 8, which he abandoned writing in 1822. They fed the software as much of Schubert’s music as they could find (about 2,000 pieces of piano music), which let the software detect compositional patterns and “think” like Schubert when composing its own piece. Of course, the end result was then touched up by a human composer before being performed.
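The contrast with rule-based composition can be sketched in a few lines of Python. This example swaps in a first-order Markov chain, which is far simpler than the neural-network models described above, but it shows the same shift: no rules are written by hand, and everything the program "knows" comes from the examples it is given. The three toy melodies and the function names `learn_transitions` and `generate` are made up for illustration and have nothing to do with the Schubert project.

```python
import random
from collections import defaultdict

# Made-up toy melodies (note names), standing in for a real corpus of scores.
TOY_CORPUS = [
    ["C", "D", "E", "C", "E", "G", "E", "C"],
    ["E", "G", "E", "D", "C", "D", "E", "C"],
    ["G", "E", "C", "D", "E", "G", "C"],
]


def learn_transitions(melodies):
    """Record which notes follow each note in the training melodies."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return dict(transitions)


def generate(transitions, start, length, seed=None):
    """Sample a new melody one note at a time from the learned transitions."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # the corpus never showed a note after this one
            break
        # Notes that followed more often appear more often in `options`,
        # so they are proportionally more likely to be chosen.
        melody.append(rng.choice(options))
    return melody
```

Running `generate(learn_transitions(TOY_CORPUS), "C", 12, seed=0)` produces a twelve-note melody in which every step from one note to the next was seen somewhere in the training melodies. Feed it a different corpus and its "style" changes without any code being rewritten.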

Although its final two movements were written by AI, Schubert’s Symphony No. 8 was rehearsed and performed by human musicians. Source: NBC News

Finally, research continues into the potential uses for AI not only in composition, but also in the areas of intelligent sound analysis and cognitive science. Several AI initiatives, such as Google Magenta and IBM Watson Beat, have been created in recent years to allow collaboration between artist and AI. Taryn Southern, a former American Idol semi-finalist, has been creating new music with this type of AI collaboration, which allows even non-composers to be involved in the music-making process.

“Break Free” by Taryn Southern with music written with the help of AI technology.

Her 2017 single “Break Free” was written with the help of AI, which Southern used as a guide for what became the final product. Although the final instrumental version is quite different from the original AI composition, it retains similar elements enhanced by Southern’s own musical creativity.

Clearly, AI has changed drastically since it was first used to write a piece of music in 1957. Now, new tools are arriving every day that allow composers and musicians to interact with musical AI programs.

Current composition tools

There are currently many AI programs on the market for those who want to use AI as a compositional tool. As mentioned earlier, Google Magenta and IBM Watson Beat are two tools that are generating a lot of attention. Most popular, though, are the compositional tools Amper Music and AIVA, which fill similar but distinct roles in the AI composition landscape.

Amper Music

Amper Music, created by three Hollywood film composers, is marketed as a solution for anyone who needs cheap, easy-to-produce background music. Rather than learning from mountains of existing compositions, this AI was taught music theory and how different music elicits certain emotional responses. No musical knowledge is required to use the Amper AI. Users simply make a few decisions about the way they want their music to sound by selecting “a mood, style, tempo, and length.” The resulting track is then editable, allowing the user to further customize the music with no compositional or production experience necessary.

This tool has been popularized by aforementioned singer Taryn Southern, creator of the first full-length album in collaboration with AI technology. The following video, featuring Amper CEO Drew Silverstein, talks more about the process that went into creating this album.

First album composed, produced entirely by AI. Source: YouTube

AIVA (Artificial Intelligence Virtual Artist)

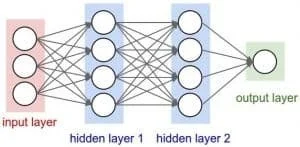

A second tool, created by a small Luxembourg startup, has garnered a lot of attention with its AI compositions. AIVA, or Artificial Intelligence Virtual Artist, composes classical music in the style of the old masters. It was fed massive amounts of music by composers like Bach, Mozart, and Beethoven and through this learning process created its own interpretation of music theory to apply to its compositions. Once given compositional parameters, AIVA produces sheet music that is then interpreted by human artists. In addition, AIVA is based on deep learning algorithms that allow it to learn and evolve without direct data inputs or outputs, allowing the AI to learn on its own after the initial programming.

Deep learning algorithmic model. Source: Futurism.com

According to a 2017 Futurism article on AIVA, the program has produced a full length album entitled ‘Genesis’ as well as many single tracks, earning it the title of Composer; it is the first AI to receive this distinction.

‘Genesis’ composition by AIVA. Source: YouTube

While AIVA is currently only being used to compose classical pieces of music, its creators are looking to expand its output and allow for the AIVA program to compose music in any style. However, even though listeners cannot tell the difference between AIVA compositions and those composed by humans (a type of musical Turing Test), the program still relies on humans to fuel its learning.

Tool Review

After reading about popular composition tools Amper Music and AIVA, I decided to experiment with both to learn more about how non-composers (like myself) could make use of these technologies and how quickly I could create something that sounded good.

I started with Amper since it is supposedly the most user-friendly and widely used tool. Upon creating an account and logging in, I was able to start a new project and select the parameters for my composition.

Screenshot of Amper selections to begin a project. Photo by author.

As pictured above, I was able to choose from a variety of styles of music, such as cinematic or hip hop, and then select the tone and mood of the song before deciding on a sound clip that would serve as the example sound for the AI. I chose “Good Time Alliance,” and after less than 30 seconds of processing, the program gave me a one-minute composition.

Screenshot of Amper workspace. Photo by author.

At this point I was able to listen to the composition created by Amper. Was it anything special? No. But for its intended purpose of filling in background music on a YouTube video or low budget film project, it would work well. I was also able to adjust the tempo, key, and instrumentation, although it wasn’t as customizable as I had hoped. Additionally, the tracks are not free and users are unable to download any projects without paying at least $5 for a personal license; this is an admittedly small price to pay compared to other music licensing sites. All in all, it took about 5 minutes to create this sound bite.

Next, I experimented with AIVA. After creating an account, I was able to watch a quick video tutorial to familiarize myself with the platform and start creating a track. As with Amper, users can choose from a variety of pre-set styles which the AI will use to help compose the track. The length, tempo, and number of compositions needed are customizable right from the beginning.

Screenshot of AIVA style selection options. Photo by author.

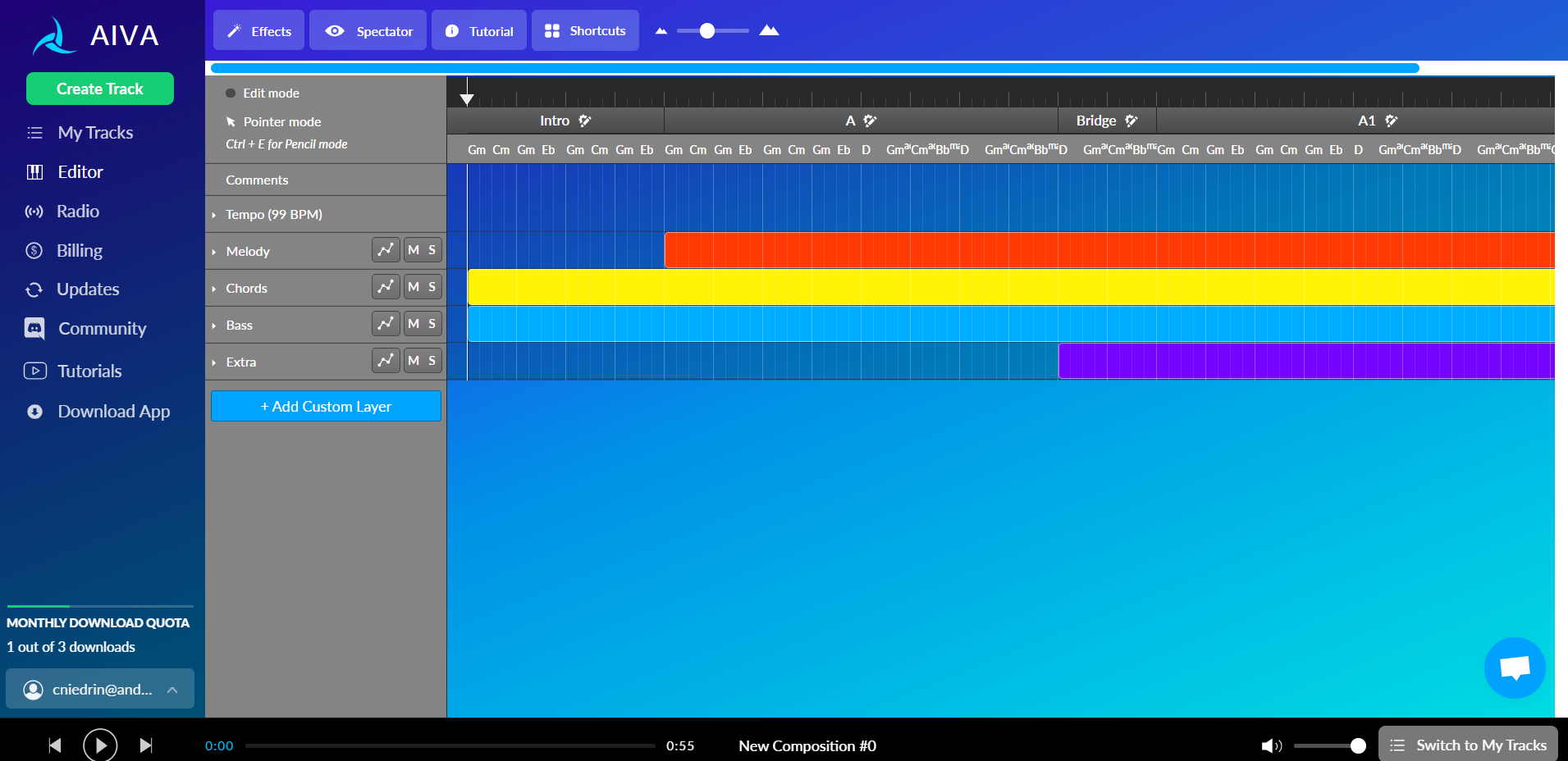

AIVA takes a bit longer to render than Amper, but the track was complete in about a minute. After taking an initial listen, I decided to make some changes and navigated to the Piano Roll screen, which allows users to customize their track.

Screenshot of AIVA Piano Roll screen. Photo by author.

By selecting any of the grey dropdown menus on the left side of the screen, users can change the tempo, the instrumentation, the dynamics, and the volume of each individual line within the track. The process is intuitive, and the program gives a reminder to re-render the track after every edit so progress is always saved. Given a longer period of time, it is easy to see how someone could create an impressive piece by using the initial AI composition as a modifiable framework.

After about 15 minutes of playing with the volume and instrumentation, I produced a fairly nice composition with a much more robust sound. Unlike Amper, AIVA allows users 3 downloads per month with a free account, which is nice for those who want to be able to share this music without cost.

After completing my own experimentation, I can say that both tools are relatively easy to use, and each comes with its own advantages and disadvantages. However, AIVA is slightly better due to its music quality and free download feature.

Implications and Thoughts for the Future

There is still more work to be done on AI compositional tools before they become autonomous creative beings. The compositions I created with Amper and AIVA exemplify why human intervention is still vital to AI composition being successful. Between programming and editing compositions, AI has yet to achieve a believable level of human ingenuity.

However, there is no doubt that AI is changing the music industry in significant ways. According to a 2019 Forbes article, AI is catalyzing significant growth in the industry by creating music, advancing audio mastering, and identifying up and coming stars for the industry. Furthermore, a McKinsey report stated that 70% of companies will have adopted some kind of AI technology by 2030; the music industry is certainly included in this number.

Although AI technology is in high demand, experts say that the technology is more of a complement to human creativity, allowing for increased accessibility to music-making by providing inspiration and tools for non-composers. Maybe one day AI will become autonomously creative, but for now, it looks like our AI music revolution is more of a science-fiction fantasy than an imminent reality.

Resources

“AI Music Composition Tools for Content Creators.” Accessed May 3, 2021. https://www.ampermusic.com/.

“AIVA – The AI Composing Emotional Soundtrack Music.” Accessed May 3, 2021. https://www.aiva.ai/.

“Algorithmic Composition – Wikipedia.” Accessed May 4, 2021. https://en.wikipedia.org/wiki/Algorithmic_composition.

Billboard. “‘New’ Nirvana Song Created 27 Years After Kurt Cobain’s Death Via AI Software.” Accessed April 6, 2021. https://www.billboard.com/articles/columns/rock/9551482/ai-software-generats-new-nirvana-song-27-years-after-kurt-cobain-death/.

Break Free Official Music Video – Composed with AI | Lyrics by Taryn Southern. Accessed May 4, 2021. https://www.youtube.com/watch?v=XUs6CznN8pw.

Brownlee, Jason. “What Is Deep Learning?” Machine Learning Mastery (blog), August 15, 2019. https://machinelearningmastery.com/what-is-deep-learning/.

Deahl, Dani. “How AI-Generated Music Is Changing the Way Hits Are Made.” The Verge, August 31, 2018. https://www.theverge.com/2018/8/31/17777008/artificial-intelligence-taryn-southern-amper-music.

Futurism. “A New AI Can Write Music as Well as a Human Composer.” Accessed May 3, 2021. https://futurism.com/a-new-ai-can-write-music-as-well-as-a-human-composer.

IBM Watson Music. “Listen to ‘Not Easy’, the New Collaboration by AlexDaKid + IBM Watson. #CognitiveMusic,” April 6, 2016. http://www.ibm.com/watson/music/uk-en.

Li, Chong. “A Retrospective of AI + Music.” Medium, September 24, 2019. https://blog.prototypr.io/a-retrospective-of-ai-music-95bfa9b38531.

“Lost Tapes of the 27 Club.” Accessed May 3, 2021. https://losttapesofthe27club.com/.

“Lost Tapes of the 27 Club – Drowned in the Sun – YouTube.” Accessed May 3, 2021. https://www.youtube.com/.

Magenta. “Magenta.” Accessed May 4, 2021. https://magenta.tensorflow.org/.

Mántaras, Ramon, and Josep Lluís Arcos. “AI and Music: From Composition to Expressive Performance.” AI Magazine 23 (September 1, 2002): 43–58.

Marr, Bernard. “The Amazing Ways Artificial Intelligence Is Transforming The Music Industry.” Forbes. Accessed May 3, 2021. https://www.forbes.com/sites/bernardmarr/2019/07/05/the-amazing-ways-artificial-intelligence-is-transforming-the-music-industry/.

“Modeling the Global Economic Impact of AI | McKinsey.” Accessed May 6, 2021. https://www.mckinsey.com/featured-insights/artificial-intelligence/notes-from-the-ai-frontier-modeling-the-impact-of-ai-on-the-world-economy.

NBC News. “AI Can Now Compose Pop Music and Even Symphonies. Here’s How Composers Are Joining In.” Accessed May 3, 2021. https://www.nbcnews.com/mach/science/ai-can-now-compose-pop-music-even-symphonies-here-s-ncna1010931.

OpenAI. “MuseNet,” April 25, 2019. https://openai.com/blog/musenet/.

Osiński, Błażej, and Konrad Budek. “What Is Reinforcement Learning? The Complete Guide.” Deepsense.Ai (blog), July 5, 2018. https://deepsense.ai/what-is-reinforcement-learning-the-complete-guide/.

Synced. “AI’s Growing Role in Musical Composition.” Medium, September 9, 2018. https://medium.com/syncedreview/ais-growing-role-in-musical-composition-ec105417899.

The National. “Artificial Intelligence and the the Future of Music Composition,” May 21, 2018. https://www.thenationalnews.com/arts-culture/music/artificial-intelligence-and-the-the-future-of-music-composition-1.732554.

“Turing Test – Wikipedia.” Accessed May 6, 2021. https://en.wikipedia.org/wiki/Turing_test.

SearchEnterpriseAI. “What Is Generative Modeling? – Definition from WhatIs.Com.” Accessed May 4, 2021. https://searchenterpriseai.techtarget.com/definition/generative-modeling.