By: Evangelia Bournousouzi

Traditional Photography Vs. AI-Generated Photography

Traditional photography has long been regarded as an essential part of society. Whether as a form of artistic expression (fine art photography) or as a form of historical documentation (photojournalism and documentary photography), it has played a crucial role in how people perceive and understand the world around them. Part of visual culture has been built on the foundation of traditional photography. For nearly two centuries, people have relied on it as a trustworthy source of information and inspiration. Nevertheless, in the saturated image economy of 2023, people are finding it increasingly difficult to trust the images they see in their everyday lives, and this is where Generative Artificial Intelligence (Gen AI) enters the discussion.

In contrast to traditional photography, where the camera lens gathers light rays and uses glass to focus them onto a single point, AI-generated images are produced from natural language descriptions called “prompts.” The programs that generate these images use artificial neural networks, trained through machine learning, to recognize colors, shapes, textures, and so on. Therefore, every time an AI image generator is given a description, it draws on the patterns it has learned from its training images to construct the desired outcome: high-quality fake visual content, based on a large body of traditionally photographed images.

It’s important to note that this article doesn’t cover the topic of deepfakes. Deepfakes are manufactured content, such as images or videos, that appears to be real but is entirely fabricated. These fakes manipulate facial appearances and make individuals look like they are doing or saying things they never did. This article, by contrast, focuses on AI-generated images that are produced from large image datasets without any intention of altering the facial appearance of real individuals.

These latest innovations raise some challenging questions: Are people able to distinguish between an AI-generated image and one captured with a camera? What impact will this have on their confidence in photography as a medium of visual storytelling?

AI-Generated Photography: How Does It Work?

Generative artificial intelligence programs create images by taking text descriptions (prompts) and running them through a machine learning model. Through deep learning, this model learns not only to recognize individual objects but also the relationships between those objects. Well-known examples are Midjourney and DALL-E.

Video 1. DALL-E 2 Explained.

Source: YouTube

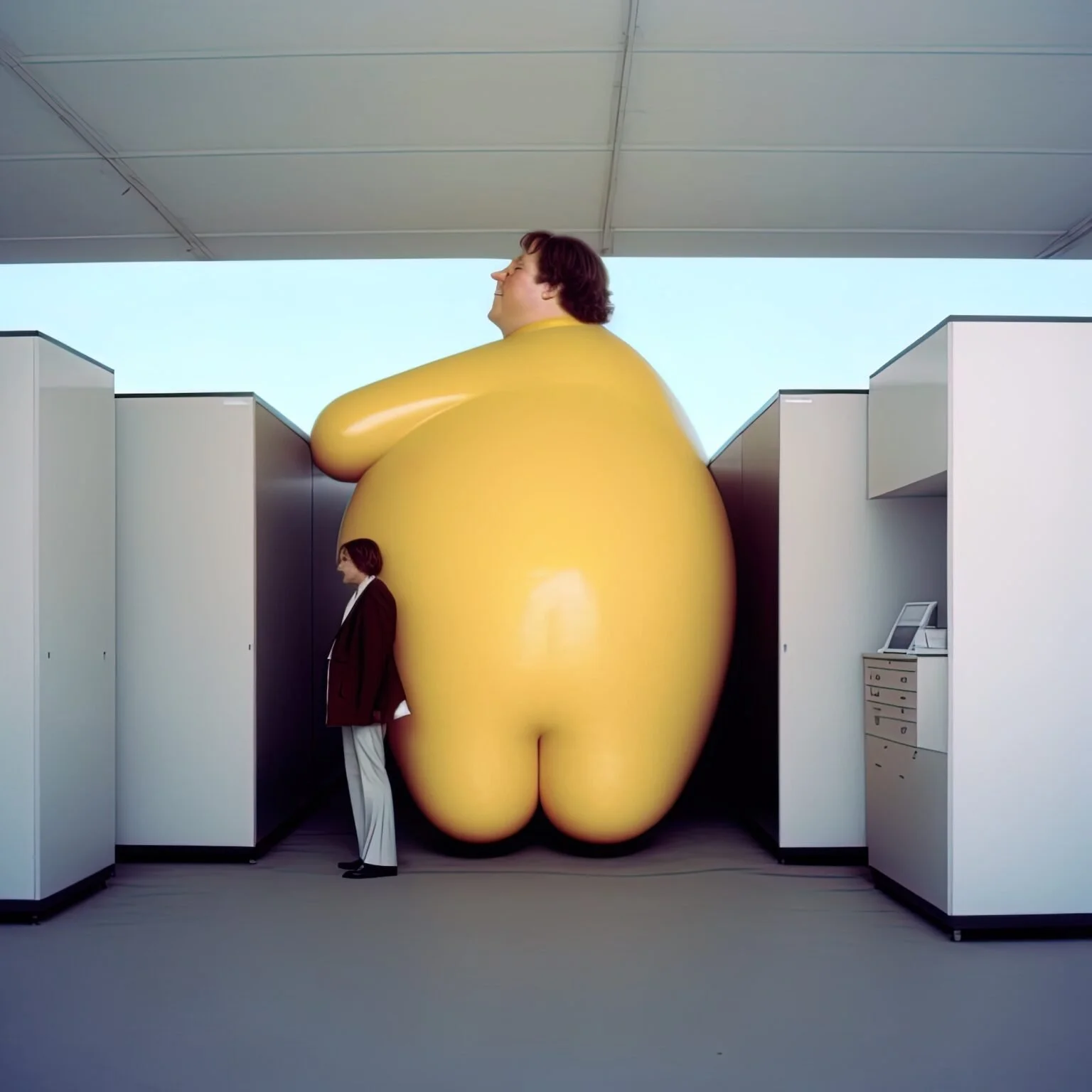

Figure 1. Charlie Engman, Parking Lot I, 2023. Image generated by DALL-E 2.

Source: Aperture

Figure 2. Charlie Engman, Sweetcheeks, 2023. Image generated by DALL-E 2.

Getty Images recently introduced Generative AI by Getty Images, a tool that pairs Getty’s vast creative library with NVIDIA’s AI capabilities to generate original visual content that is commercially safe and backed by Getty’s uncapped indemnification. This initiative respects the intellectual property of creators, a consideration that has been missing from applications of generative AI so far.

AI-generated images are now used not only by photographers but by everyone. There are no limitations on usage, except that some image-generating applications require payment or a subscription. With the help of artificial intelligence, anyone can produce AI-generated artworks, like those exhibited at the Gagosian gallery in New York in spring 2023. No special skills are required, but the better you understand the rules of photography, the more likely you are to create a successful image that imitates reality.

Are these real photos? – Human difficulty in telling the difference between AI-generated and real images

Thanks to rapid recent advancements, AI can produce many images, varied in style and color, that resemble real photographs. The case of the Sony World Photography Awards 2023, where an AI-generated image misled the organizers and won first place in its category, brings the critical question of distinguishing between AI-generated and real photographs back into focus. In fact, a recent study shows that humans struggle significantly to distinguish real photos from AI-generated ones, with a misclassification rate of 38.7%.

Figure 3. (c) The average human ability to distinguish between high-quality AI-generated images and real images is only 61% in HPBench. (d) The highest performing model trained on the Fake2M dataset, selected from MPBench, achieves an accuracy of 87% on HPBench.

Source: Seeing is not always believing: Benchmarking Human and Model Perception of AI-Generated Image. September 22, 2023.

The study aimed to benchmark both human capability and cutting-edge AI algorithms for fake image detection, using Fake2M, a newly collected large-scale dataset of fake images. It was presented at the 37th Conference on Neural Information Processing Systems (NeurIPS 2023), Track on Datasets and Benchmarks. Fifty participants from diverse backgrounds were given questionnaires and asked to distinguish AI-generated images from real ones. They were also asked to explain why they perceived an image as AI-generated. Their reasons included a lack of certain details, blurred and unclear edges, unrealistic colors or lighting, and irrational content.

Figure 4. Sample of the 50 AI-generated and 50 real images provided through a questionnaire form to 50 survey participants.

Source: Seeing is not always believing: Benchmarking Human and Model Perception of AI-Generated Image. September 22, 2023.
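To make the reported figures concrete, the short Python sketch below shows one way a misclassification rate could be computed from questionnaire answers. It is only an illustration: the function name `misclassification_rate` and the six sample answers are hypothetical and are not taken from the study's data or code.

```python
from typing import Sequence


def misclassification_rate(truth: Sequence[str], answers: Sequence[str]) -> float:
    """Return the fraction of images whose answer differs from the true label."""
    if len(truth) != len(answers):
        raise ValueError("truth and answers must have the same length")
    if not truth:
        raise ValueError("at least one image is required")
    wrong = sum(1 for t, a in zip(truth, answers) if t != a)
    return wrong / len(truth)


# Hypothetical answers from one participant for six images (not study data).
truth = ["ai", "real", "ai", "real", "ai", "real"]
answers = ["ai", "ai", "real", "real", "ai", "real"]

rate = misclassification_rate(truth, answers)
accuracy = 1 - rate
print(f"misclassified: {rate:.1%}, accuracy: {accuracy:.1%}")
```

Because accuracy is simply one minus the misclassification rate, the reported misclassification rate of 38.7% corresponds to an accuracy of 61.3%, which agrees with the roughly 61% human accuracy shown in Figure 3.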

Photographer Boris Eldagsen, who won a Sony World Photography Award in April 2023 but declined it, named the real issue: “The threat, is to democracy and to photojournalism; we have so many fake images, we need to come up with a way to show people what is what.” High-quality AI-generated images still exhibit various imperfections, but when they are carefully produced by creatives with the necessary knowledge, the result can be a misleading and altered version of reality. If an AI-generated image can win a photography competition and be perceived as a real one, then how much truth is there in our reality?

Disruption in the Photography Industry – What does the future look like?

Artificial Intelligence and machine learning show that a camera is no longer needed to produce a photo-like image. Imaginative human beings can work alongside clever systems to create a new medium for interpreting the world, one that closely resembles photography. But is it really photography? The use of AI-generated images by photographers has become quite popular lately, and some have even sold their AI artworks for good money. However, Michael Christopher Brown’s latest project, “90 Miles,” sparked a debate in the industry about the impact of AI on photojournalism. As an ex-photojournalist, Brown himself stated that although AI-generated images can tell a story, they cannot be considered journalism, only storytelling. This raises questions about what the future of photojournalism will look like and whether AI should be used to document real-life events. Nonetheless, it is clear that AI-generated images have their limitations and cannot replace human photojournalists in certain situations.

Very recently, World Press Photo (WPP), a Netherlands-based organization that runs one of the most prestigious photojournalism contests, announced that it will no longer accept submissions of AI-generated images. This decision was made after receiving feedback from the photographic community, which raised concerns about the use of AI-generated images in the competition. As a result, WPP excluded both generative fill and fully generated images from the Open Format. This move highlights the impact that AI is having on the industry, and the need for careful consideration and discussion around its use in photojournalism.

Figure 5. From the series 90 Miles (Image generated by AI) © Michael Christopher Brown

Figure 6. From the series 90 Miles (Image generated by AI) © Michael Christopher Brown

AI cannot observe a scene and focus on its interesting aspects, nor can it prompt changes in the scene being photographed. Traditional photography does both, and most of the time it also requires artists to engage with their subjects. Computational photography has multiple physical limitations. But there is also a great deal of freedom regarding who uses it, how they use it, and for what reasons. This freedom of use, along with the absence of a legal framework around AI-generated images, is what confuses audiences and makes photography less trustworthy today.

“I love photography, I love generating images with AI, but I’ve realized, they’re not the same. One is writing with light; one is writing with prompts. They are connected, the visual language was learned from photography, but now AI has a life of its own.”

In the past, analog film was the primary medium used for photography. Digital photography followed, and we are now witnessing the emergence of a new player in the field: Artificial Intelligence. This does not imply that traditional mediums will disappear or that AI will take over the artistic process. After all, digital photography did not completely replace analog photography, and many photographers still prefer using film for better results. However, the way in which we perceive photography has changed significantly. What was once considered a reliable medium for documenting reality is now at a turning point, becoming less trustworthy. As we progress in this technological era, it is imperative to prioritize the responsible creation and application of generative AI to ensure its positive impact on society.

-

Gagosian. “Bennett Miller, 976 Madison Avenue, New York, March 21–April 22, 2023,” February 16, 2023. https://gagosian.com/exhibitions/2023/bennett-miller/.

Getty Images Press Site – Newsroom – Getty Images. “Getty Images Launches Commercially Safe Generative AI Offering,” September 24, 2023. https://newsroom.gettyimages.com/en/getty-images/getty-images-launches-commercially-safe-generative-ai-offering.

Kent, Charlotte. “How Will AI Transform Photography?” Aperture, March 16, 2023. https://aperture.org/editorial/how-will-ai-transform-photography/.

Lu, Zeyu, Di Huang, Lei Bai, Xihui Liu, Jingjing Qu, and Wanli Ouyang. “Seeing Is Not Always Believing: A Quantitative Study on Human Perception of AI-Generated Images,” September 22, 2023. https://arxiv.org/pdf/2304.13023.pdf.

McEvoy, Rick. “Photography Explained: How Is Artificial Intelligence Used in Photography? Will AI Ever Replace Me?” Apple Podcasts, 2023. https://podcasts.apple.com/gb/podcast/how-is-artificial-intelligence-used-in-photography/id1535978701?i=1000587503427.

Story, Derrick. “Putting the AI in ImAge with Aaron Hockley – TDS Photo Podcast.” The Digital Story, October 24, 2022. https://thedigitalstory.com/2022/10/putting-the-AI-in-ImAge-with-Arron-Hockley-photo-podcast.html.

Terranova, Amber. “How AI Imagery Is Shaking Photojournalism.” Blind Magazine, April 26, 2023. https://www.blind-magazine.com/stories/how-ai-imagery-is-shaking-photojournalism/.

Williams, Zoe. “‘AI Isn’t a Threat’ – Boris Eldagsen, Whose Fake Photo Duped the Sony Judges, Hits Back.” The Guardian, April 18, 2023, sec. Art and design. https://www.theguardian.com/artanddesign/2023/apr/18/ai-threat-boris-eldagsen-fake-photo-duped-sony-judges-hits-back.

World Press Photo. “Rules Change Prohibits Generative AI Images in All Categories.” https://www.worldpressphoto.org/news/2023/could-an-ai-image-win-our-contest.

Yavuz, Ozan. “Novel Paradigm of Cameraless Photography: Methodology of AI-Generated Photographs.” Electronic Workshops in Computing, July 2021. https://doi.org/10.14236/ewic/eva2021.35.